[Day 129] AI with a Scottish accent? + MLE lecture by Chris Piech (Stanford CS109)

Hello :)

Today is Day 129!

A quick summary of today:

- I asked myself: is there an AI that can speak with a Scottish accent without sounding robotic?

- covered Lecture 21 from Stanford's CS109 on Maximum Likelihood Estimation

Firstly, about the Scottish AI

I was looking for videos to practise my Scottish accent (which is awful). After watching a few, I wondered whether there was an AI model that, given some text, could say it in a Scottish accent. I started looking, and the best I could find was this, which tries to do it but sounds maybe 5% (generally) Scottish.

Then, I started looking on Hugging Face and Kaggle for models developed by solo devs: nothing. I searched TTS models; most are trained on general southern UK English, American, Indian or Australian accents, but none can speak with a Scottish accent.

Then, I started looking for a dataset. I could not find any either. That is when I tried something.

I found a video on YouTube that uses only Scottish accents: 'The Glasgow Uni accent'. I downloaded its audio and transcribed it myself.

The short version is: 'Hello? Hi Shanese. How are you? Who is this? It's me Kath from school, silly. Why you talking like that? Like what? Like you're changing. Don't be daft. Anyway, that's me done with uni...' (I did the full video but this is just the start).

While transcribing, even though I could follow most of it, there were a few words that for the life of me I could not make out, and YouTube's closed captions could not help either.

I managed this for one video. But what if I could do it for many, and transcribe them properly? At the time, I thought this would require manual work, because the Scottish audio has to be transcribed correctly, and a general AI model that was not trained on Scottish accents might not transcribe them perfectly (maybe this is something to test). 'I need a Scot!' was my first thought. So I made a few posts on r/learnmachinelearning and r/scotland proposing a dataset of transcribed audio files, so that future generations of AI can also speak with a Scottish accent.

Comments came in with a lot of good info about organisations and institutions that aim to preserve Gaelic and promote Scottish, and about websites I could use for data. And after a few hours, someone interested replied! I will call them 'M'. M shared a YouTube video of short phrases in a general Scottish accent as an example of what we could use for our dataset. We connected on LinkedIn and will have a chat about it this Saturday. Exciting!

Before then, I will look at some research papers on audio file length when training TTS models. My initial plan is to get this dataset going (audio files + transcriptions), but we will discuss it with M.

Secondly, about MLE by Professor Chris Piech

My thoughts while watching: it's kind of scary how great these explanations are

Jokes aside, wow! Until now, I thought the best MLE explanation was in the book An Introduction to Statistical Learning, but this lecture definitely beats it. That is not to say someone new to AI could watch this one lecture and know MLE; the previous lectures build up to MLE without you realising it. And *all* the explanations along the way are so clear, and now MLE too. Anyway, below are some screenshots I took.

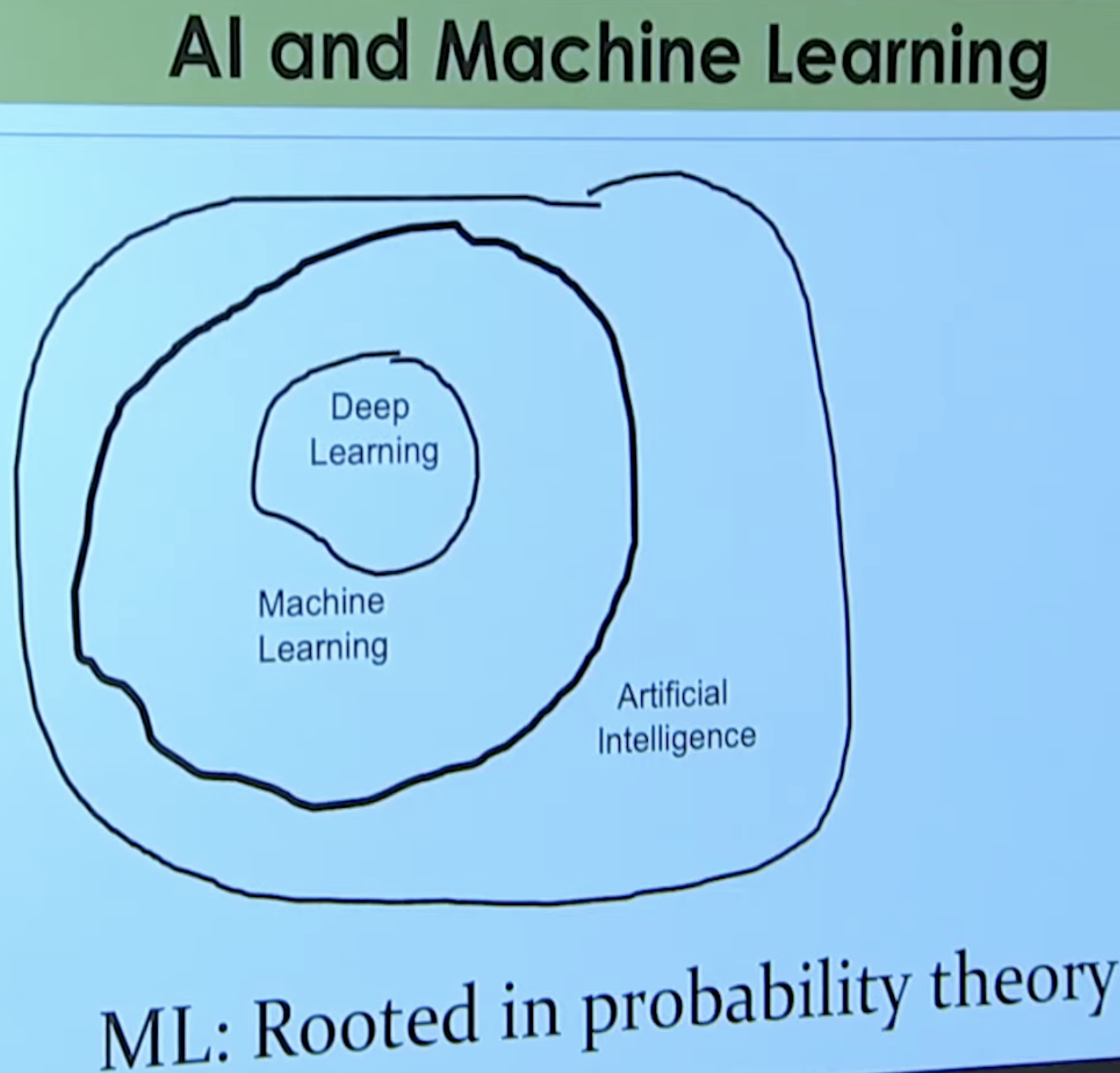

We begin with an image of how AI, ML and DL fit together

Then comes our path to DL. In that big box there are 3 mini boxes

The 1st one we already learned how to do in the lectures so far: getting unbiased estimators (the 1st part in the Path image)

Now, for the 2nd box, MLE: the theory behind a general method for choosing the numbers (parameters) in a model

We begin by trying to guess the mu and sigma behind some random data points, i.e. finding the parameters that best explain the data

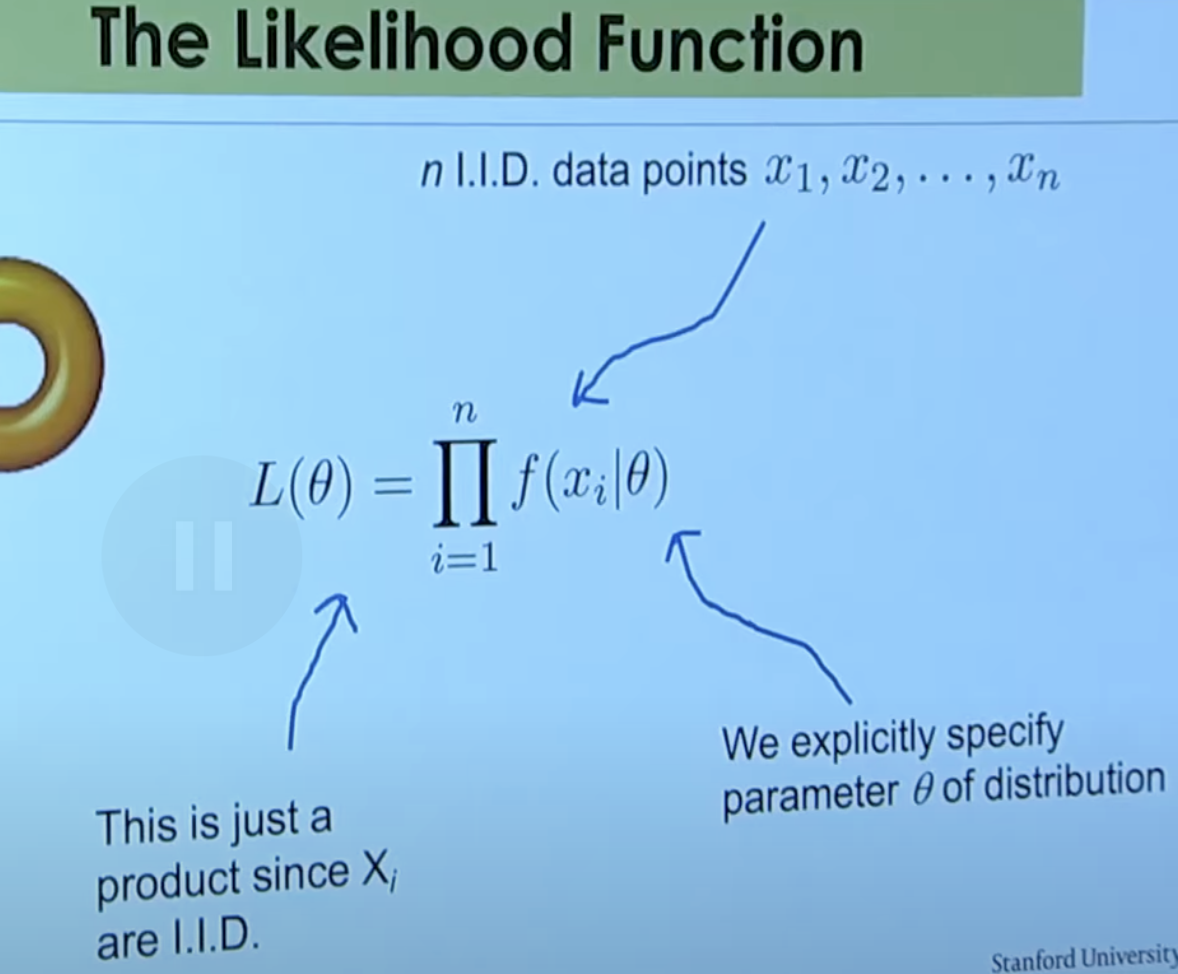

We can find those params using argmax: the arguments (parameter values) that maximise the likelihood function

While max looks for the max value, argmax looks for the input that results in the max value. We are not really interested in how likely our data is; what we're really interested in is which input of mean and std makes the data look most likely.
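To make max vs argmax concrete, here is a minimal Python sketch. It assumes a Normal model with sigma fixed at a hypothetical 0.5, five made-up data points, and a brute-force grid of candidate mu values (a stand-in for the calculus the lecture uses, not the lecture's method).

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of Normal(mu, sigma^2) at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / math.sqrt(2 * math.pi * sigma ** 2)

def likelihood(data, mu, sigma):
    """Likelihood of iid data: the product of the individual densities."""
    return math.prod(normal_pdf(x, mu, sigma) for x in data)

data = [4.8, 5.1, 5.4, 4.9, 5.3]  # hypothetical observations
sigma = 0.5                       # assumption: sigma is known and fixed
candidates = [i / 100 for i in range(400, 601)]  # mu = 4.00, 4.01, ..., 6.00

# max: the biggest likelihood value (how likely the data looks at best)
best_value = max(likelihood(data, mu, sigma) for mu in candidates)
# argmax: the input mu that produces that biggest value
best_mu = max(candidates, key=lambda mu: likelihood(data, mu, sigma))

print(best_mu)  # 5.1, which is also the sample mean
```

The number we actually keep is `best_mu`; `best_value` on its own says nothing about which parameters to use.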

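It turns out that the argmax of a function is the same as the argmax of log(function), because log is strictly increasing, so it never changes which input gives the biggest value. And the log helps us deal with very, very, very small numbers (a product of many probabilities shrinks towards 0, while a sum of logs stays manageable) and also cleans up the math (as seen later)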
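A small Python sketch of both points, on a hypothetical sample of 2000 draws from Normal(5, 0.5^2): the raw product of densities underflows to 0.0 in floating point, the sum of logs does not, and on a smaller subset the grid argmax is the same with or without the log.

```python
import math
import random

def normal_pdf(x, mu, sigma):
    """Density of Normal(mu, sigma^2) at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / math.sqrt(2 * math.pi * sigma ** 2)

random.seed(0)
data = [random.gauss(5.0, 0.5) for _ in range(2000)]  # hypothetical sample

# Multiplying 2000 densities (each below 1 here) underflows to exactly 0.0...
likelihood = math.prod(normal_pdf(x, 5.0, 0.5) for x in data)
# ...while the sum of their logs is an ordinary finite number.
log_likelihood = sum(math.log(normal_pdf(x, 5.0, 0.5)) for x in data)
print(likelihood, log_likelihood)

# log is strictly increasing, so it never changes *which* mu wins.
small = data[:5]
grid = [i / 100 for i in range(400, 601)]

def lik(mu):
    return math.prod(normal_pdf(x, mu, 0.5) for x in small)

def log_lik(mu):
    return sum(math.log(normal_pdf(x, mu, 0.5)) for x in small)

print(max(grid, key=lik) == max(grid, key=log_lik))
```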

The Holy Grail for MLE
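For reference (the slide is not reproduced here), the MLE objective for iid samples X_1, ..., X_n from a distribution with PMF/PDF f(x | θ) is usually written as:

$$
\hat{\theta} = \arg\max_{\theta} \prod_{i=1}^{n} f(X_i \mid \theta) = \arg\max_{\theta} \sum_{i=1}^{n} \log f(X_i \mid \theta)
$$

The product form relies on the iid assumption, and taking the log turns it into a sum.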

(we always assume iid; otherwise the likelihood does not split into a product over data points, and nothing works)

Then we derived the MLE for Poisson, which turns out to be just the average of the data points.
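A minimal check of the Poisson result, using hypothetical counts. The log-likelihood is LL(λ) = Σ [x_i log λ - λ - log(x_i!)]; setting dLL/dλ = Σ x_i / λ - n to 0 gives λ = (1/n) Σ x_i. The sketch compares that closed form against a brute-force grid search (my own illustration, not from the lecture).

```python
import math

def poisson_log_likelihood(data, lam):
    """LL(lambda) = sum_i [x_i * log(lambda) - lambda - log(x_i!)]."""
    # math.lgamma(x + 1) == log(x!) for non-negative integers x
    return sum(x * math.log(lam) - lam - math.lgamma(x + 1) for x in data)

counts = [2, 4, 3, 5, 1, 3]  # hypothetical iid Poisson counts
closed_form = sum(counts) / len(counts)  # MLE from setting dLL/dlambda = 0

grid = [i / 100 for i in range(1, 1001)]  # lambda from 0.01 to 10.00
numeric = max(grid, key=lambda lam: poisson_log_likelihood(counts, lam))

print(closed_form, numeric)  # 3.0 3.0
```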

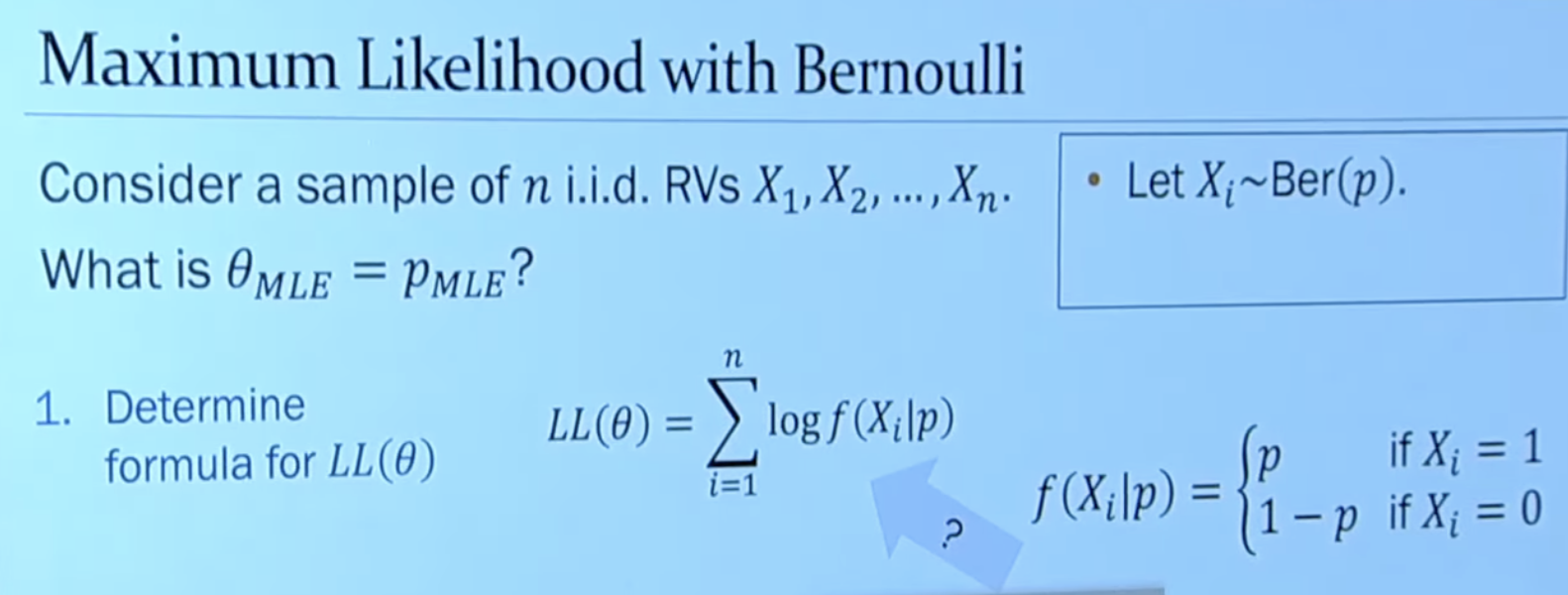

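Then we tried it for Bernoulli

However, the PMF written piecewise (p if x = 1, 1 - p if x = 0) is not differentiable!!!

The right is a continuous version of the left expression: p^x (1 - p)^(1 - x), which gives p when x = 1 and 1 - p when x = 0, and can be differentiated with respect to p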

Result: choose your p to be the sample mean (same as Poisson)
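Same idea for Bernoulli, with hypothetical coin flips and the differentiable form p^x (1 - p)^(1 - x), so LL(p) = Σ [x_i log p + (1 - x_i) log(1 - p)]. Setting the derivative Σ x_i / p - Σ (1 - x_i) / (1 - p) to 0 gives p = sample mean; the sketch checks this with a grid search.

```python
import math

def bernoulli_log_likelihood(data, p):
    """LL(p) = sum_i [x_i * log(p) + (1 - x_i) * log(1 - p)]."""
    return sum(x * math.log(p) + (1 - x) * math.log(1 - p) for x in data)

flips = [1, 0, 1, 1, 0, 1, 0, 1]  # hypothetical coin flips (1 = heads)
closed_form = sum(flips) / len(flips)  # sample mean = 5/8

grid = [i / 1000 for i in range(1, 1000)]  # p from 0.001 to 0.999, avoids log(0)
numeric = max(grid, key=lambda p: bernoulli_log_likelihood(flips, p))

print(closed_form, numeric)  # 0.625 0.625
```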

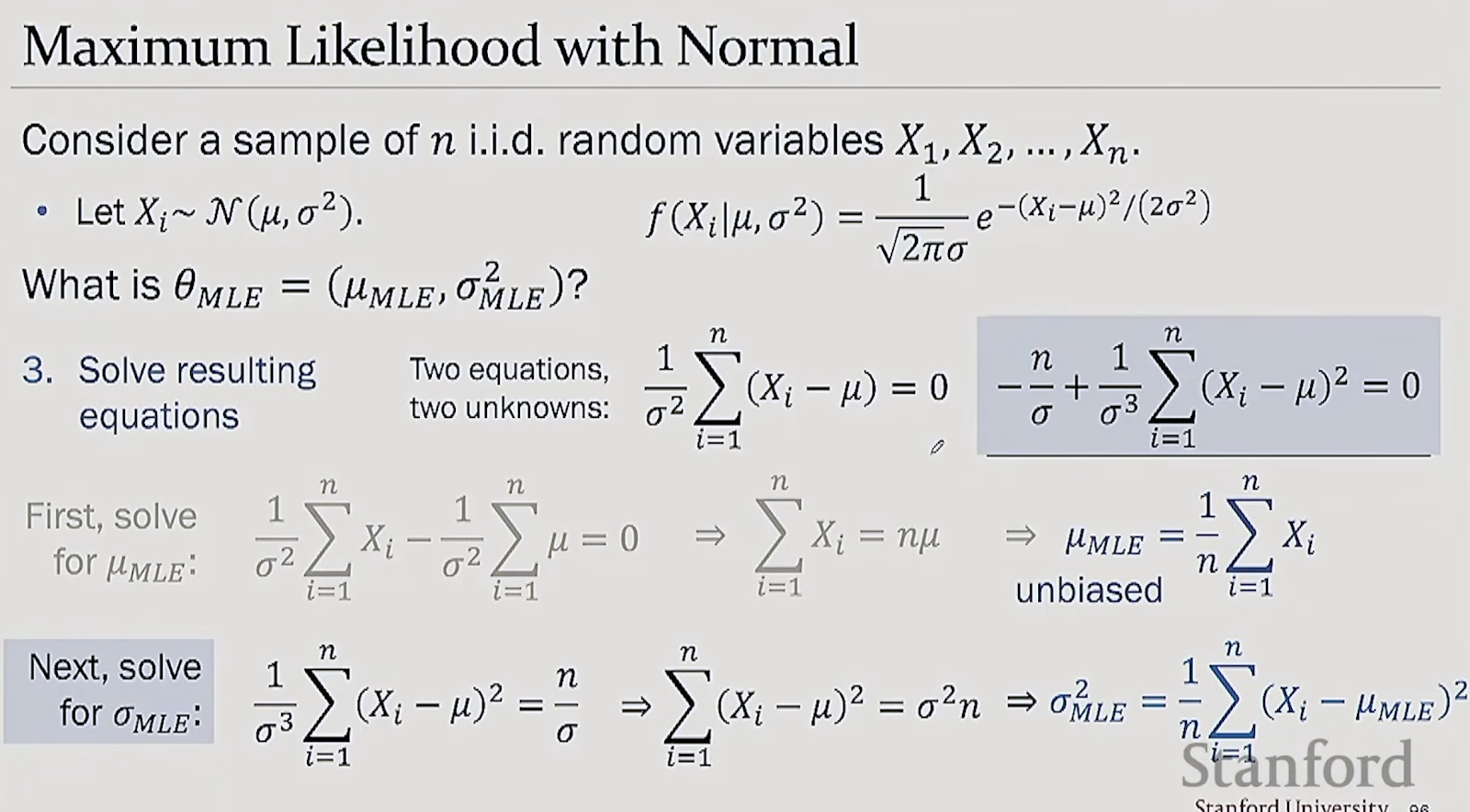

Then for a Normal distribution

However, the MLE for the variance is a biased estimator (it divides by n, not n - 1).

Then, MLE with a Uniform distribution
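A sketch of both results on a hypothetical sample. For the Normal, the MLE is the sample mean plus the average squared deviation, dividing by n; Python's `statistics.pvariance` also divides by n, while `statistics.variance` uses n - 1. For Uniform(α, β), the likelihood is (1/(β - α))^n when every point lies in [α, β] and 0 otherwise, so it is maximised by the tightest interval around the data: α = min, β = max (the standard result; the lecture's own slide is not reproduced here).

```python
import statistics

def normal_mle(data):
    """MLE for a Normal: mu_hat = sample mean, var_hat = mean squared deviation."""
    n = len(data)
    mu_hat = sum(data) / n
    var_hat = sum((x - mu_hat) ** 2 for x in data) / n  # divides by n, not n - 1: biased
    return mu_hat, var_hat

def uniform_mle(data):
    """MLE for Uniform(alpha, beta): the tightest interval containing all the data."""
    return min(data), max(data)

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # hypothetical sample

mu_hat, var_hat = normal_mle(data)
print(mu_hat, var_hat)            # 5.0 4.0
print(statistics.variance(data))  # unbiased (n - 1) estimate, 32/7
print(uniform_mle(data))          # (2.0, 9.0)
```

The gap between 4.0 and 32/7 is exactly the "no n-1" bias mentioned above; it shrinks as n grows.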

Finally, we summarised the properties of MLE

Just amazing. What a great way to introduce MLE, a key concept.

That is all for today!

See you tomorrow :)