[Day 69] Training an LLM to generate Harry Potter text

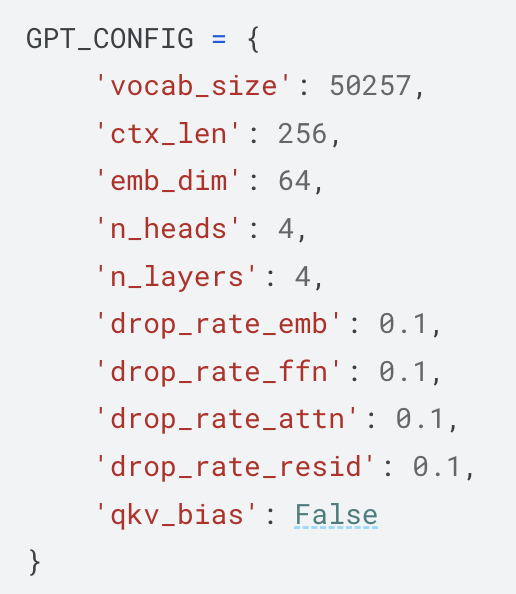

Hello :) Today is Day 69! A quick summary of today: tried to build upon the built LLM from the book from the days before, and write a training loop in the hopes of generating some Harry Potter text ( kaggle notebook ) Firstly I will provide pictures of the implementation (then share my journey today) The built transformer is based on this configuration Dataset is Harry Potter book 1 (Harry Potter and the Philosopher's Stone) text file from kaggle. Used batch size 16, and a train:valid ratio 9:1 Model architecture code: Multi-head attention GELU activation function Feed forward network Layer normalization Transformer block GPT model (+generate function) Optimizer: AdamW with learning rate 5e-4 and 0.01 weight decay. Ran for 1000 epochs. Final number of model parameters: 1,622,208 million Now, about my journey today ~ The easy part of was the dataset choice - harry potter. The hard part was choosing hyperparams that would end up in not perfect, but somewhat readable and s...