[Day 69] Training an LLM to generate Harry Potter text

Hello :)

Today is Day 69!

A quick summary of today:- tried to build upon the built LLM from the book from the days before, and write a training loop in the hopes of generating some Harry Potter text (kaggle notebook)

Firstly I will provide pictures of the implementation

(then share my journey today)

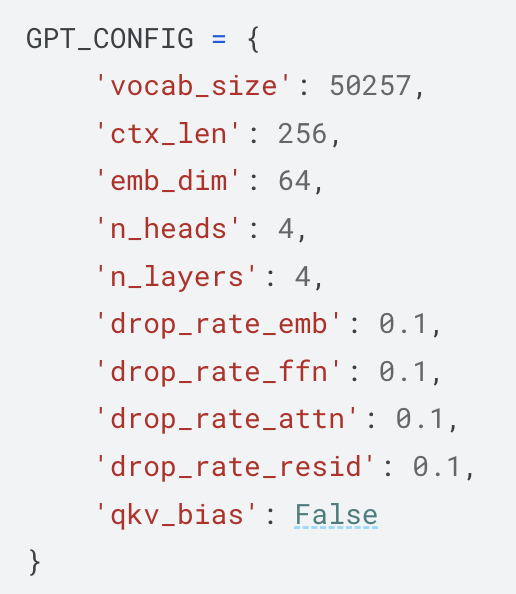

The built transformer is based on this configuration

Dataset is Harry Potter book 1 (Harry Potter and the Philosopher's Stone) text file from kaggle. Used batch size 16, and a train:valid ratio 9:1

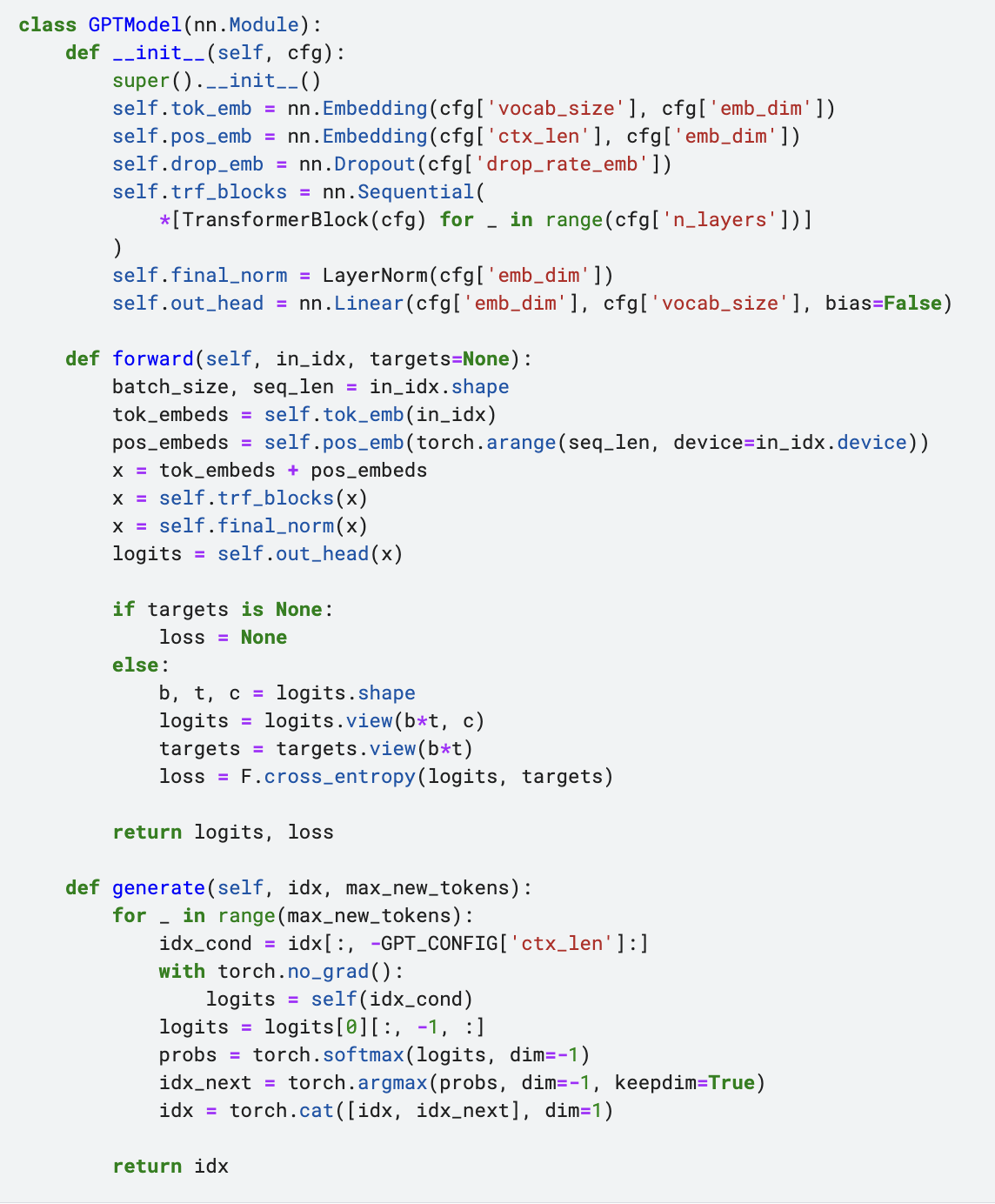

Model architecture code:

Multi-head attention

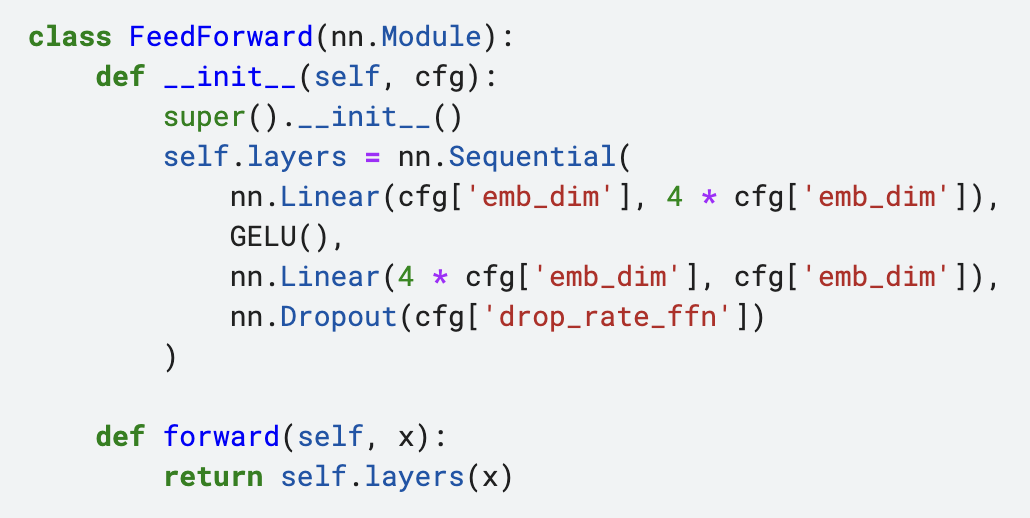

GELU activation functionFeed forward networkLayer normalizationTransformer block

GPT model (+generate function)

Final number of model parameters: 1,622,208 million

Now, about my journey today ~

The easy part of was the dataset choice - harry potter.

The hard part was choosing hyperparams that would end up in not perfect, but somewhat readable and some logic to it.

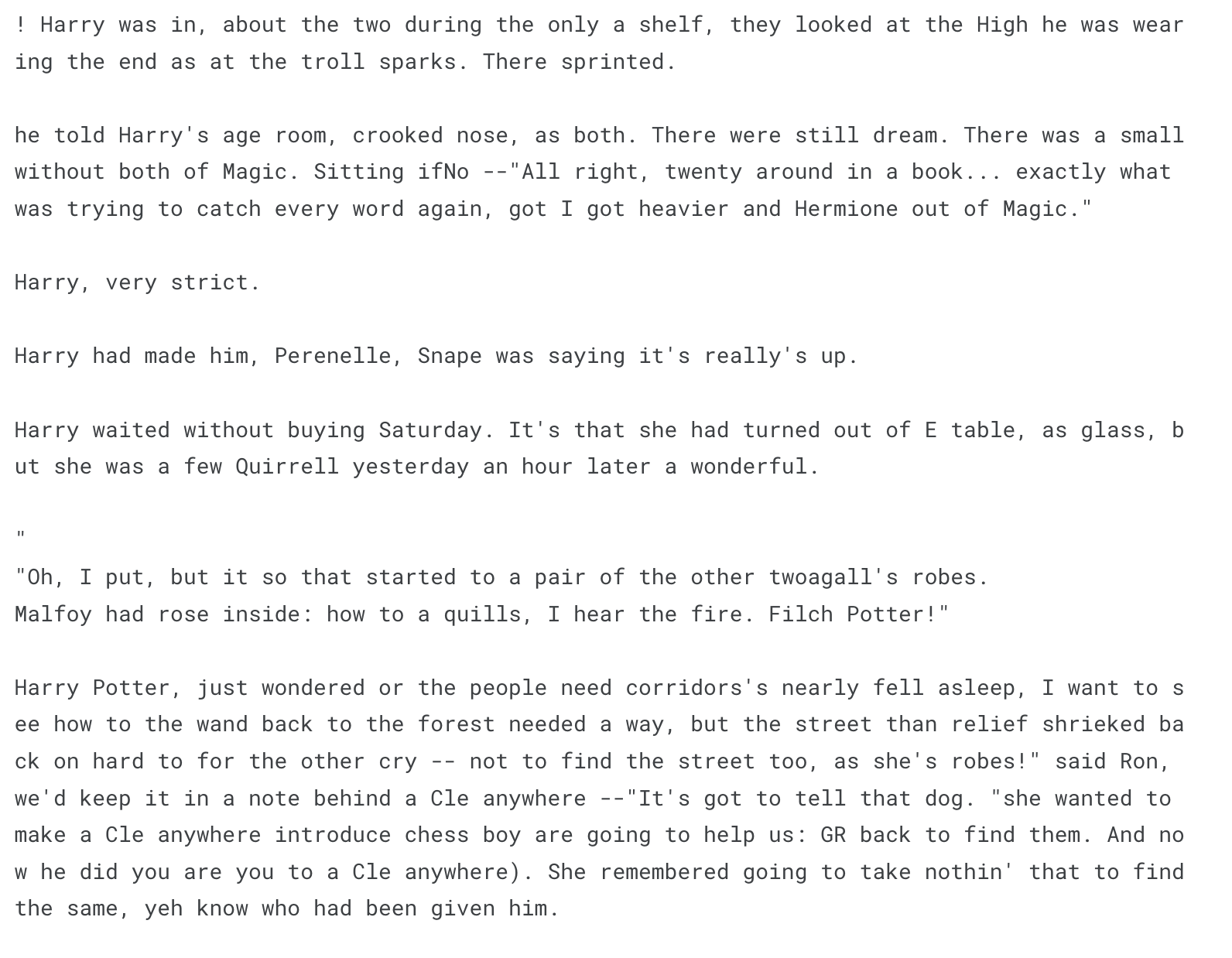

The result is:

Initially, I was doing it with just a train_dataloader (no validation) and I found a combination of hyperparams (manual search) that resulted in going to ~0.4 loss and the text was readable. Then I wanted to add validation to my process. That is when, almost every combination of hyperparams (higher learning rates, lower learning rates, embedding dims, context length, playing with the GPT_CONFIG) resulted in exploding valid loss. During my time today, I was running different versions on colab, my laptop (both slow) and on kaggle (fast), and I was getting great training, but lowering and at some point increasing valid loss (also at the points at which the valid loss started increaseing, the traing loss was still high, I remember in some cases still between 3.~6.). Lowering the learning rate was the biggest factor affecting the valid loss jumping after some epochs. The training was slow, so many params and understandable. Of course - a transformer might be an overkill for such a small (~600KB) dataset, but I just wanted to practice, to see the transformer in action. Still lots to learn about the inner workings, definitely using a hyperparam search would help, and also using weight clipping and lr scheduler - which I saw are used with transformers. I used them for 2 runs, but then I removed them, because at that point I was switching between train and train+valid training loop because I thought my code was wrong in how I calculated the valid loss.

Below is a generated text from another run that is on kaggle too (version 1).

It is ...something... haha.

That is all for today!

See you tomorrow :)