[Day 117] Some linear algebra + eigenvector/values and transferring more posts to the new blog

Hello :)

Today is Day 117!

A quick summary of today:

- watched a couple of 3Blue1Brown's videos

- transferred more posts to the new blog

Firstly, I watched this one about abstract vector spaces

In abstract vector spaces, we are dealing with a space that exists independently of the coordinates we are given, and the coordinates can be arbitrary, depending on what we choose as our basis vectors.

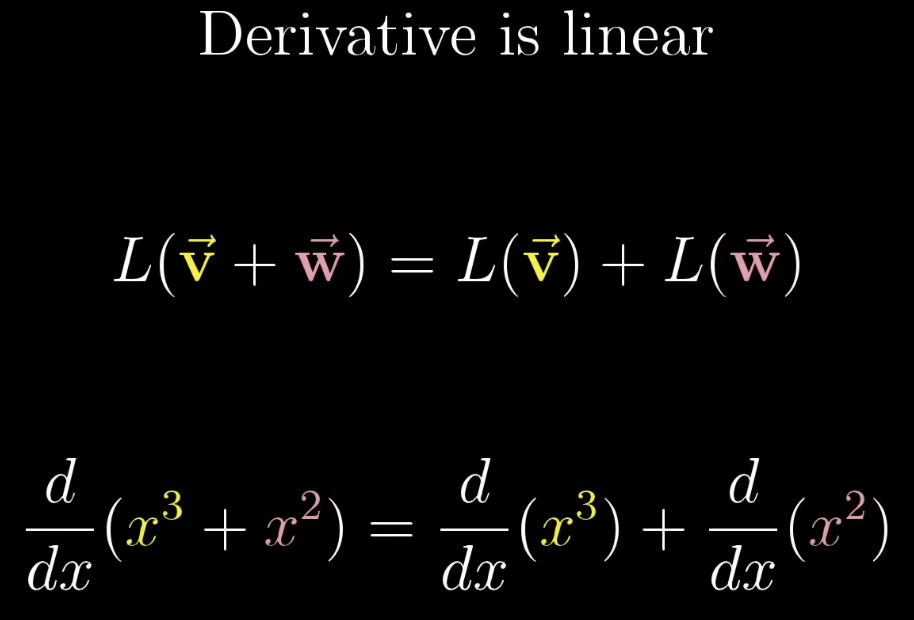

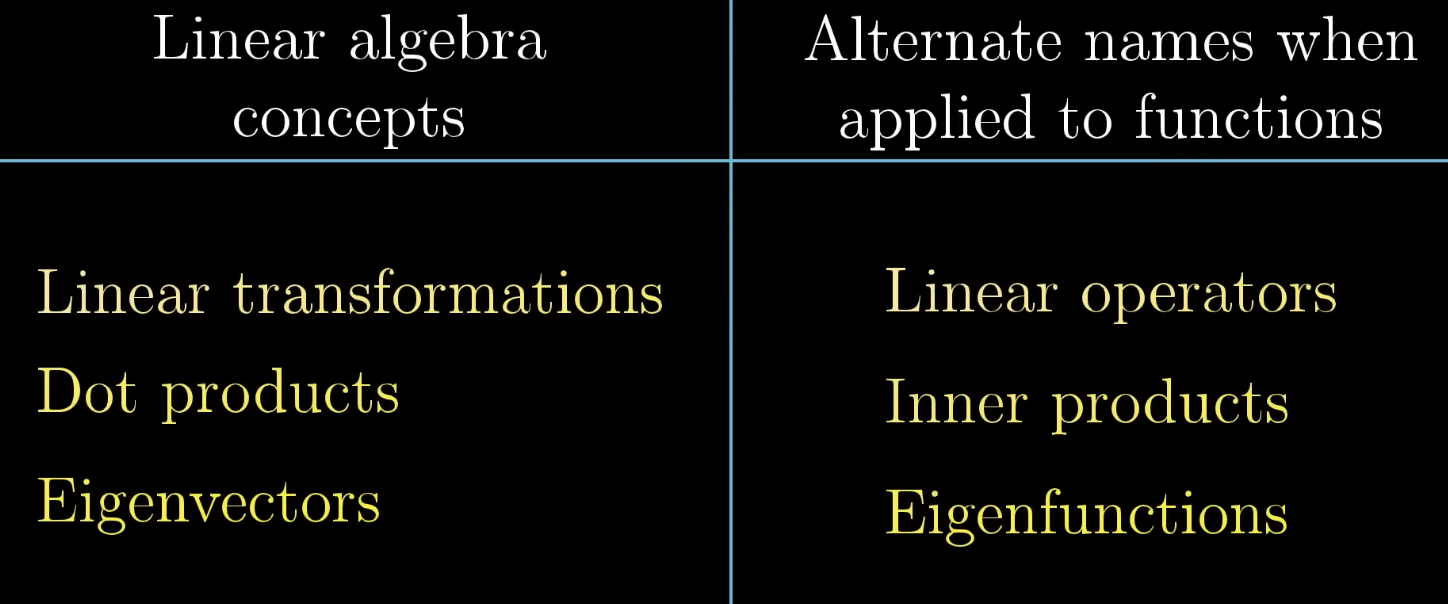

Determinants and eigenvectors don't care about the coordinate system: the determinant tells us how a transformation scales areas, and eigenvectors are the vectors that stay on their own span during a transformation. We can change the coordinate system, and it won't affect either of these.

Functions also have vector-ish qualities. In the same way we can add 2 vectors together, we can add 2 functions to get a third, resulting function. It is similar to adding vectors coordinate by coordinate.

Similarly, we can scale a function, and it is the same as scaling a vector by a number.
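To make the "functions behave like vectors" idea a bit more concrete, here is a minimal Python sketch. The helper names `add` and `scale`, and the two example functions `f` and `g`, are my own picks for illustration and are not from the video.

```python
from typing import Callable

Func = Callable[[float], float]


def add(f: Func, g: Func) -> Func:
    """Pointwise sum: (f + g)(x) = f(x) + g(x)."""
    return lambda x: f(x) + g(x)


def scale(c: float, f: Func) -> Func:
    """Pointwise scaling: (c * f)(x) = c * f(x)."""
    return lambda x: c * f(x)


# Two example functions, chosen arbitrarily for illustration.
f = lambda x: x ** 2
g = lambda x: 3 * x + 1

h = add(f, g)      # h(x) = x^2 + 3x + 1
k = scale(2.0, f)  # k(x) = 2 * x^2

print(h(2.0))  # 4 + 7 = 11.0
print(k(3.0))  # 2 * 9 = 18.0
```

Each input `x` plays the role of one "coordinate", so adding or scaling functions is like adding or scaling vectors with infinitely many coordinates.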

Secondly, eigenvectors and eigenvalues

A while ago, during Days 47-52, when I covered KAIST's AI503 Math for AI, I got introduced to these two concepts. I kept seeing them around and knew how to calculate them, but I was never 100% sure what they meant. Today I learned ^^

Imagine a linear transformation in 2 dimensions, and compare the space before and after we apply it.

Most vectors will get knocked off their original span during the transformation. However, there are some that just get stretched/squashed but still remain on their own span.

For example, the black and green vectors below remain on their own span after the transformation.

These vectors are called eigenvectors of the transformation.

And the eigenvalue is the amount by which the vector is stretched/squashed during the transformation.

More formally, multiplying an eigenvector by the transformation matrix gives the same result as multiplying that eigenvector by its eigenvalue.
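Here is a small sketch of that statement in plain Python. The matrix `A` and the test vectors are assumptions I picked for illustration (they are not necessarily the ones in the pictures above), and `mat_vec` and `stays_on_span` are hypothetical helper names.

```python
def mat_vec(A, v):
    """Multiply a 2x2 matrix A (list of rows) by a 2D vector v."""
    return [
        A[0][0] * v[0] + A[0][1] * v[1],
        A[1][0] * v[0] + A[1][1] * v[1],
    ]


def stays_on_span(A, v):
    """True if A v is parallel to v, i.e. their 2D cross product is zero."""
    w = mat_vec(A, v)
    return v[0] * w[1] - v[1] * w[0] == 0


# Example matrix, chosen for illustration only.
A = [[3, 1],
     [0, 2]]

print(mat_vec(A, [1, 0]))   # [3, 0]  = 3 * [1, 0], so the eigenvalue is 3
print(mat_vec(A, [-1, 1]))  # [-2, 2] = 2 * [-1, 1], so the eigenvalue is 2
print(mat_vec(A, [1, 1]))   # [4, 2], not a multiple of [1, 1]

print(stays_on_span(A, [-1, 1]))  # True
print(stays_on_span(A, [1, 1]))   # False
```

I used integer entries so the parallel check is exact; with floats, a small tolerance would probably be needed instead of `== 0`.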

Also, I found a quick trick on how to compute eigenvalues (for 2d cases).
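As I understand it, the trick is: take m, the mean of the two diagonal entries, and p, the determinant, and the eigenvalues are m ± sqrt(m^2 - p). Below is a rough Python sketch. The function name `eigenvalues_2x2` is hypothetical, and the example matrix is an assumption: I only chose it so that m = 2 and p = -1, and it may not be the one from the original picture.

```python
import math


def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] via m +- sqrt(m^2 - p).

    m is the mean of the diagonal entries, p is the determinant.
    Assumes the eigenvalues are real (m^2 >= p).
    """
    m = (a + d) / 2
    p = a * d - b * c
    root = math.sqrt(m * m - p)
    return m + root, m - root


# Example matrix with m = (3 + 1) / 2 = 2 and p = 3*1 - 1*4 = -1.
lam1, lam2 = eigenvalues_2x2(3, 1, 4, 1)
print(lam1, lam2)  # roughly 4.236 and -0.236
```

If m^2 - p is negative, the eigenvalues are complex and `math.sqrt` raises a `ValueError`; `cmath.sqrt` would be one way to handle that case.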

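Using the formula m ± sqrt(m^2 - p), where m is the mean of the two diagonal entries and p is the determinant, with m = 2 and p = -1 we find that the eigenvalues are 2 ± sqrt(2^2 - (-1)) = 2 ± sqrt(5), which is about 4.23606798 and -0.236067977.

Finally, I managed to transfer more posts to the new blog.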

It is indeed a bit tedious, but I have only a few Korean ones left, and the rest should be just copy-pastes.

Tomorrow, I am going to Seoul for a career event, so on the bus ride there, I will probably read 1-2 research papers that I can talk about in tomorrow's post.

That is all for today!

See you tomorrow :)