[Day 56] I found the next step on my ladder - Stanford's CS224N (NLP with DL)

Hello :)

Today is Day 56!

A quick summary of today:
- Read and took notes on the 1st chapter of a book about NLP with transformers
- Found Stanford University's CS224N NLP with DL course

Today... was a bit weird, I suppose. My goal was to start something related to NLP and build on my knowledge from the previous days.

Before getting to that, let me tell you about the book (from the 1st point).

I came across a book about NLP with transformers, and its content looked awesome. It covers a variety of NLP topics: transformers, text classification, named entity recognition, text generation, summarization, question answering, and handling different kinds of data.

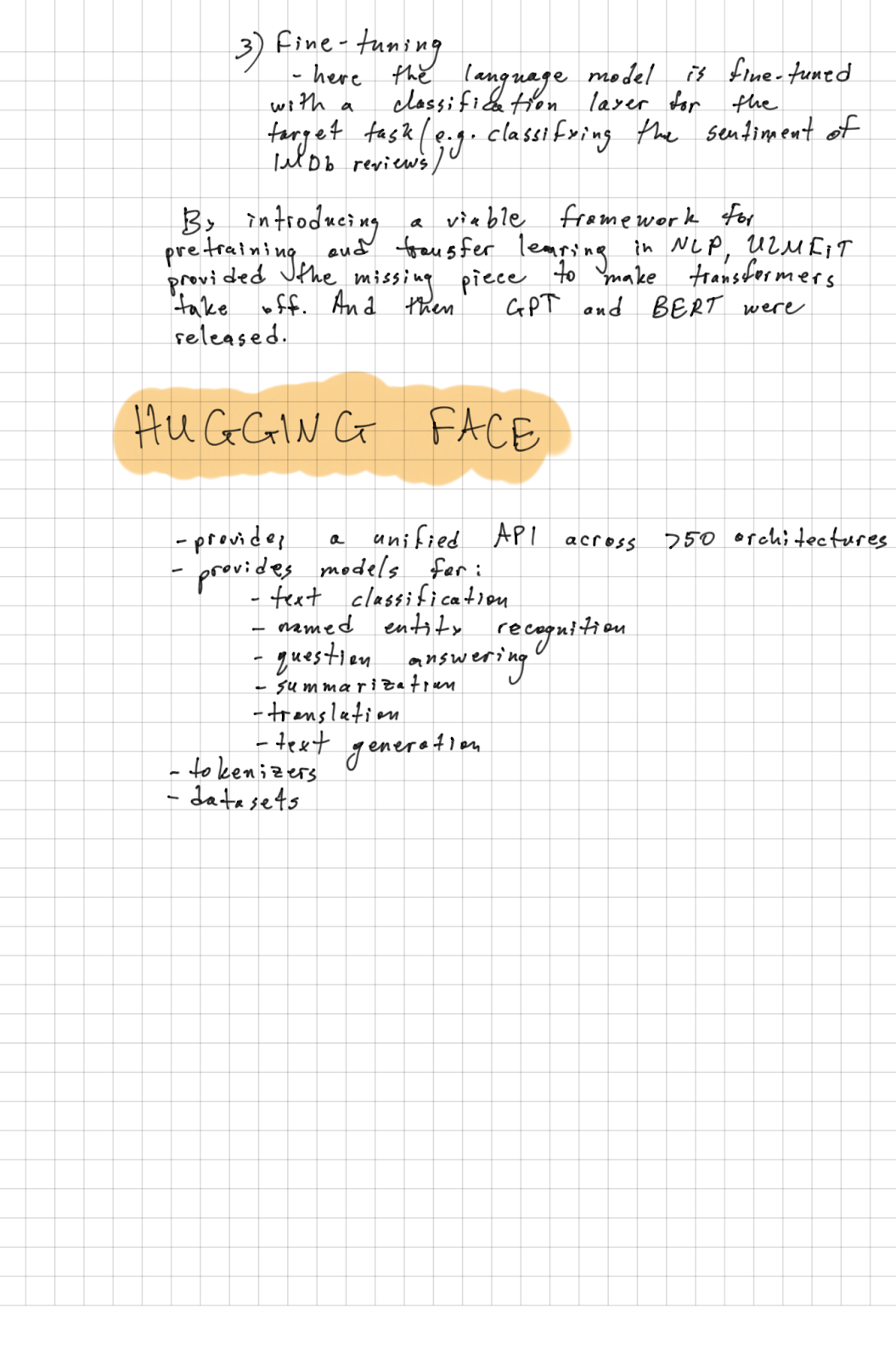

So I decided to give it a go and took notes on the 1st chapter, which was great (you can see them at the end of this post).

I learned how the transformer came to be, its 'story'.

Then the book starts using Hugging Face, a popular ecosystem that provides a vast number of models, datasets, tokenizers, and NLP tools. But that feels kind of like cheating to me.

I actually saw a meme online where the theory behind transformers is long and hard to grasp, but in practice you just write `import transformers` haha

But that is not my goal. I wanna actually learn everything, all the small bits. Of course, thanks to Professor Choi's KAIST course, Andrej Karpathy, and Andrew Ng, I believe I have a good grasp of the transformer architecture. But to have a good grasp of NLP as a field, I need to learn about the other problems, models, and architectures that were and are used in it. Just like when I learned computer vision and went through AlexNet, VGGNet, GoogLeNet, ResNet, YOLO, and Mask R-CNN, I want to see what is out there in NLP.

With that in mind, I started looking around for what to do next. The NLP Specialization by DeepLearning.AI? KAIST's AI605 DL for NLP? Stanford's CS224N NLP with DL? Find and read another book? Read research papers? (I actually searched for papers on tokenization, pic below, because Andrej Karpathy made it sound very interesting, so I considered this as an option.)

I started to 'audit' all the options, while also looking at what other people suggested on r/deeplearning and r/machinelearning. I watched maybe 1.5 hours of each of the 3 courses, and also found Michael Nielsen's book (Neural Networks and Deep Learning) and a 'mit-deep-learning-book'. In the end, what made the most sense to me was to commit to the Stanford course. Why?
- It is the most up to date (half of it is from 2021, half from 2023)
- Each lecture comes with an extensive list of extra readings
- There are slides and notes for each lecture
- The course has a big community for questions
- After the basics, they start using PyTorch
- ...and more

So, starting tomorrow, I will commit and act like a student enrolled in the class. It is not my first time taking a free Stanford course, so I am excited for the amazing teaching and excited to dive into the world of NLP!

That is all for today!

See you tomorrow :)