[Day 184] MLflow experiment tracking and trying out Metaflow

Hello :)

Today is Day 184!

A quick summary of today:

- ran various experiments and saved them to my MLflow server on GCP

- set up and tried out Metaflow for MLOps orchestration

I ran a few experiments, and the currently saved ones are:

I will select a model based solely on recall, that is, the share of all actual fraud cases that the model manages to detect. On that basis, the Balanced RF Classifier is the best so far (after finding optimal params), with a recall of 0.92. The most recent code and files are in my repo.
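To make the selection metric concrete, here is a minimal sketch of how recall is computed: true positives divided by all actual positives (TP / (TP + FN)). The labels below are made-up toy data, not results from my experiments, and the recall function is just an illustration (in practice sklearn.metrics.recall_score does the same job).

```python
from typing import Sequence


def recall(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """Share of actual positive (fraud) cases that were predicted as positive."""
    # True positives: fraud cases the model caught.
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    # False negatives: fraud cases the model missed.
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp + fn == 0:
        raise ValueError("y_true contains no positive samples, recall is undefined")
    return tp / (tp + fn)


# Toy example (hypothetical labels): 4 fraud cases, 3 of them detected.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0]
print(recall(y_true, y_pred))  # 0.75
```

Note that the false positive in the toy data does not affect recall at all, which is why selecting on recall alone can favour models that flag too many legitimate transactions.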

Today I also did some refactoring to tidy things up: I added a utils folder with helper functions that I use a lot across notebooks. Currently these are log_model_performance, which logs a model run to MLflow, and get_best_params, which loads a model's params back from MLflow.
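As a rough sketch of what two helpers like these could look like (the real versions are in my repo and may differ): the signatures, the "recall" default and the strip_param_prefix helper below are assumptions made for illustration. The MLflow calls used are mlflow.start_run, mlflow.log_params, mlflow.log_metrics and mlflow.search_runs, and the tracking URI is assumed to be set elsewhere.

```python
from typing import Any, Dict, Mapping


def log_model_performance(
    run_name: str, params: Mapping[str, Any], metrics: Mapping[str, float]
) -> None:
    """Log one model run (params and metrics) to the configured MLflow server."""
    import mlflow  # lazy import so the pure helper below works without MLflow

    with mlflow.start_run(run_name=run_name):
        mlflow.log_params(dict(params))
        mlflow.log_metrics(dict(metrics))


def strip_param_prefix(row: Mapping[str, Any]) -> Dict[str, Any]:
    """Keep only the 'params.*' entries of a search_runs row, without the prefix."""
    prefix = "params."
    return {k[len(prefix):]: v for k, v in row.items() if k.startswith(prefix)}


def get_best_params(experiment_name: str, metric: str = "recall") -> Dict[str, Any]:
    """Return the params of the run with the highest value of `metric`."""
    import mlflow

    # search_runs returns a pandas DataFrame with 'params.*' and 'metrics.*' columns.
    runs = mlflow.search_runs(
        experiment_names=[experiment_name],
        order_by=[f"metrics.{metric} DESC"],
        max_results=1,
    )
    if runs.empty:
        raise ValueError(f"no runs found for experiment {experiment_name!r}")
    return strip_param_prefix(runs.iloc[0].to_dict())
```

One thing to keep in mind: MLflow stores params as strings, so values loaded this way (for example "200" for n_estimators) need to be cast back before being passed to a model.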

Another thing: I asked on Reddit's MLOps page for a good, popular, beginner-friendly tool to try out for an MLOps project, because so far the only orchestrator I know is Mage. Metaflow got the most votes, and Flyte was also recommended. I decided to check out Metaflow first, as it seems to be widely used at top companies like Netflix and LinkedIn.

Metaflow has a UI which can be set up in the cloud (the preferred method) or locally (which I did, following this article).

I found a simple Metaflow workflow and modified it as follows:

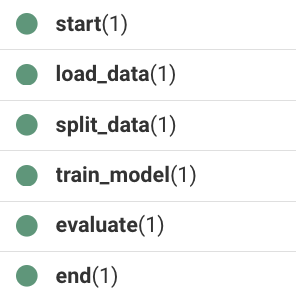

The code for it is in my repo as well, under testing_metaflow.py.

I am running the Metaflow UI locally, and below is what I get in the UI (after a successful run):

Going into one of the 'green' runs:

However, I am not seeing anything else. I am not sure if it is because I need to initialise it in my code, or maybe because I am running the UI locally. Nevertheless, it seems nice (I guess), but I need to figure out how to log more info and maybe artifacts.

Tomorrow I will try to explore Metaflow a bit more. Hopefully I can figure out how to see more things in the UI.

That is all for today!

See you tomorrow :)