[Day 174] Starting LLM zoomcamp + Learning about Data Warehouses + BigQuery

Hello :)

Today is Day 174!

A quick summary of today:

LLM camp module 1: basic RAG app

The LLM Zoomcamp material from Module 1 serves as an intro to RAG, and the instructor's simple graphic explains the basics pretty well. I have already had plenty of experience with RAG apps, but I still wanted to give this camp a go because I knew I would learn something new. And even today I did: mainly the OpenAI GPT API and the Elasticsearch library for a search database.

Here is a bit of the material from today. Everything is on this repo.

The RAG app we built is based on DataTalksClub's FAQ documents, where they have gathered tons and tons of questions so that students can reference and query the doc itself, rather than asking the same questions in Slack all the time.

First, load the documents (here is an example of one entry):

{'text': "The purpose of this document is to capture frequently asked technical questions\nThe exact day and hour of the course will be 15th Jan 2024 at 17h00. The course will start with the first “Office Hours'' live.1\nSubscribe to course public Google Calendar (it works from Desktop only).\nRegister before the course starts using this link.\nJoin the course Telegram channel with announcements.\nDon’t forget to register in DataTalks.Club's Slack and join the channel.",

'section': 'General course-related questions',

'question': 'Course - When will the course start?',

'course': 'data-engineering-zoomcamp'}
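The example above is one flattened entry. A minimal sketch of the loading step, assuming the raw FAQ file is a JSON list of `{"course": ..., "documents": [...]}` objects (that layout and the file path are assumptions): each document is copied with its course name attached, so the `course` field can be filtered on later.

```python
import json
from typing import Any


def flatten_courses(raw: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Turn [{'course': c, 'documents': [...]}, ...] into a flat list of docs.

    Each returned document is a copy of the original with a 'course' key
    added, so it can be indexed (and later filtered) on its own.
    """
    documents = []
    for course_block in raw:
        course_name = course_block["course"]
        for doc in course_block["documents"]:
            documents.append({**doc, "course": course_name})
    return documents


def load_documents(path: str) -> list[dict[str, Any]]:
    """Read the grouped FAQ file (hypothetical local path) and flatten it."""
    with open(path, encoding="utf-8") as f:
        return flatten_courses(json.load(f))
```

Copying each dict (`{**doc, ...}`) leaves the raw data untouched, which keeps the loader safe to re-run in a notebook.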

Next, create a search index

We can run Elasticsearch using Docker.

And to create an index we need the following format
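One possible index definition for these documents, assuming the elasticsearch-py 8.x client (the shard/replica counts and field types are my assumptions, chosen for a single local node): the three free-text fields are analysed as `text`, while `course` is a `keyword` so it can be matched exactly in a filter. The client is passed in, so any `Elasticsearch(...)` instance works.

```python
from typing import Any

# Assumed settings: one shard and no replicas suit a single-node dev cluster.
# Free-text fields are "text" (analysed); "course" is "keyword" so it can be
# used for exact-match filtering at query time.
INDEX_SETTINGS: dict[str, Any] = {
    "settings": {
        "number_of_shards": 1,
        "number_of_replicas": 0,
    },
    "mappings": {
        "properties": {
            "text": {"type": "text"},
            "section": {"type": "text"},
            "question": {"type": "text"},
            "course": {"type": "keyword"},
        }
    },
}


def create_faq_index(es_client: Any, index_name: str) -> None:
    """Create the index with an elasticsearch-py 8.x style client."""
    es_client.indices.create(
        index=index_name,
        settings=INDEX_SETTINGS["settings"],
        mappings=INDEX_SETTINGS["mappings"],
    )
```

For example, `create_faq_index(Elasticsearch("http://localhost:9200"), "course-questions")`, where the URL assumes the Docker container exposes the default port 9200 and the index name is arbitrary.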

Then add the documents to the index (there are only ~985 documents, so a simple for loop is fine here):

for doc in tqdm(documents):
    es_client.index(index=index_name, document=doc)

Next, query Elasticsearch

The query needs the following format.
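A sketch of the search step, again assuming the elasticsearch-py 8.x `search(index=..., query=..., size=...)` call: a `multi_match` over the FAQ fields, with `question^3` boosting hits in the question field, wrapped in a `bool` query whose `term` filter restricts results to one course. The boost factor and `size=5` are illustrative choices, not tuned values.

```python
from typing import Any


def build_search_query(question: str, course: str) -> dict[str, Any]:
    """Full-text search over the FAQ fields, restricted to one course.

    "question^3" weights matches in the question field three times higher
    than "text" and "section"; the term filter keeps only one course.
    """
    return {
        "bool": {
            "must": {
                "multi_match": {
                    "query": question,
                    "fields": ["question^3", "text", "section"],
                    "type": "best_fields",
                }
            },
            "filter": {"term": {"course": course}},
        }
    }


def elastic_search(es_client: Any, index_name: str, question: str,
                   course: str, size: int = 5) -> list[dict[str, Any]]:
    """Run the query and return only the stored documents (the _source part)."""
    response = es_client.search(
        index=index_name,
        query=build_search_query(question, course),
        size=size,
    )
    return [hit["_source"] for hit in response["hits"]["hits"]]
```

Putting the course restriction in `filter` rather than `must` means it narrows the results without affecting relevance scoring.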

Finally, send the context + query to GPT.

Query: 'I just discovered the course. Can I still join it?' (the answer should be yes)

Returned result: 'Based on the information provided, yes, you can still join the course even after the start date. Registration is not necessary, and you are still eligible to submit homework. Just be aware of deadlines for final projects and work at your own pace.'
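The generation step can be sketched as: join the retrieved entries into a context block, drop it into a prompt template, and send it through the OpenAI Python SDK's chat completions API. The template wording and the `gpt-4o` default are assumptions; the client is passed in (in practice `OpenAI()`, which reads `OPENAI_API_KEY` from the environment).

```python
from typing import Any

# Hypothetical prompt wording: tell the model to answer only from the FAQ context.
PROMPT_TEMPLATE = """You're a course teaching assistant. Answer the QUESTION based only on the CONTEXT from the FAQ database.
If the CONTEXT doesn't contain the answer, say that you don't know.

QUESTION: {question}

CONTEXT:
{context}""".strip()


def build_prompt(question: str, search_results: list[dict[str, Any]]) -> str:
    """Concatenate the retrieved FAQ entries into one context string."""
    context = "\n\n".join(
        f"section: {doc['section']}\nquestion: {doc['question']}\nanswer: {doc['text']}"
        for doc in search_results
    )
    return PROMPT_TEMPLATE.format(question=question, context=context)


def llm(client: Any, prompt: str, model: str = "gpt-4o") -> str:
    """Send the prompt via the OpenAI SDK (v1-style) chat completions API."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

Keeping the section and question next to each answer gives the model enough structure to pick the relevant entry out of the five retrieved ones.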

As for the homework, it was quite similar to the above. Here is my submission.

Also, all of this got me thinking about what to do when I finish this 365-day learning journey: I should create an app like this one that lets people chat with it and learn about my journey more easily.

LLM camp module 2: open-source LLMs

Here the instructor introduced us to free alternatives to OpenAI, like Hugging Face and Ollama.

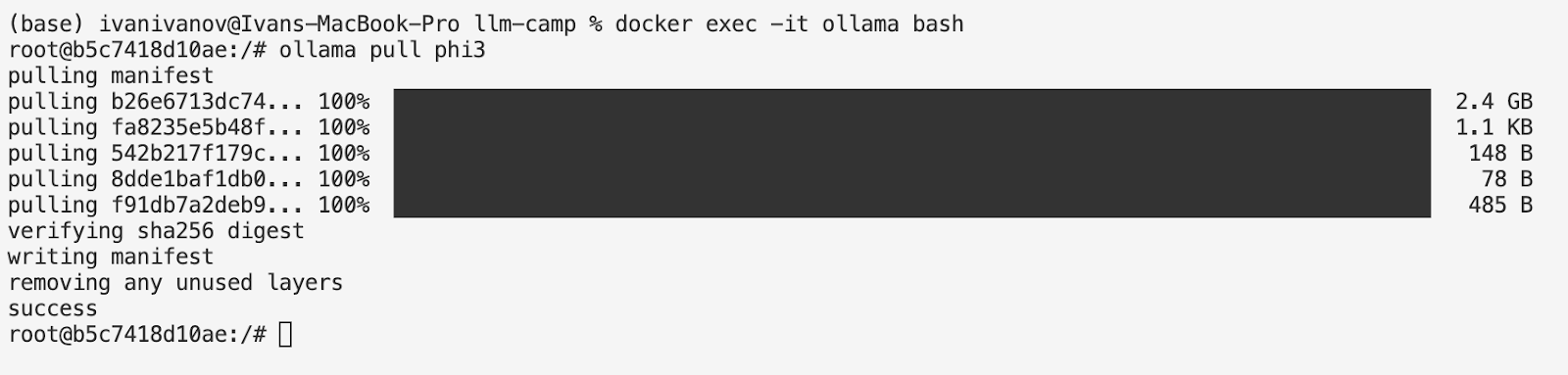

I learned how to set up Ollama locally using Docker.

First, a Docker Compose file was needed to run both Elasticsearch and Ollama.

Then we used phi3. Because the model needs to be downloaded locally, I went into the Docker container and downloaded it. After that, I modified the final notebook from Module 1 so that it uses Ollama, and the output is surprisingly good.

Query: 'I just discovered the course. Can I still join it?'

Result: ' Yes, you can still join the course even after the start date. We will keep all materials available for you to follow at your own pace after it finishes. You are also eligible to submit homeworks and prepare for future cohorts if needed.\n\nHowever, please note that there will be deadlines for submitting final projects. It is recommended not to leave everything for the last minute.'
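A minimal sketch of the model swap, assuming Ollama listens on its default port 11434 and phi3 has already been pulled. Instead of an SDK, it calls Ollama's native `/api/generate` REST endpoint with only the standard library; `"stream": False` asks for a single JSON reply whose `response` field holds the generated text.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default host/port


def build_generate_request(prompt: str, model: str = "phi3",
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ollama_generate(prompt: str, model: str = "phi3", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

The returned string plays the same role as the GPT answer in Module 1, so the search and prompt steps stay unchanged; the generous timeout is there because a small local model on CPU can take a while.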

Here is the full code.

The final part of Module 2 is building a basic Streamlit FAQ bot using Ollama and Elasticsearch.
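Whichever model is used, the bot boils down to the same three steps: search, build a prompt, call the LLM. A minimal sketch of that pipeline with each step injected as a function (the names and signatures here are hypothetical), which makes swapping GPT for a local Ollama model a one-argument change:

```python
from typing import Any, Callable

SearchFn = Callable[[str], list[dict[str, Any]]]
PromptFn = Callable[[str, list[dict[str, Any]]], str]
LlmFn = Callable[[str], str]


def rag(query: str, search: SearchFn, build_prompt: PromptFn, llm: LlmFn) -> str:
    """Retrieve FAQ entries, build a prompt from them, and ask the model.

    Each step is passed in as a function, so the same pipeline works with
    GPT or a local Ollama model and can be called from a UI callback.
    """
    search_results = search(query)
    prompt = build_prompt(query, search_results)
    return llm(prompt)
```

In a Streamlit script, this could sit behind `st.text_input(...)` and `st.button(...)`, with the answer shown via `st.write(...)`; the app is then started with `streamlit run app.py` (file name hypothetical).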

Very nice so far. I can't wait for the next topics, which will come over the next 2 weeks.

Data engineering camp Module 3: data warehouses

First, I learned about OLAP vs OLTP.
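To make the distinction concrete, here is a toy sketch using Python's built-in `sqlite3`. SQLite is not a data warehouse; it only stands in for both workloads, and the `orders` table is made up. The OLTP side is short transactions touching single rows; the OLAP side is one query scanning and aggregating many rows for reporting.

```python
import sqlite3


def make_orders_db() -> sqlite3.Connection:
    """In-memory toy database with a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL, status TEXT)"
    )
    return conn


def place_order(conn: sqlite3.Connection, region: str, amount: float) -> int:
    """OLTP-style: a short transaction that writes a single row."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT INTO orders (region, amount, status) VALUES (?, ?, 'new')",
            (region, amount),
        )
    return cur.lastrowid


def mark_shipped(conn: sqlite3.Connection, order_id: int) -> None:
    """OLTP-style: update one row looked up by its key."""
    with conn:
        conn.execute("UPDATE orders SET status = 'shipped' WHERE id = ?", (order_id,))


def revenue_by_region(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """OLAP-style: scan the whole table and aggregate it for reporting."""
    return conn.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    ).fetchall()
```

OLTP systems are optimised for many of the first kind of operation running concurrently, while OLAP systems like BigQuery are optimised for the second.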

What is a data warehouse?

It is an OLAP solution used for reporting and data analysis. A warehouse may have many data sources that all report to a staging area, which is then written to the data warehouse. A warehouse can consist of metadata, raw data and summary data. That data can then be transformed into various data marts, which is what users access.
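The flow described above (sources, staging area, warehouse, data marts) can be mimicked in a few lines of SQLite. This is only a toy: the tables, the made-up sales rows and the single cleaning rule are all hypothetical, and a real warehouse like BigQuery would run each step as separate load and transform jobs.

```python
import sqlite3

# Made-up rows from two hypothetical sources: (sale_date, region, amount).
SOURCES = {
    "web_shop": [("2024-01-15", "EU", 10.0), ("2024-01-15", "US", 7.0)],
    "pos_system": [("2024-01-15", "EU", 3.0), ("2024-01-16", "EU", None)],
}


def build_warehouse() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE staging_sales (source TEXT, sale_date TEXT, region TEXT, amount REAL);
        CREATE TABLE dw_sales (source TEXT, sale_date TEXT, region TEXT, amount REAL);
        """
    )
    # 1. Every source reports into the staging area as-is.
    for source, rows in SOURCES.items():
        conn.executemany(
            "INSERT INTO staging_sales VALUES (?, ?, ?, ?)",
            [(source, *row) for row in rows],
        )
    # 2. Staging is written to the warehouse (the only cleaning here: drop NULL amounts).
    conn.execute(
        "INSERT INTO dw_sales SELECT * FROM staging_sales WHERE amount IS NOT NULL"
    )
    # 3. A data mart: summary data for one group of users, e.g. an EU sales team.
    conn.execute(
        """
        CREATE VIEW mart_eu_daily_sales AS
        SELECT sale_date, SUM(amount) AS total
        FROM dw_sales WHERE region = 'EU'
        GROUP BY sale_date
        """
    )
    conn.commit()
    return conn
```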

What is BigQuery?

- a serverless data warehouse

- there are no servers to manage or database software to install

- it includes scalable and highly available software and infrastructure

- there are built-in features like ML, geospatial analysis, BI

- it maximises flexibility by separating the compute engine that analyses our data from our storage

Then a bit about partitioning in BigQuery

And clustering
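A toy model of why both help (pure Python, not how BigQuery is implemented internally): a table partitioned by date lets a query with a date filter read only the matching partition, and clustering sorts rows inside each partition so that blocks whose min/max key range cannot match are skipped. The block size, the `tag` clustering column and the data are arbitrary assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass

Row = tuple[str, str, int]  # (date, tag, value)


@dataclass
class Block:
    rows: list[Row]
    min_tag: str
    max_tag: str


def build_table(rows: list[Row], block_size: int = 2) -> dict[str, list[Block]]:
    """Partition rows by date, then cluster (sort) each partition by tag into blocks."""
    partitions: dict[str, list[Row]] = defaultdict(list)
    for row in rows:
        partitions[row[0]].append(row)
    table: dict[str, list[Block]] = {}
    for date, part_rows in partitions.items():
        part_rows.sort(key=lambda r: r[1])  # clustering = sort order within the partition
        chunks = [part_rows[i:i + block_size] for i in range(0, len(part_rows), block_size)]
        table[date] = [Block(chunk, chunk[0][1], chunk[-1][1]) for chunk in chunks]
    return table


def query(table: dict[str, list[Block]], date: str, tag: str) -> tuple[list[Row], int]:
    """Return matching rows and how many rows had to be read to find them."""
    rows_read = 0
    result: list[Row] = []
    for block in table.get(date, []):  # partition pruning: other dates are never touched
        if not (block.min_tag <= tag <= block.max_tag):
            continue  # block pruning, possible only because the partition is sorted
        rows_read += len(block.rows)
        result.extend(r for r in block.rows if r[1] == tag)
    return result, rows_read
```

With six rows over two dates and blocks of two, a lookup for one date and one tag reads a single block. Newly inserted, unsorted data would create overlapping min/max ranges, which is what BigQuery's background re-clustering addresses.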

Choose clustering over partitioning when:

- partitioning results in a small amount of data per partition (approx. < 1 GB)
- partitioning results in a large number of partitions, beyond the limits on partitioned tables
- partitioning results in mutation operations frequently modifying the majority of partitions in the table

Automatic re-clustering

- as data is added to a clustered table
  - the newly inserted data can be written to blocks that contain key ranges that overlap with the key ranges in previously written blocks
  - these overlapping keys weaken the sort property of the table
- to maintain the performance characteristics of a clustered table
  - BigQuery performs automatic re-clustering in the background to restore the sort property of the table
  - for partitioned tables, clustering is maintained for data within the scope of each partition

BigQuery best practices

- cost reduction
  - avoid SELECT *
  - price your queries before running them
  - use clustered or partitioned tables
  - use streaming inserts with caution
  - materialise query results in stages
- query performance
  - filter on partitioned columns
  - denormalise data
  - use nested or repeated columns
  - use external data sources appropriately
  - reduce data before using a JOIN
  - do not treat WITH clauses as prepared statements
  - avoid oversharding tables
  - avoid JavaScript user-defined functions
  - use approximate aggregation functions
  - put ORDER BY last in a query to maximise performance
  - optimize join patterns
    - as a best practice, place the table with the largest number of rows first, followed by the table with the fewest rows, and then place the remaining tables by decreasing size
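For "price your queries before running them": BigQuery supports dry runs, where a query submitted with `QueryJobConfig(dry_run=True)` is validated but not executed, and the job reports `total_bytes_processed`. A sketch, assuming the `google-cloud-bigquery` client library; the per-TiB price is an assumed parameter, not a quoted price, so check current on-demand pricing before relying on the number.

```python
from typing import Any

TIB = 1024 ** 4  # bytes in one tebibyte


def estimate_on_demand_cost(bytes_processed: int, usd_per_tib: float = 6.25) -> float:
    """Approximate on-demand query cost; usd_per_tib is an assumption."""
    return bytes_processed / TIB * usd_per_tib


def dry_run_bytes(client: Any, sql: str) -> int:
    """Ask BigQuery how many bytes `sql` would scan, without running it."""
    # Imported here so the cost helper above works without the library installed.
    from google.cloud import bigquery

    job_config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = client.query(sql, job_config=job_config)
    return job.total_bytes_processed
```

With `client = bigquery.Client()`, comparing `estimate_on_demand_cost(dry_run_bytes(client, sql))` for a `SELECT *` query against one that names only the needed columns (or filters on the partition column) shows the savings from the cost-reduction list in numbers. Disabling the query cache keeps the estimate from reporting zero bytes for a cached result.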

That is all for today!

See you tomorrow :)