[Day 162] Deploying a mage.ai instance to AWS

Hello :)

Today is Day 162!

A quick summary of today:

- yes! I finally completed the 03-orchestration part from the MLOps zoomcamp

- downloaded the NYC taxi dataset and started learning how to turn it into a graph dataset

Firstly, regarding mage.ai

I cannot express how glad I am to have *completely* finished module 3. Here is my repo.

There were so many errors and deviations from what is shown in the videos (which were recorded only 2 weeks ago...) that I am speechless. Nevertheless, I finally did it all.

Today I figured out some of the errors related to AWS authentication, and managed to deploy an instance to AWS using GitHub Actions. I hope I can use it, or, probably better, use what I learned today as a template for an actual production-ready deployment, because right now some things like database info and passwords are on GitHub.

I played around with deployments ~. Next will be module 4 - deployment.

Secondly, about what I did in the lab

Firstly, today I read a nice paper: PGCN: Progressive Graph Convolutional Networks for Spatial–Temporal Traffic Forecasting

Introduction

This study introduces the Progressive Graph Convolutional Networks (PGCN) model for spatial-temporal traffic forecasting. Key features include:

- Trend Similarities: Uses parameterized cosine similarity to define trend similarities between traffic nodes.

- Progressive Graph Convolution Module: Constructs dynamic graphs based on trend similarities, adapting to online input signals during both training and testing.

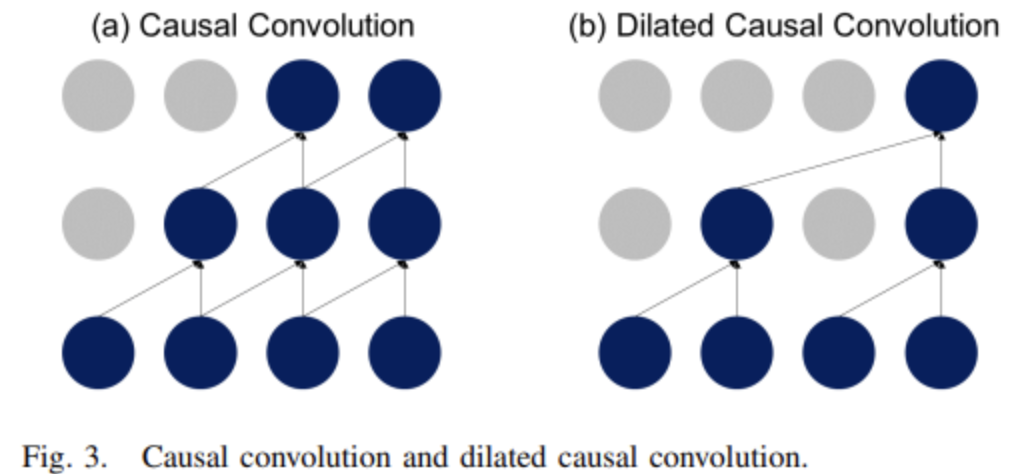

- Spatial and Temporal Feature Extraction: Captures spatial correlations through progressive graph convolution and extracts temporal features using dilated causal convolution with a gated activation unit.

The model demonstrates state-of-the-art performance on seven real-world datasets, consistently performing within 1.5% of the best models, and remains competitive even without structural network information.

Progressive graph convolutional networks

This is the core idea of PGCN

Progressive graph construction

Static features like POI category or speed limit are not always efficient for modelling node signal correlations, which change over time (e.g., morning vs. afternoon peak hours). Instead, this study proposes learning rich semantics from online traffic data by measuring node similarities using parameterised cosine similarity. Unlike previous methods, which only updated graphs during training, this study introduces a progressive graph that adapts to traffic changes based on trend similarities during both training and testing phases.
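To make the idea concrete for myself, here is a minimal sketch of building an adjacency matrix from trend similarities. It is not the paper's exact formulation: taking first differences as the "trend", using one weight per time step as the learnable parameter, and applying ReLU followed by a row-wise softmax are all my assumptions, and the function names are made up for illustration.

```python
import math

def trend(signal):
    """First differences of a node signal (assumed stand-in for its trend)."""
    return [b - a for a, b in zip(signal, signal[1:])]

def parameterised_cosine(x_i, x_j, weights):
    """Cosine similarity after re-weighting both vectors element-wise.

    `weights` plays the role of the learnable parameter (assumption:
    one weight per time step, i.e. a diagonal matrix).
    """
    a = [w * v for w, v in zip(weights, x_i)]
    b = [w * v for w, v in zip(weights, x_j)]
    dot = sum(p * q for p, q in zip(a, b))
    norm = math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b))
    return dot / norm if norm > 0 else 0.0

def progressive_adjacency(window, weights):
    """Row-normalised adjacency built from the latest window of node signals."""
    trends = [trend(s) for s in window]
    adjacency = []
    for t_i in trends:
        # ReLU: pairs with opposite trends get a score of zero
        scores = [max(0.0, parameterised_cosine(t_i, t_j, weights)) for t_j in trends]
        # Softmax over each row so the weights sum to one
        exps = [math.exp(s) for s in scores]
        total = sum(exps)
        adjacency.append([e / total for e in exps])
    return adjacency

# Toy window: three nodes, four time steps each
window = [
    [1.0, 2.0, 3.0, 4.0],  # node 0: rising
    [2.0, 4.0, 6.0, 8.0],  # node 1: rising, same trend as node 0
    [4.0, 3.0, 2.0, 1.0],  # node 2: falling
]
weights = [1.0, 1.0, 1.0]  # one weight per differenced time step
adjacency = progressive_adjacency(window, weights)
```

Because the adjacency is recomputed from whatever window is passed in, the same function can be called on new input at test time, which is the "progressive" part as I understand it.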

Dilated causal convolution

Causal convolutions extract temporal features by stacking 1-D convolution layers while ensuring that future information is not considered for prediction. Causal convolution requires many layers to increase the size of the receptive field. To overcome this, dilated causal convolution is used.
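A small pure-Python sketch helped me see why dilation matters. The function names and the toy input are mine, not from the paper; the receptive field formula is the standard one for stacked 1-D convolutions with stride 1.

```python
def dilated_causal_conv1d(x, kernel, dilation=1):
    """y[t] = sum_k kernel[k] * x[t - k * dilation].

    Only current and past inputs are used; positions before t = 0
    are treated as zeros (left padding).
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out

def receptive_field(kernel_size, dilations):
    """Number of input steps seen by one output of the stacked layers."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
y = dilated_causal_conv1d(x, kernel=[1.0, 1.0], dilation=2)  # x[t] + x[t-2]

plain = receptive_field(2, [1, 1, 1, 1])    # four ordinary causal layers
dilated = receptive_field(2, [1, 2, 4, 8])  # four layers, doubling dilation
```

With the same four layers and kernel size 2, the plain stack sees 5 time steps while the dilated stack sees 16, so far fewer layers are needed to cover a long history.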

Overall architecture of PGCN

Each spatial-temporal layer consists of dilated causal convolutions, a gated activation unit, a progressive graph convolution module, and a residual connection. While multiple spatial-temporal layers are stacked to increase the size of the receptive field in temporal feature extraction, the parameter for the progressive graph constructor is shared across the layers. Finally, the output layer consists of a skip connection from each layer to prevent information loss from the initial layers and two fully connected layers with ReLU activation.
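For the gated activation unit and the residual connection, a rough sketch follows. I am assuming the usual WaveNet-style gate (tanh of one convolution output multiplied by the sigmoid of another); the post's source does not spell out the formula, and the input values below are invented stand-ins for the two convolution outputs.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gated_activation(filter_out, gate_out):
    """tanh(filter) * sigmoid(gate), element-wise (assumed WaveNet-style gate)."""
    return [math.tanh(f) * sigmoid(g) for f, g in zip(filter_out, gate_out)]

def add_residual(layer_input, layer_output):
    """Residual connection: add the layer's input back to its output."""
    return [x + y for x, y in zip(layer_input, layer_output)]

# Stand-ins for the outputs of two separate dilated causal convolutions
layer_input = [0.5, -1.0, 2.0]
filter_out = [0.0, 1.0, -2.0]
gate_out = [0.0, 5.0, -5.0]

gated = gated_activation(filter_out, gate_out)
hidden = add_residual(layer_input, gated)
```

The sigmoid branch acts like a soft switch between 0 and 1 that decides how much of the tanh branch passes through, and the residual sum keeps the original input available to later layers.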

Experiments

Datasets

PeMS-Bay, METR-LA, Urban-core, Seattle-Loop, PEMS04, PEMS08, PEMS07

Baselines

HA, FC-LSTM, DCRNN, GraphWaveNet, TGC-GRU, DMSTGCN, STSGCN, AGCRN, Z-GCNETs

Results

(only results on 3 of the datasets shown in picture)

Then, I decided to start recreating some of the taxi prediction papers. But to begin with, I needed to learn how to transform the NYC taxi dataset (one of the most widely used) into a temporal graph dataset.

There are of course ways to do it, including with networkx and pytorch_geometric, but today I could not find a resource that explains it well. I did find this YouTube video, which covers not only transforming a table into a graph dataset but also into a temporal dataset. Tomorrow I need to look more into this.
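As a starting point, here is a minimal sketch of one way the table could become a sequence of graph snapshots, using only the standard library. The design is my assumption, not something from the video or a paper: taxi zones are nodes, each hour is one snapshot, and an edge weight is the number of trips from the pickup zone to the dropoff zone in that hour. The column names follow the yellow taxi trip records; the rows themselves are toy values I made up.

```python
from collections import Counter, defaultdict
from datetime import datetime

# Toy rows standing in for the real trip table
trips = [
    {"tpep_pickup_datetime": "2024-01-01 08:05:00", "PULocationID": 1, "DOLocationID": 2},
    {"tpep_pickup_datetime": "2024-01-01 08:40:00", "PULocationID": 1, "DOLocationID": 2},
    {"tpep_pickup_datetime": "2024-01-01 08:55:00", "PULocationID": 2, "DOLocationID": 3},
    {"tpep_pickup_datetime": "2024-01-01 09:10:00", "PULocationID": 3, "DOLocationID": 1},
]

def build_hourly_snapshots(rows):
    """Group trips by pickup hour; each snapshot maps (src, dst) -> trip count."""
    snapshots = defaultdict(Counter)
    for row in rows:
        ts = datetime.strptime(row["tpep_pickup_datetime"], "%Y-%m-%d %H:%M:%S")
        hour = ts.replace(minute=0, second=0)
        snapshots[hour][(row["PULocationID"], row["DOLocationID"])] += 1
    # Sort by time so the snapshots form a sequence
    return {hour: dict(edges) for hour, edges in sorted(snapshots.items())}

snapshots = build_hourly_snapshots(trips)
hours = list(snapshots)
first_hour_edges = snapshots[hours[0]]
```

Each snapshot's edge dictionary could then be turned into an edge index and edge weights for a graph library, but I have not tried that step yet.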

That is all for today!

See you tomorrow :)