[Day 160] Simple data engineering pipeline with Prefect, and... MLOps with mage.ai (tons of problems)

Hello :)

Today is Day 160!

A quick summary of today:

- simple data engineering pipeline with prefect

- tons of trouble learning about orchestration with mage.ai

After yesterday's journey with prefect, the YouTube algorithm recommended me another prefect tutorial, this time on creating data pipelines. So I decided to give it a go.

What is data engineering?

- data scientists can do data engineering, but only in specific cases where the two jobs cannot be, or do not need to be, separate

- data engineers build databases, they build lots of data pipelines and manage infrastructure (also care about cost, security)

- ETL(ELT)/batch pipelines that move data from A to B

  - databases, APIs, files

- streaming pipelines - as data comes in, we consume that data and send it wherever it needs to go

  - message queues, polled data
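To make the batch vs streaming difference from the list above a bit more concrete, here is a minimal sketch in plain Python. Everything in it is made up for illustration: the `transform` function, the sample records, and the use of a `None` sentinel to mark the end of the stream. A real streaming pipeline would read from an actual message queue rather than an in-memory `queue.Queue`.

```python
from queue import Queue


def transform(record):
    # Hypothetical transformation: tidy up the name field
    return {**record, "name": record["name"].strip().title()}


def run_batch(source_rows):
    """Batch/ETL style: take everything from A, transform it, hand it to B in one go."""
    return [transform(r) for r in source_rows]


def run_streaming(queue, sink):
    """Streaming style: consume records one at a time as they arrive."""
    while True:
        record = queue.get()
        if record is None:  # assumed sentinel meaning "no more data"
            break
        sink.append(transform(record))


# Made-up sample records
rows = [{"name": " rex "}, {"name": "MILO"}]

# Batch: all rows are available up front
batch_result = run_batch(rows)

# Streaming: rows show up on a queue and are handled as they come in
q = Queue()
for r in rows:
    q.put(r)
q.put(None)
stream_result = []
run_streaming(q, stream_result)
```

Both paths end up with the same cleaned records; the difference is only in when each record gets processed.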

After some basic setup, we run 'pipeline' in the terminal, which runs the main.py file:

In prefect we get a flow run and logged outputs from the petstore url.

Now for a flow with a few more tasks:

1st task: retrieve from API

2nd task: clean data

3rd task: insert to postgres db

The final flow: success! We can also see the data loaded into postgres.

Beege (the teacher) also showed how to do simple task tests and how to avoid a common prefect error that occurs when executing prefect task functions outside a prefect flow.
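The three tasks above (retrieve, clean, insert) can be sketched roughly like this. This is not the code from the tutorial: it is a plain-Python stand-in so it runs anywhere, with a hard-coded sample payload instead of the real API call and an in-memory `sqlite3` database instead of postgres. The record fields and the `pets` table are assumptions for illustration. In prefect, each step function would typically be decorated with `@task` and `pipeline` with `@flow`.

```python
import sqlite3


def retrieve_from_api():
    # 1st task: stand-in for the HTTP request to the API.
    # The payload below is made-up sample data, not real API output.
    return [
        {"id": 1, "name": " doggie ", "status": "available"},
        {"id": 2, "name": None, "status": "sold"},
        {"id": 3, "name": "Kitty", "status": "available"},
    ]


def clean_data(pets):
    # 2nd task: drop records without a name and trim whitespace
    return [
        {"id": p["id"], "name": p["name"].strip(), "status": p["status"]}
        for p in pets
        if p.get("name")
    ]


def insert_to_db(conn, pets):
    # 3rd task: load the cleaned rows (sqlite3 here, postgres in the tutorial)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS pets "
        "(id INTEGER PRIMARY KEY, name TEXT, status TEXT)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO pets (id, name, status) "
        "VALUES (:id, :name, :status)",
        pets,
    )
    conn.commit()


def pipeline(conn):
    # The flow: run the three tasks in order and report how many rows were loaded
    raw = retrieve_from_api()
    cleaned = clean_data(raw)
    insert_to_db(conn, cleaned)
    return len(cleaned)


conn = sqlite3.connect(":memory:")
loaded = pipeline(conn)
rows = conn.execute("SELECT id, name, status FROM pets ORDER BY id").fetchall()
```

Keeping each step as its own small function is also what makes the simple task tests possible: `clean_data` can be checked with a couple of hand-written records without touching the API or the database.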

The content was not much, but the constant errors... made me invest upwards of 12 hours of bug fixing for a total of 30 minutes of video content.

Orchestration with Mage.ai from MLOps zoomcamp module 3

The content of the intro to orchestration is:

I have to do 3.5 tomorrow (hopefully).

I just want to say a huge thank you to the QnA bot on the MLOps zoomcamp slack channel, which at least pointed me in the right direction to solve my issues. Just quickly: I am a bit sad that there are so many issues (in almost every video) because they will drive people away from this amazing course. Below I will just share my successes, and if you are really curious about my problems, they are all on the datatalks slack channel haha.

I started from the beginning

3.1 Data preparation

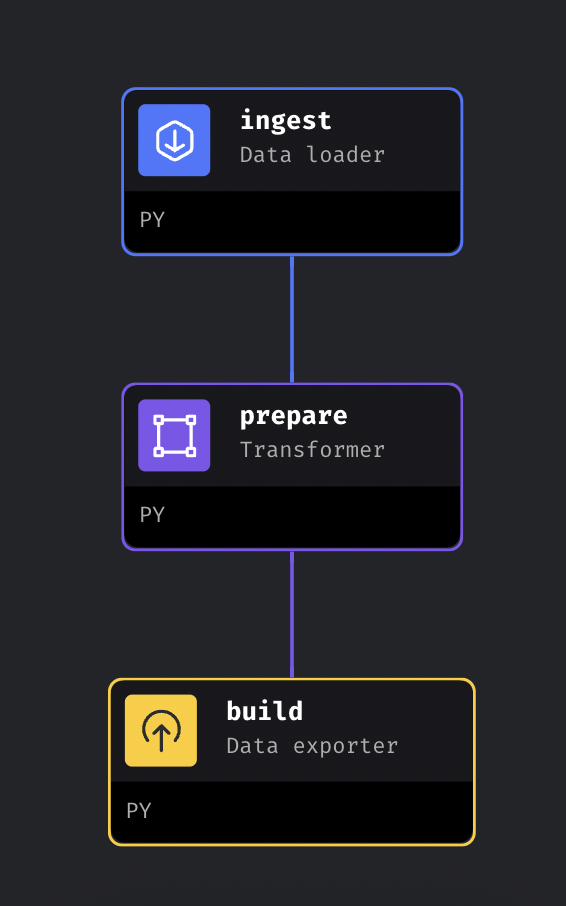

I created the above pipeline that reads NY taxi data, does a bit of preprocessing, and then outputs X, X_train, X_val, y, y_train, y_val, dv. By the way, each block is a piece of code.

3.2 Training

First, create a pipeline to train a linear regression and lasso model. It takes data from the above 3.1 pipeline, loads models, does hparam search, and finally trains the two models.

Secondly, I had to create a pipeline for an xgboost model.

The last (pink) bit is connected to creating visualisations.

3.3 Observability

We can create any kind of bar/flow/line/custom charts, and the above 3 are some SHAP values from the xgboost model.

3.4 Triggering

Here I created an automatic trigger to train the xgboost model when new data is detected.

Also, I created this predict pipeline.

There is a nice way to set up a basic interface for inference.

We can also set up an API to do inference through that as well.

That was all for today. I have very strong feelings towards Mage.ai, but for now I will roll with it because of the nice course. Otherwise I will try to use prefect for some side project (have to think about that a bit).

That is all for today!

See you tomorrow :)