[Day 159] Learning and using Prefect for MLOps orchestration

Hello :)

Today is Day 159!

A quick summary of today: I did Module 3 of the MLOps Zoomcamp (2023 cohort), which uses Prefect.

Before everything else, I finally got the notebook expert title on Kaggle! :party:

As for Prefect ~ the GitHub repo from today's study is here.

A common MLOps workflow

In this workflow, we take data from a database into pandas, save it (checkpoint), reload it with parquet, then maybe use sklearn for feature engineering and/or running models. MLflow is there to track experiments, and finally the model is served. However, we might have failure points at any of the arrows (connections) between steps.

Prefect comes in when we give an engineer the following tasks:

- could you just set up this pipeline to train this model?

- could you set up logging?

- could you do it every day?

- could you make it retry if it fails?

- could you send me a message when it succeeds?

- could you visualise the dependencies?

- could you add caching?

- could you add collaborators who don't code, so they can run it ad hoc?

The first few could be done quite easily, but the mid/later ones become hard without some kind of extra help like Prefect.
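To make "retry if it fails" concrete, here is a minimal plain-Python sketch of what a retry wrapper has to do by hand. The `retry` decorator and `flaky_download` are hypothetical names made up for illustration, not Prefect code. In Prefect the same behaviour is requested declaratively, with `@task(retries=..., retry_delay_seconds=...)`.

```python
import functools
import time


def retry(retries=3, delay_seconds=0.0):
    """Re-run the wrapped function up to `retries` extra times if it raises."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(retries + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception:
                    if attempt == retries:
                        raise  # out of attempts: surface the last error
                    time.sleep(delay_seconds)
        return wrapper
    return decorator


calls = {"count": 0}


@retry(retries=2)
def flaky_download():
    """Hypothetical step: fails on its first call, succeeds afterwards."""
    calls["count"] += 1
    if calls["count"] < 2:
        raise ConnectionError("temporary network problem")
    return "data"


result = flaky_download()
print(result, "after", calls["count"], "calls")
```

Even this small piece needs care (attempt counting, re-raising, delays), and scheduling, notifications and a dependency graph would all need the same treatment, which is where an orchestrator starts to pay off.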

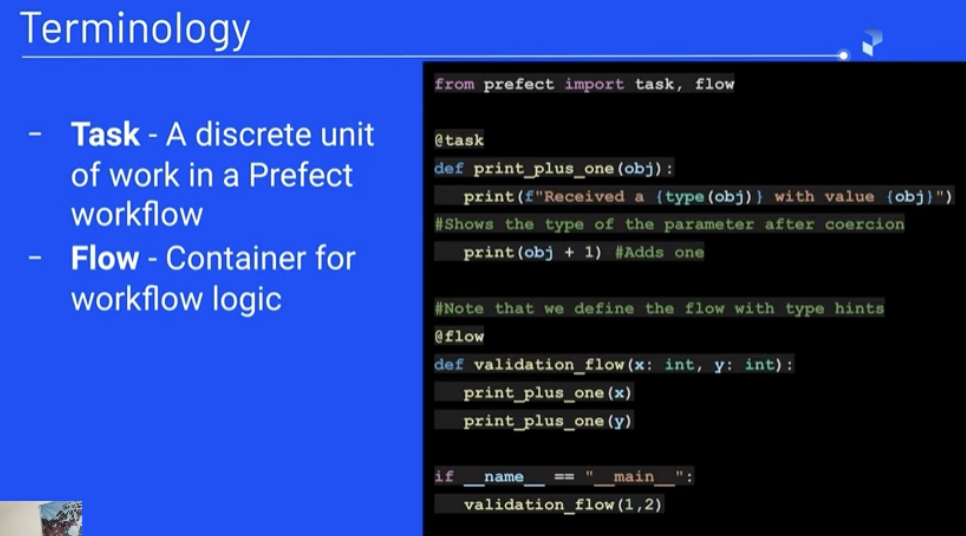

Some terminology:

After some setup ~ and prefect server start ~

And at the bottom in the logs: 'A cat’s nose pad is ridged with a unique pattern, just like the fingerprint of a human.'

Next, I executed a flow that utilises subflows.

And in the UI, 3 flow runs are created in total. If we visit the parent one, called animal_facts, we can see its two subflows:

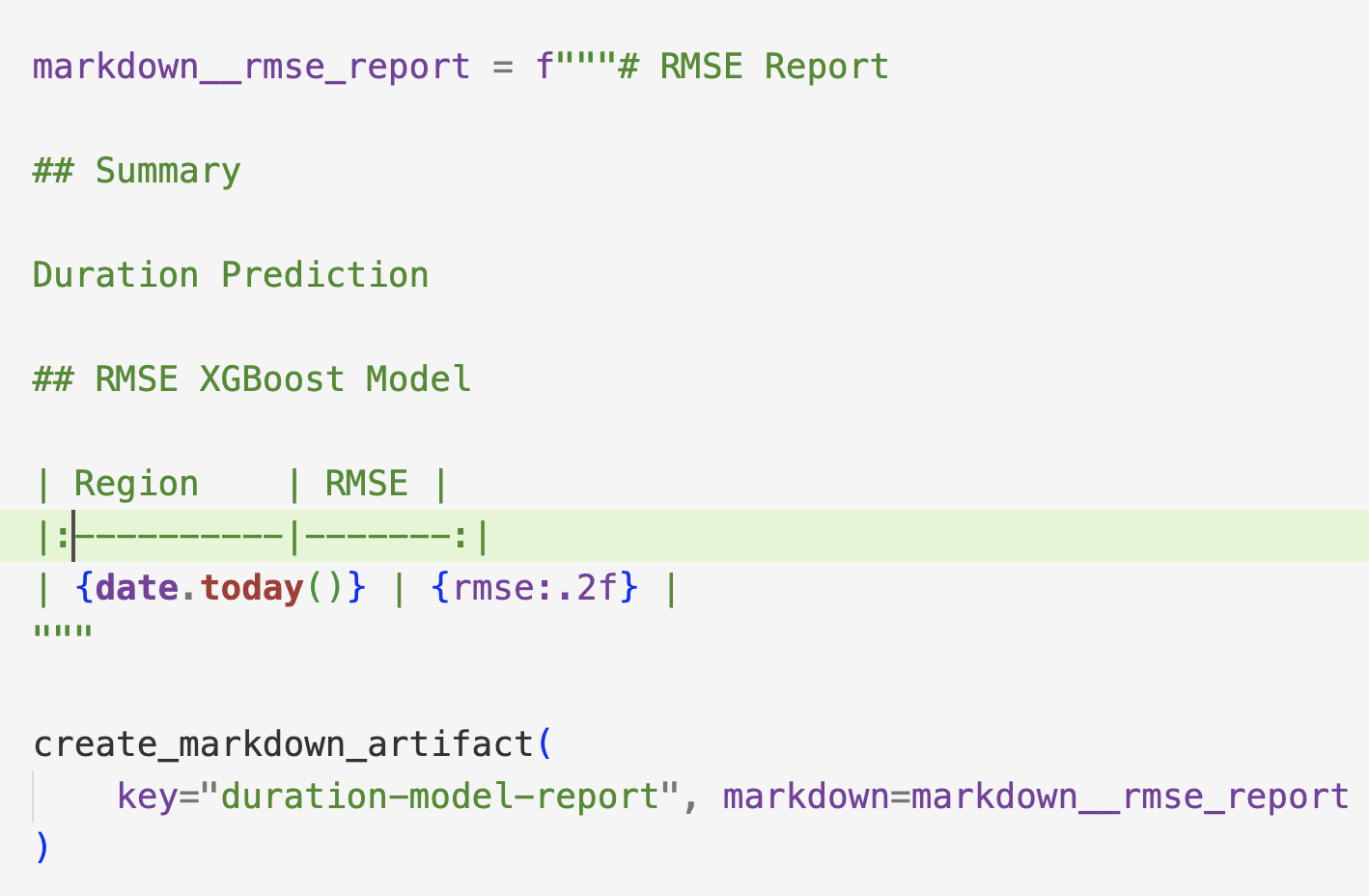
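As a rough sketch of how a parent flow with two subflows can look (not the exact course code): a flow that calls other flows creates one run for the parent and one for each subflow. Only the parent name `animal_facts` comes from today's run. The subflow names and the hard-coded facts are assumptions for illustration, and the try/except falls back to a no-op decorator if Prefect isn't installed.

```python
try:
    from prefect import flow
except ImportError:
    # Prefect not installed: no-op stand-in so the sketch still runs
    def flow(fn=None, **_kwargs):
        return fn if callable(fn) else (lambda f: f)


@flow
def fetch_cat_fact():
    # Hypothetical subflow; hard-coded instead of calling an API
    return "A cat's nose pad is ridged with a unique pattern."


@flow
def fetch_dog_fact():
    # Hypothetical subflow; placeholder text, not a real fact
    return "placeholder dog fact"


@flow(log_prints=True)  # log_prints sends print() output to the flow run logs
def animal_facts():
    # Calling a flow from inside a flow makes it a subflow of this run
    cat = fetch_cat_fact()
    dog = fetch_dog_fact()
    print(cat)
    print(dog)
    return [cat, dog]


facts = animal_facts()
```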

Next ~ creating a workflow

In Module 1, I created a Jupyter notebook for taxi prediction, but here, to make it a bit more 'production-ready', the code is a bit more structured, as follows:

Create task - read data

And then, the main flow that executes all the tasks:

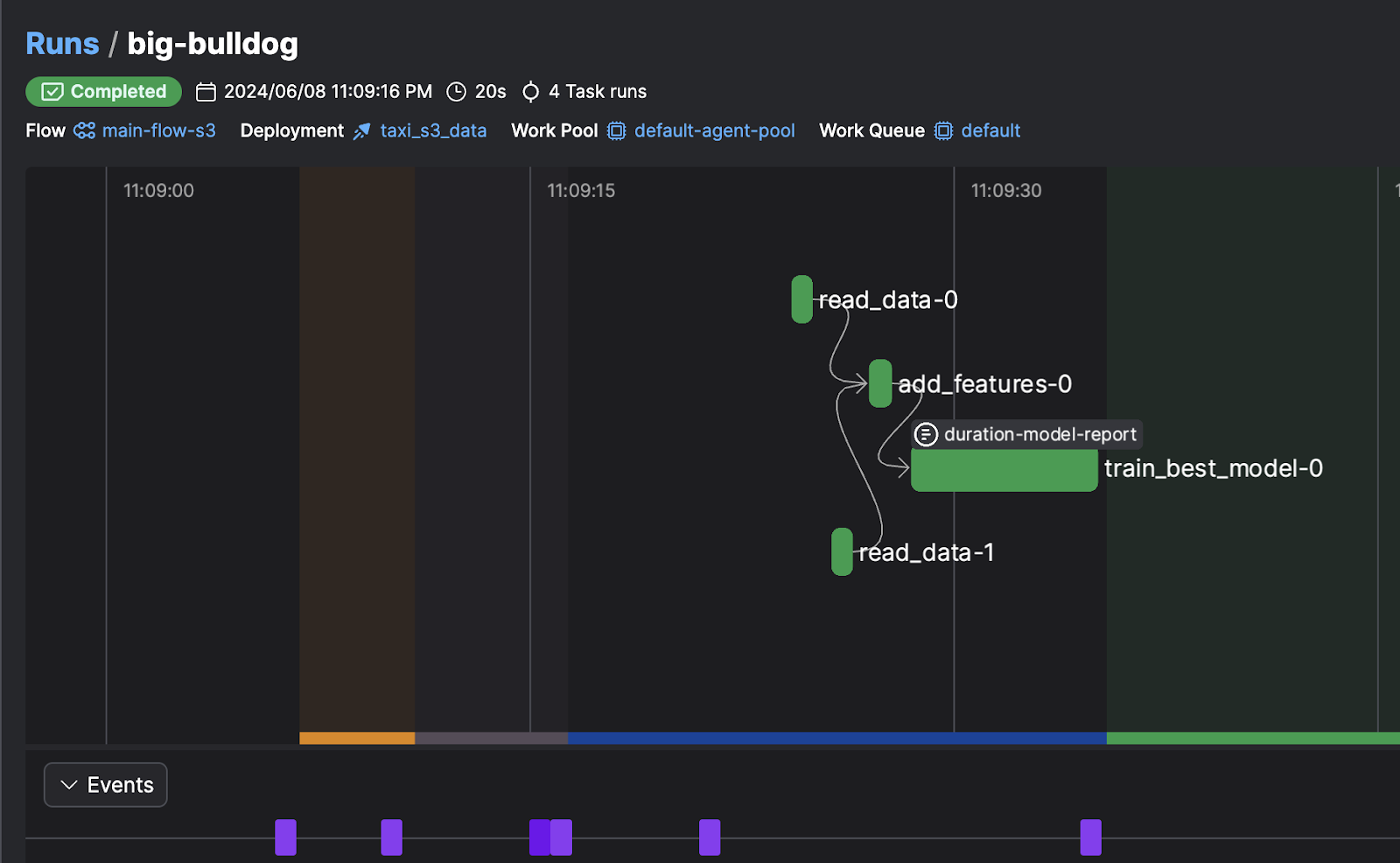
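As a rough sketch of this task-plus-flow structure (not the exact course code): each step becomes a `@task`, and a `@flow` function calls them in order. The column names, the tiny in-memory dataset standing in for the taxi file, the 1 to 60 minute filter, and the `summarise` task are all assumptions for illustration. The try/except falls back to no-op decorators if Prefect isn't installed.

```python
import pandas as pd

try:
    from prefect import flow, task
except ImportError:
    # Prefect not installed: no-op stand-ins so the sketch still runs
    def _passthrough(fn=None, **_kwargs):
        return fn if callable(fn) else (lambda f: f)
    flow = task = _passthrough


@task(retries=3, retry_delay_seconds=2)
def read_data(raw: pd.DataFrame) -> pd.DataFrame:
    """Add trip duration in minutes and keep trips of 1 to 60 minutes."""
    df = raw.copy()
    delta = df["dropoff_datetime"] - df["pickup_datetime"]
    df["duration"] = delta.dt.total_seconds() / 60
    return df[(df["duration"] >= 1) & (df["duration"] <= 60)]


@task
def summarise(df: pd.DataFrame) -> dict:
    """Hypothetical stand-in for the feature engineering and training steps."""
    return {"rows": len(df), "mean_duration": float(df["duration"].mean())}


@flow
def main_flow():
    # Assumed toy data; the real flow would load the taxi data from disk
    raw = pd.DataFrame({
        "pickup_datetime": pd.to_datetime(
            ["2023-01-01 10:00", "2023-01-01 11:00", "2023-01-01 12:00"]),
        "dropoff_datetime": pd.to_datetime(
            ["2023-01-01 10:10", "2023-01-01 11:30", "2023-01-01 15:00"]),
    })
    df = read_data(raw)
    return summarise(df)


summary = main_flow()
print(summary)
```

Keeping each step as its own task is what lets Prefect retry, log and visualise the steps individually.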

After we execute this flow, in the Prefect UI we can see a new flow run with this visualisation of the tasks:

(I am writing today's post as I study ~)

Thankfully, YouTube came to the rescue!

The commands are a bit different, but I got a deployment working.

(first one failed because of wrong data file name)

This was just a local deployment, but there are other options to deploy to GCP, AWS, etc.

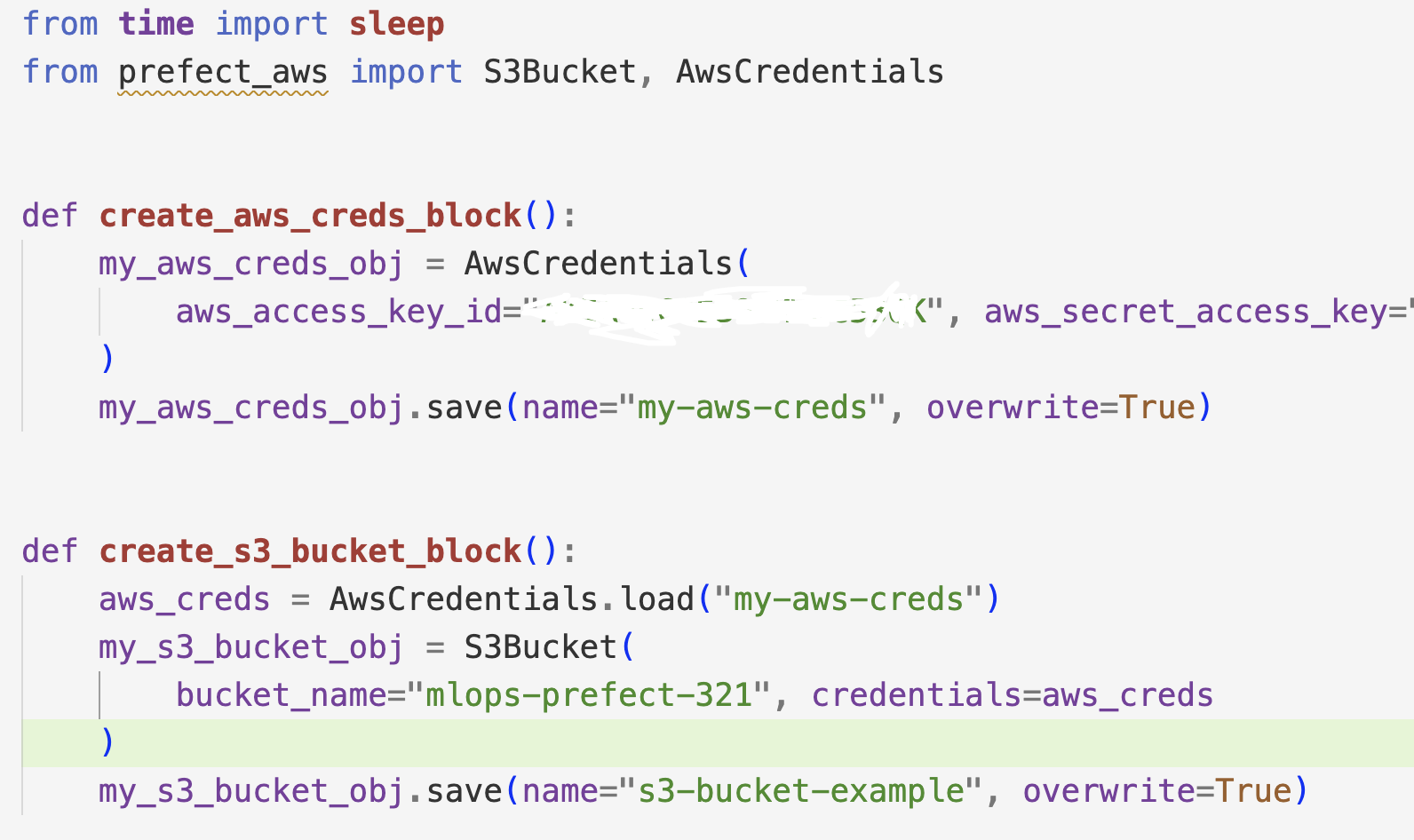

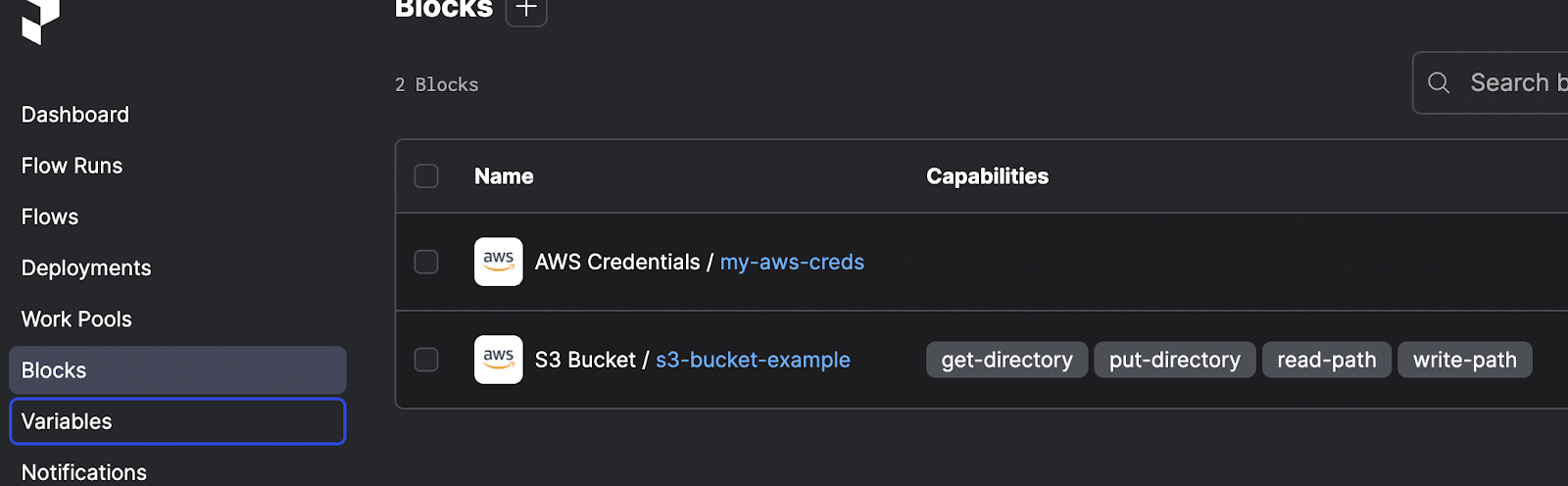

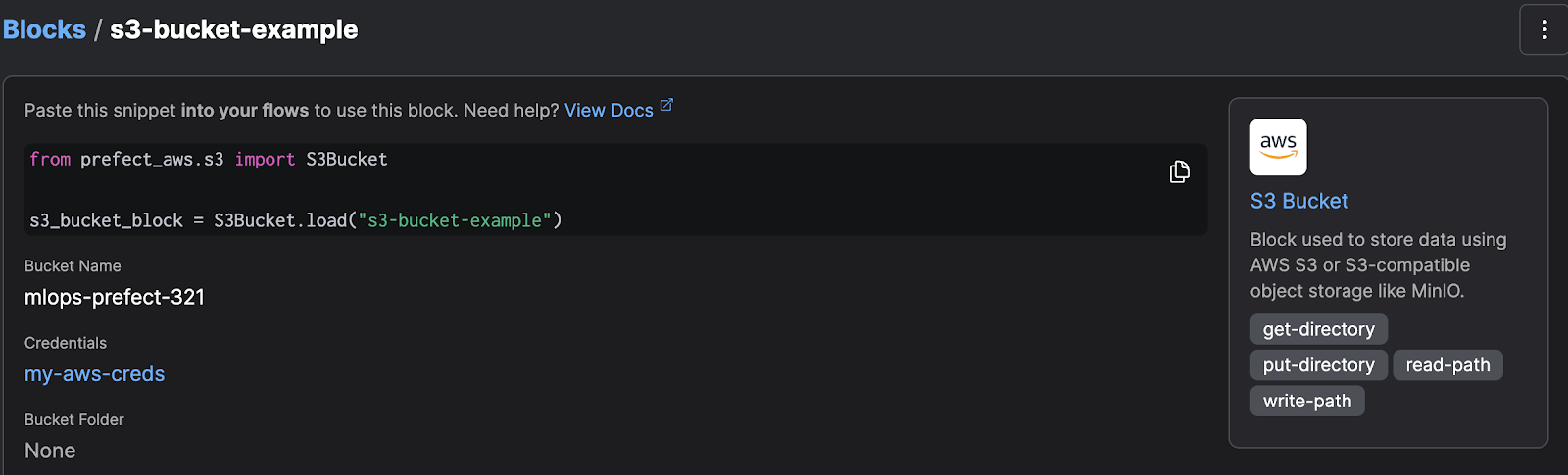

In the next bit, I set up AWS ~ S3 storage with the taxi data, and a user with S3 permissions in IAM.

These blocks can be modified through the UI (we can also add new blocks directly through the UI, rather than in code).

We can use the uploaded S3 data through prefect_aws (the prefect-aws package).

Final section - connecting to Prefect Cloud

The cloud webapp has pretty much the same UI as the local one, and once I logged in and deployed a flow, I could see:

At least for now. I like Prefect a lot, and might end up using it for the final project of the MLOps Zoomcamp, but we'll see. I will give mage.ai another go.

That is all for today!

See you tomorrow :)