[Day 152] SVR & STTCM - Two architectures for taxi demand prediction

Hello :)

Today is Day 152!

A quick summary of today (papers read):

Updated graph of read papers from Obsidian:

The first paper introduces the Spatial-Temporal Tree Convolution Model (STTCM).

Introduction

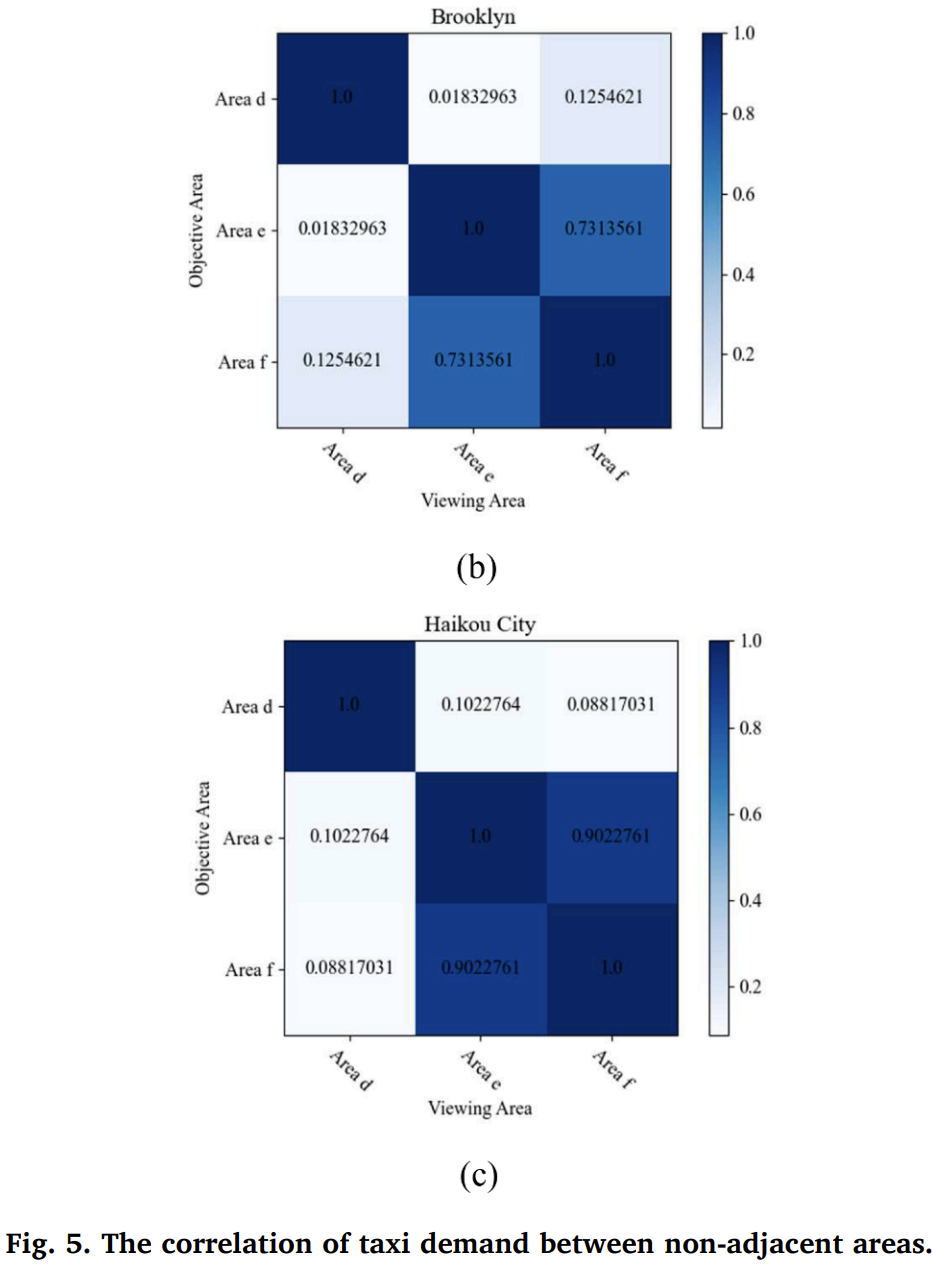

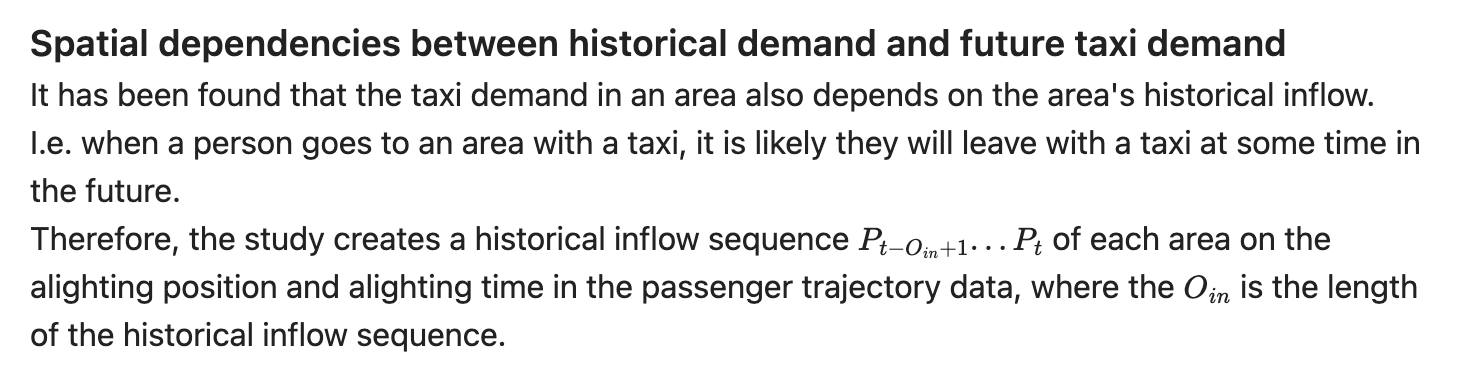

Spatial-temporal dependencies in taxi demand reflect how demand changes across locations and times. Temporally, demand follows the rhythm of the city: it is higher during rush hours and lower late at night, and it differs between weekdays and weekends. Spatially, demand varies by area type, for example high demand in business districts during rush hours and in nightlife zones late at night. The two kinds of dependency interact: the morning peak in a business district and the late-night peak in a nightlife hotspot each depend on both the place and the hour.

Taxi demand is influenced by multiple historical sequences and spatial dependencies. Temporally, demand is related to the immediately preceding time steps and also shows periodic changes. Historical inflows affect future demand too, as passengers often return by taxi.

Spatially, regions close to each other tend to have similar demand patterns and more taxi flows between them (see the figure below). However, demand can also be affected by distant areas through latent spatial dependencies, such as high weekday morning demand from residential areas to work areas and evening demand in the opposite direction.

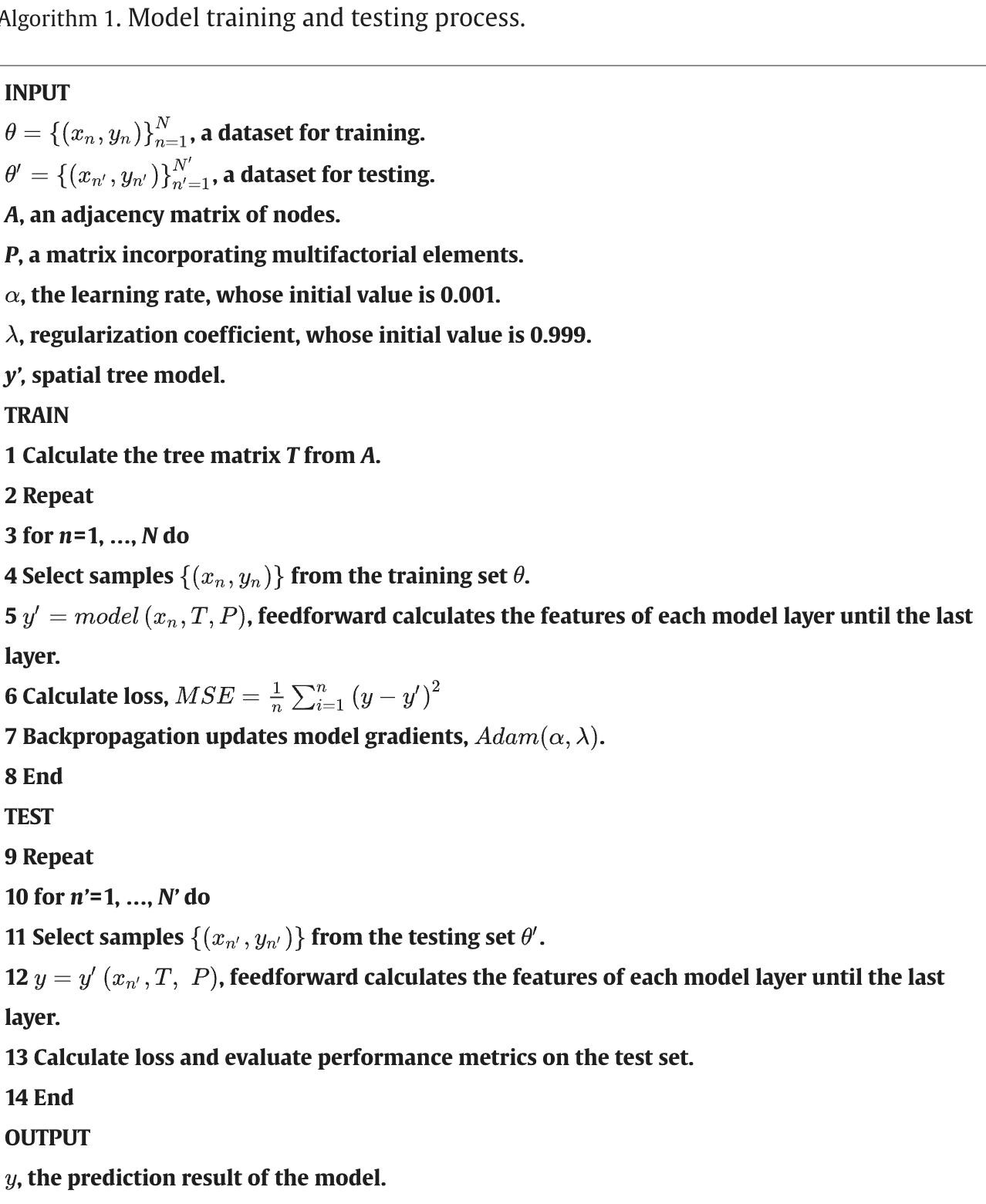
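To make the temporal side concrete, here is a minimal sketch of how a demand series could be turned into training samples that combine the most recent time steps with periodic lags. The function name `build_samples`, the hourly resolution and the lag sizes are my own assumptions for illustration, not the feature construction used in the STTCM paper.

```python
def build_samples(series, closeness=3, period=24, n_periods=2):
    """Return (features, target) pairs from a 1-D demand series.

    features = the `closeness` most recent values, followed by the values
    observed exactly 1..n_periods periods earlier (oldest first).
    """
    start = max(closeness, period * n_periods)
    samples = []
    for t in range(start, len(series)):
        recent = series[t - closeness:t]  # adjacent moments
        periodic = [series[t - k * period] for k in range(n_periods, 0, -1)]
        samples.append((recent + periodic, series[t]))
    return samples


# Hypothetical hourly demand that repeats every 24 hours, over 3 days.
demand = [float(hour % 24) for hour in range(72)]
samples = build_samples(demand)
```

Each sample then carries both the short-term trend and the same hour on previous days, which is the kind of input a temporal model can learn rush-hour patterns from.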

Definition of a tree matrix

Model design

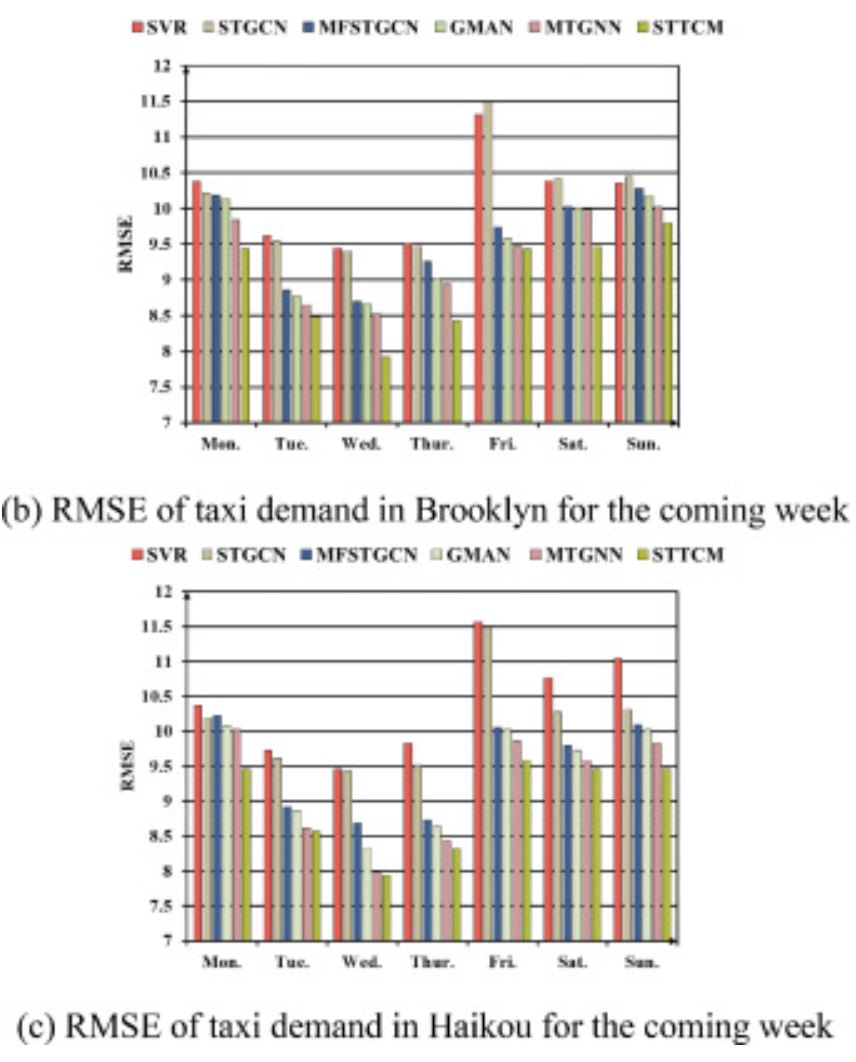

Results

- There is inherent sparsity in the constructed tree matrix.

- Incorporating auxiliary factors such as holidays and weather conditions is one idea for future improvements to STTCM.

- Another direction is incorporating new structures that better capture time-varying trends in inter-regional travel flows and in traffic flows within urban road networks.

The second paper was referenced in the first one, so I decided to read it as well. It is about Support Vector Regression (SVR) for predicting aircraft taxi-out time.

Introduction

Taxi-out time refers to the duration an aircraft spends moving on the ground from the departure gate to the takeoff runway. It starts when the aircraft is pushed back from the gate and ends when the aircraft's wheels lift off the ground during takeoff.

This period includes various activities such as taxiing to the runway, waiting in line for takeoff clearance, and any other ground movements necessary before departure. It's an essential factor in understanding airport operations and flight efficiency since longer taxi-out times can contribute to delays and congestion, affecting both airport capacity and overall flight punctuality.

Taxi-out time prediction techniques

Generalised Linear Model

GLM is a flexible generalisation of ordinary least squares (OLS) regression that allows response variables whose error distribution is not normal. It relates the linear model to the response variable through a link function and allows the magnitude of the variance of each measurement to be a function of its predicted value.
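A minimal sketch of the link-function idea, assuming a Poisson response with a log link. That distribution is my choice for illustration (the notes above do not say which one the paper uses), and `poisson_glm_predict` is a hypothetical helper name.

```python
import math


def poisson_glm_predict(x, beta, intercept):
    """Predicted mean and variance for a Poisson GLM with a log link."""
    eta = intercept + sum(b * xi for b, xi in zip(beta, x))  # linear predictor
    mean = math.exp(eta)  # inverse of the log link maps eta to the mean
    variance = mean       # Poisson: the variance is a function of the mean
    return mean, variance


mean, variance = poisson_glm_predict(x=[0.0], beta=[1.0], intercept=0.0)
```

The linear part is the same as in OLS. The link function and the mean-dependent variance are what the generalisation adds.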

Softmax Regression Model

SR is a generalisation of logistic regression allowing for multiple (more than two) output classes.
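As a small sketch of what "multiple output classes" means in practice, the softmax function turns one score per class into a probability distribution. The scores below are made up for illustration.

```python
import math


def softmax(scores):
    """Convert a list of class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three classes.
probs = softmax([1.0, 2.0, 3.0])
```

With exactly two classes this reduces to ordinary logistic regression.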

Artificial Neural Network

Improved Swarm Intelligence Algorithm Based Prediction Approaches

Support Vector Regression

SVR is a non-linear regression forecasting method in which the input variables are mapped into a high-dimensional feature space, commonly through a kernel function. In this higher-dimensional space the training data can be approximated by a linear function, and training on the finite sample yields a globally optimal solution.
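To illustrate the pieces involved, here is a rough sketch of an RBF kernel, the epsilon-insensitive loss that SVR minimises, and the form a trained SVR prediction takes. The support vectors, coefficients and bias in the example are invented values, not a fitted model.

```python
import math


def rbf_kernel(x, z, gamma):
    """RBF kernel: similarity that decays with squared distance."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Errors smaller than epsilon cost nothing; larger ones cost linearly."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def svr_predict(x, support_vectors, dual_coefs, bias, gamma):
    """Prediction is a weighted sum of kernel values plus a bias."""
    return bias + sum(
        c * rbf_kernel(sv, x, gamma) for sv, c in zip(support_vectors, dual_coefs)
    )


# Hypothetical one-support-vector model, for illustration only.
prediction = svr_predict([0.0], [[0.0]], [2.0], bias=1.0, gamma=1.0)
```

The prediction is linear in the kernel values even though it is non-linear in the original inputs.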

Particle Swarm Optimization

PSO solves an optimization problem by moving a population of particles (candidate solutions) through the search space, updating each particle's velocity and position with simple mathematical formulae. Each particle's position is updated towards better-known positions, guided by its neighbours' best performance and by the global best performance.
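Here is a minimal sketch of the common global-best variant of PSO, in which each particle is pulled towards its own best position and the swarm's best position. The parameter values (`w`, `c1`, `c2`), the swarm size and the sphere test function are assumptions for illustration and are not taken from the paper.

```python
import random


def pso(objective, dim, bounds, n_particles=20, n_iters=100,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimise `objective` with a basic global-best particle swarm."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]               # best position seen by each particle
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # best position seen by the swarm

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia + pull towards personal best + pull towards global best
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def sphere(x):
    """Toy objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


best, best_val = pso(sphere, dim=2, bounds=(-5.0, 5.0))
```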

Improved Firefly Algorithm Optimization

The Firefly Algorithm (FA) is a relatively recent approach to solving optimization problems that works by simulating the behavior of fireflies. Fireflies are attracted to each other according to their brightness. A firefly's brightness depends on its location and the target (objective) value there, with higher brightness indicating a better location, and brighter fireflies exert a stronger attraction. If fireflies have similar brightness, they move randomly. Overall, FA is effective at solving optimization problems and can outperform traditional algorithms like Genetic Algorithms (GA).
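A rough sketch of the basic firefly algorithm for minimisation, not the improved variant (IFA) from the paper. Lower objective value is treated as higher brightness, attraction decays with squared distance, and a small random step is added to every move. All parameter values are assumptions for illustration, and for brevity the brightest firefly simply stays put instead of moving randomly.

```python
import math
import random


def firefly_minimise(objective, dim, bounds, n_fireflies=15, n_iters=50,
                     beta0=1.0, gamma=0.1, alpha=0.2, seed=0):
    """Basic firefly algorithm; returns best position, best cost, cost history."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_fireflies)]
    cost = [objective(p) for p in pos]  # lower cost means a brighter firefly
    history = [min(cost)]

    for it in range(n_iters):
        step = alpha * (0.95 ** it)  # shrink the random step over time
        for i in range(n_fireflies):
            for j in range(n_fireflies):
                if cost[j] < cost[i]:  # j is brighter, so i moves towards j
                    r2 = sum((a - b) ** 2 for a, b in zip(pos[i], pos[j]))
                    beta = beta0 * math.exp(-gamma * r2)  # attraction fades with distance
                    pos[i] = [
                        min(hi, max(lo, a + beta * (b - a) + step * (rng.random() - 0.5)))
                        for a, b in zip(pos[i], pos[j])
                    ]
                    cost[i] = objective(pos[i])
        history.append(min(cost))

    best = min(range(n_fireflies), key=lambda k: cost[k])
    return pos[best], cost[best], history


def sphere(x):
    """Toy objective with its minimum of 0 at the origin."""
    return sum(v * v for v in x)


best, best_val, history = firefly_minimise(sphere, dim=2, bounds=(-5.0, 5.0))
```

Because the current brightest firefly never moves in this sketch, the best cost in `history` can only stay the same or improve from one iteration to the next.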

PSO/IFA Based Support Vector Regression

This study combines swarm intelligence algorithms with SVR to improve prediction accuracy. Since the training data has many more samples than features, the input variables are mapped into a Hilbert space using the RBF kernel, which the paper reports to be more effective than other kernels. To predict departure taxi-out time accurately, the SVR model needs well-chosen values for three parameters: the penalty factor, the RBF kernel parameter, and epsilon. PSO and IFA are used to find these values before training. Poorly chosen values can increase training error, cause overfitting, or make learning less effective.
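One way to picture the coupling: each swarm position is a 3-dimensional vector that gets decoded into the three SVR parameters, and the fitness of that position is the validation error of an SVR trained with them. The sketch below shows only that wiring. The log10 encoding, the function names and the toy error surface are my own assumptions, and a real setup would replace `toy_validation_error` with actual SVR training and validation.

```python
import math


def decode_position(position):
    """Map a 3-D search position (log10 scale) to SVR hyperparameters."""
    log_c, log_gamma, log_eps = position
    return {
        "C": 10.0 ** log_c,          # penalty factor
        "gamma": 10.0 ** log_gamma,  # RBF kernel parameter
        "epsilon": 10.0 ** log_eps,  # width of the insensitive tube
    }


def make_fitness(validation_error):
    """Wrap an error function so a swarm optimiser can minimise it."""
    def fitness(position):
        return validation_error(**decode_position(position))
    return fitness


def toy_validation_error(C, gamma, epsilon):
    """Hypothetical stand-in for 'train an SVR and measure its error'."""
    return ((math.log10(C) - 1.0) ** 2
            + (math.log10(gamma) + 2.0) ** 2
            + (math.log10(epsilon) + 1.0) ** 2)


fitness = make_fitness(toy_validation_error)
params = decode_position([1.0, -2.0, -1.0])
```

Searching in log space is a common choice because useful values of these parameters often span several orders of magnitude.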

Performance Measures

Root mean squared error (RMSE), mean absolute percentage error (MAPE), squared correlation coefficient, and prediction accuracy.
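For reference, a small sketch of the first three measures using their standard definitions. The taxi-out times below are made-up numbers in minutes, not data from the paper.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (undefined if a true value is 0)."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def squared_correlation(y_true, y_pred):
    """Square of the Pearson correlation between true and predicted values."""
    n = len(y_true)
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mean_t) ** 2 for t in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    return cov * cov / (var_t * var_p)


# Hypothetical taxi-out times in minutes.
actual = [10.0, 20.0, 30.0]
predicted = [12.0, 18.0, 33.0]
scores = (rmse(actual, predicted), mape(actual, predicted),
          squared_correlation(actual, predicted))
```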

Data

Results

That is all for today!

See you tomorrow :)