[Day 135] Going deeper into MLOps

Hello :)

Today is Day 135!

A quick summary of today:

- covered module 2 of the mlops-zoomcamp by DataTalks.Club about experiment tracking and model management

- cut 2 more videos for the Scottish dataset project

Firstly, about using mlflow for MLOps

Maybe this is because I am starting to learn about MLOps for the 1st time and I don't know other tools, but WOW mlflow is amazing. Below are my notes from the module 2 lectures. Full code on my github repo.

First, some important concepts

- ML experiment: the process of building an ML model

- experiment run: each trial in an ML experiment

- run artifact: any file that is associated with an ML run

- experiment metadata

- experiment tracking: the process of keeping track of all the relevant info from an ML experiment (this could include source code, environment, data, model, hyperparams, metrics and more; it varies)

- reproducibility

- organization

- optimization

- error prone

- no standard format

- visibility and collaboration

We can spin up mlflow with: mlflow ui --backend-store-uri sqlite:///mlflow.db

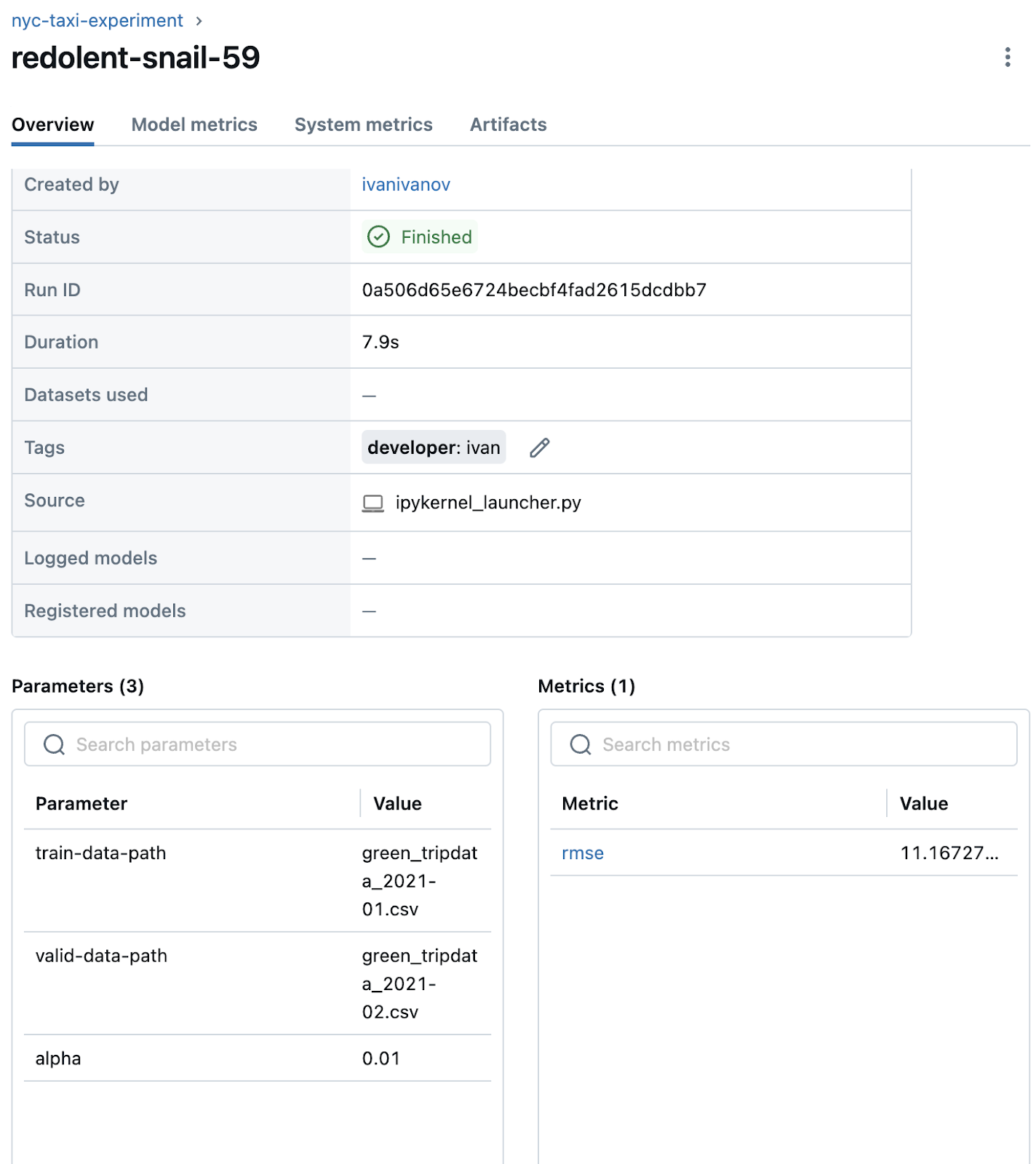

With the above we can log a run, along with its params, metrics and other info.
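As a rough sketch of what logging a run can look like in code: the experiment name, the parameter names and the `rmse` helper below are my own illustrative assumptions, not the exact code from the course notebook. Only the sqlite URI is taken from the command above.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error of two equal-length sequences."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def log_run(params, metrics, experiment="my-experiment",
            tracking_uri="sqlite:///mlflow.db"):
    """Log one run with its params and metrics (names here are hypothetical)."""
    # Imported here so that rmse() stays usable without mlflow installed.
    import mlflow

    mlflow.set_tracking_uri(tracking_uri)  # same store the UI was started with
    mlflow.set_experiment(experiment)      # created on first use
    with mlflow.start_run() as run:
        mlflow.log_params(params)          # e.g. {"alpha": 0.01}
        mlflow.log_metrics(metrics)        # e.g. {"rmse": 6.3}
    return run.info.run_id
```

Calling something like `log_run({"alpha": 0.1}, {"rmse": rmse(y_val, y_pred)})` after each training run would then add one row per run to the UI, assuming `y_val` and `y_pred` hold the validation targets and predictions.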

In the UI, we see the logged run.

If we run another model with alpha=0.1, we can compare the two.

Next, doing hyperparam optimization using xgboost and hyperopt

We need to define an objective and a search space, and then run the search.
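A minimal sketch of the objective / search space / run pattern with hyperopt. The ranges in the search space and the `train_and_score` callback are assumptions for illustration; the callback stands in for "train xgboost with these params and return the validation rmse". In my notebook each trial was also logged to mlflow, which is left out here to keep the sketch short.

```python
def make_objective(train_and_score):
    """Wrap a scoring function into the dict format hyperopt's fmin expects."""
    def objective(params):
        loss = train_and_score(params)  # hypothetical: returns validation rmse
        # "ok" is the value of hyperopt.STATUS_OK
        return {"loss": loss, "status": "ok"}
    return objective


def run_search(train_and_score, max_evals=50):
    """Run a TPE search over an illustrative xgboost search space."""
    # Imported here so make_objective() works without hyperopt installed.
    from hyperopt import Trials, fmin, hp, tpe
    from hyperopt.pyll import scope

    search_space = {
        # Assumed ranges, tune them for your own data.
        "max_depth": scope.int(hp.quniform("max_depth", 4, 100, 1)),
        "learning_rate": hp.loguniform("learning_rate", -3, 0),
        "min_child_weight": hp.loguniform("min_child_weight", -1, 3),
    }
    return fmin(
        fn=make_objective(train_and_score),
        space=search_space,
        algo=tpe.suggest,
        max_evals=max_evals,
        trials=Trials(),
    )
```

`run_search` returns the best raw values found, which can then be used to retrain a final model.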

After this ran (for around 30 mins), in mlflow’s UI we can visualise all the runs that use the xgboost model.

It also shows which hyperparam combinations lead to which results.

For example, if we look at a scatter plot of rmse against min_child_weight, we can see somewhat of a pattern where a smaller min_child_weight results in a smaller rmse.

We can also see a contour plot, which shows 2 hyperparams and their combined effect on the rmse.

How do we go about model selection?

A naive way would be to choose the model with the lowest rmse.

After clicking on it we can check more info about it, and we can make further judgements based on things like run duration, data used, etc.
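The same selection logic can be sketched in plain Python. The run records below are made-up numbers purely for illustration; with a real tracking server, I believe `MlflowClient.search_runs(..., order_by=["metrics.rmse ASC"])` can return runs already sorted by rmse.

```python
def pick_run(runs, max_duration_s=None):
    """Return the run with the lowest rmse, optionally ignoring slow runs."""
    candidates = [
        r for r in runs
        if max_duration_s is None or r["duration_s"] <= max_duration_s
    ]
    if not candidates:
        raise ValueError("no run satisfies the duration limit")
    # Lowest rmse wins; a shorter duration breaks ties.
    return min(candidates, key=lambda r: (r["rmse"], r["duration_s"]))


# Hypothetical run records, not real results.
runs = [
    {"run_id": "a", "rmse": 6.31, "duration_s": 420.0},
    {"run_id": "b", "rmse": 6.30, "duration_s": 1500.0},
    {"run_id": "c", "rmse": 6.45, "duration_s": 35.0},
]

print(pick_run(runs)["run_id"])                      # b
print(pick_run(runs, max_duration_s=600)["run_id"])  # a
```

The second call shows why the naive choice is not always the right one: a tiny rmse gain may not be worth a run that takes several times longer.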

Instead of writing a line for everything we want to log, we can use autolog. When we run the model with the best params from the hyperparam search, we get a bit more info. In model metrics:

We have more content in the ‘Artifacts’ tab as well, like model info, dependencies, and how to run the model.
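A hedged sketch of training with autolog turned on. `prepare_params` and the default values in it are my own assumptions (tuned values such as `max_depth` often come back as floats and need casting); `train` and `valid` are assumed to be `xgboost.DMatrix` objects.

```python
def prepare_params(best):
    """Turn tuned values into a params dict that xgboost accepts."""
    params = dict(best)                             # do not mutate the input
    params["max_depth"] = int(params["max_depth"])  # may arrive as a float
    params.setdefault("objective", "reg:squarederror")  # assumed default
    params.setdefault("seed", 42)                       # assumed default
    return params


def train_with_autolog(best, train, valid, num_boost_round=1000):
    """Train xgboost while mlflow logs params, metrics and the model itself."""
    # Imported here so prepare_params() works without these packages installed.
    import mlflow
    import mlflow.xgboost
    import xgboost as xgb

    mlflow.xgboost.autolog()  # replaces the manual log_param / log_metric calls
    with mlflow.start_run():
        return xgb.train(
            params=prepare_params(best),
            dtrain=train,
            num_boost_round=num_boost_round,
            evals=[(valid, "validation")],
            early_stopping_rounds=50,
        )
```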

Next, I learned about model management

What's wrong with using a folder system?

- error prone

- no versioning

- no model lineage

Instead of just getting the bin file, we can get better logging using

Which results in

We can also log the DictVectorizer used in the data preprocessing step, and get it from mlflow
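Roughly, logging the model together with the preprocessor and fetching the preprocessor back could look like this. The file path and the artifact folder names (`preprocessor`, `models_mlflow`) are assumptions for the sketch; `booster` is assumed to be a trained xgboost model and `preprocessor` a fitted DictVectorizer.

```python
import pickle
from pathlib import Path


def save_preprocessor(preprocessor, path="models/preprocessor.b"):
    """Pickle the fitted preprocessor (e.g. a DictVectorizer) to disk."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("wb") as f_out:
        pickle.dump(preprocessor, f_out)
    return path


def log_model_and_preprocessor(booster, preprocessor):
    """Log both the xgboost model and its preprocessor under one run."""
    # Imported here so save_preprocessor() works without mlflow installed.
    import mlflow
    import mlflow.xgboost

    with mlflow.start_run() as run:
        dv_path = save_preprocessor(preprocessor)
        mlflow.log_artifact(str(dv_path), artifact_path="preprocessor")
        # Newer mlflow releases prefer name= over artifact_path= here.
        mlflow.xgboost.log_model(booster, artifact_path="models_mlflow")
    return run.info.run_id


def load_preprocessor(run_id):
    """Download the pickled preprocessor from a run and unpickle it."""
    import mlflow.artifacts

    local_path = mlflow.artifacts.download_artifacts(
        run_id=run_id, artifact_path="preprocessor/preprocessor.b"
    )
    with open(local_path, "rb") as f_in:
        return pickle.load(f_in)
```

Keeping the preprocessor next to the model means whoever loads the model later can transform raw inputs in exactly the same way as during training.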

Using the saved model for predictions: when a model is logged, mlflow provides sample code for it in the artifact tab.

If we use the sample pandas code to load the model, we can check out the model.

Since it is an xgboost model, we can also load it with mlflow’s xgboost flavour and then use it as normal.

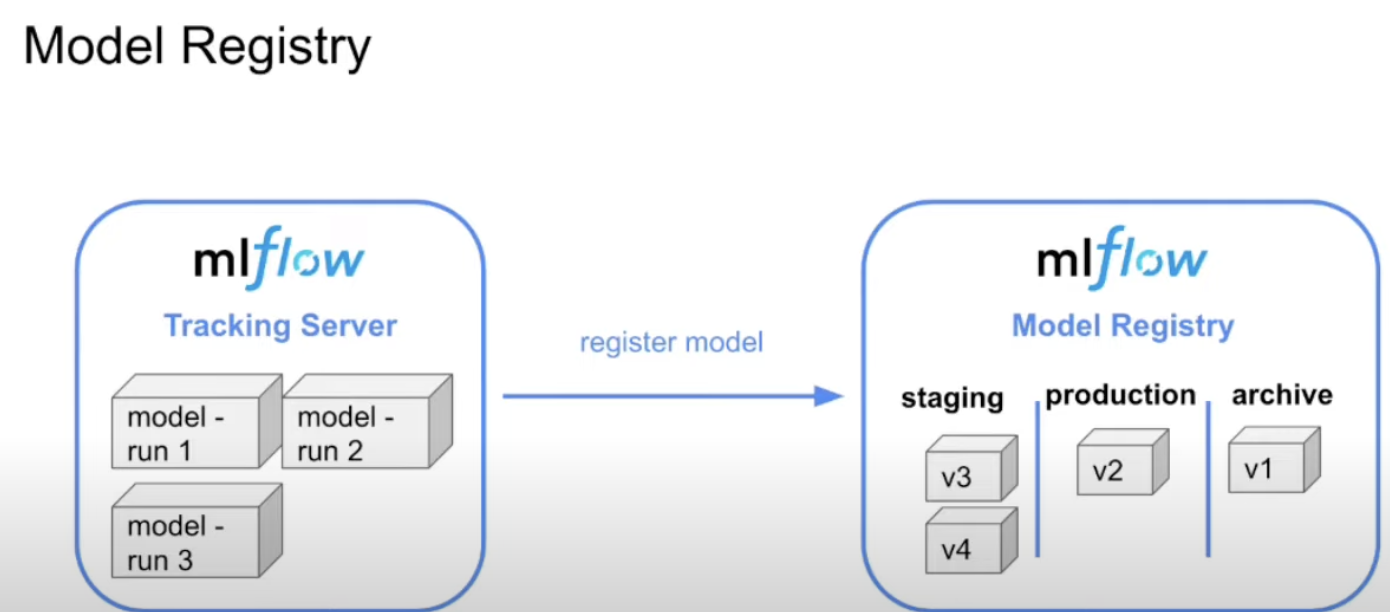
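For reference, the two ways of loading a logged model can be sketched as below. The `models_mlflow` artifact path and the `run_id` value are assumptions; use whatever path and run id your own run shows in the UI.

```python
def run_model_uri(run_id, artifact_path="models_mlflow"):
    """Build the runs:/ URI that mlflow uses to locate a logged model."""
    return f"runs:/{run_id}/{artifact_path}"


def load_as_pyfunc(run_id):
    """Load the model behind mlflow's generic python-function interface."""
    # Imported here so run_model_uri() works without mlflow installed.
    import mlflow.pyfunc

    return mlflow.pyfunc.load_model(run_model_uri(run_id))


def load_as_xgboost(run_id):
    """Load the model as a native xgboost object."""
    import mlflow.xgboost

    return mlflow.xgboost.load_model(run_model_uri(run_id))
```

The pyfunc version exposes a generic `predict` that accepts a pandas DataFrame, while the xgboost version gives back the native model, so it expects the usual xgboost inputs (for a Booster, a `DMatrix`).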

Next, model registry

Imagine a scenario where a DS sends me a developed model and asks me to deploy it. My task is to take it and run it in prod. But before I do, I should ask what is different from the previous version and what the purpose of the change is, and which dependencies (and which versions) the model’s environment needs.

This email is not very informative, so I might start to look through my emails for the previous prod version. If I am lucky enough, someone who was in my place before me, or a DS, has put the info about the current prod version in a tool for experiment tracking (like mlflow).

The pictures above with lots of model runs show the tracking server. A model registry lists models that are in different stages, like production and archive. To do the actual deployment, we need some CI/CD. When analysing which model is best for production, we can consider model metrics, size, run time (complexity) and data.

To promote a model to the model registry, we can just click on the ‘register model’ button in a model’s artifact tab. We can then go to the models tab in mlflow and see the registered models (I ran and registered a 2nd model).

In the newest version of mlflow, they have removed the staging/production/archived stage flags in favour of more flexible aliases, so I assigned custom champion and challenger aliases.

Now the deployment engineer (assuming they understand the meaning of the aliases) can decide what to do. All of the above actions can be done through code as well, with no need to use the UI (I learned some of the functions from the mlflow client docs and used them in the notebook on github).
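A small sketch of doing the registration and aliasing from code. The model name passed in and the `models_mlflow` artifact path are hypothetical; the calls are the ones I understand from the mlflow client docs for releases that support aliases.

```python
def alias_uri(model_name, alias):
    """Build the models:/ URI that points at a registered model's alias."""
    return f"models:/{model_name}@{alias}"


def register_and_alias(run_id, model_name, alias,
                       artifact_path="models_mlflow"):
    """Register the model logged under a run and give that version an alias."""
    # Imported here so alias_uri() works without mlflow installed.
    import mlflow
    from mlflow.tracking import MlflowClient

    version = mlflow.register_model(
        f"runs:/{run_id}/{artifact_path}", model_name
    )
    MlflowClient().set_registered_model_alias(
        model_name, alias, version.version
    )
    return version.version


def load_by_alias(model_name, alias="champion"):
    """Load whichever version currently carries the given alias."""
    import mlflow.pyfunc

    return mlflow.pyfunc.load_model(alias_uri(model_name, alias))
```

Because deployment code only refers to the alias, promoting a challenger is just a matter of moving the `champion` alias to another version.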

Next, I learned about mlflow in practice, through 3 scenarios:

1 - no need to use mlflow, saving locally is fine

2 - running mlflow locally would be enough. Using model registry would be a good idea to manage the lifecycle of the models. But it is not clear if we can just run it locally or need a host.

3 - MLflow needed. We need a remote tracking server. Also model registry is important because there might be different ppl with different tasks.

For the 3rd scenario I wanted to follow along and create an AWS instance, but I don’t have an account and for some reason my card keeps getting rejected. So I guess I will have to do it some other time 😕

This website shows a comparison between mlflow and paid/free alternatives.

Secondly, about the Scottish dataset

Today I finished the last 2 videos from my collaborator

And now the total audio length in our dataset from all 10 videos is 770 seconds. I need to find a better way to store our audio files and the dataset itself. It all lives on my Google Drive at the moment.

I also added some other metadata for each clip.

Tomorrow and Friday are AWS Summit Seoul 2024, and there are a lot of panels each day (2 days in total). So I will share my experience and the panels I attended.

That is all for today!

See you tomorrow :)