[Day 134] Finished CS109 + Scottish dataset project + Started MLOps zoomcamp by DataTalks club

Hello :)

Today is Day 134!

A quick summary of today:- covered the last 2 lectures of Stanford's CS109

- processed 4 more videos for the Scottish accent dataset

- started MLOps zoomcamp by DataTalks club

- watched a nice video comparing the roles of data scientist vs AI engineer

Firstly, on lecture 27: advanced probability and lecture 28: future of probability from Stanford's CS109

What a course! Sad it is over. Chris Piech - what an extraordinary professor!

Both lectures were more or less an overview of the course and professor Piech's hopes for the future of probability and his hopes for his students.

Lecture 27

Around 2013, autograd was introduced for the first time and this allowed for backprop to be automated, instead of manual calculations. This was one of the reasons for deep learning to explode.The professor also talked a bit about diffusion models

#1 remove the noise#2 predict where the noise is

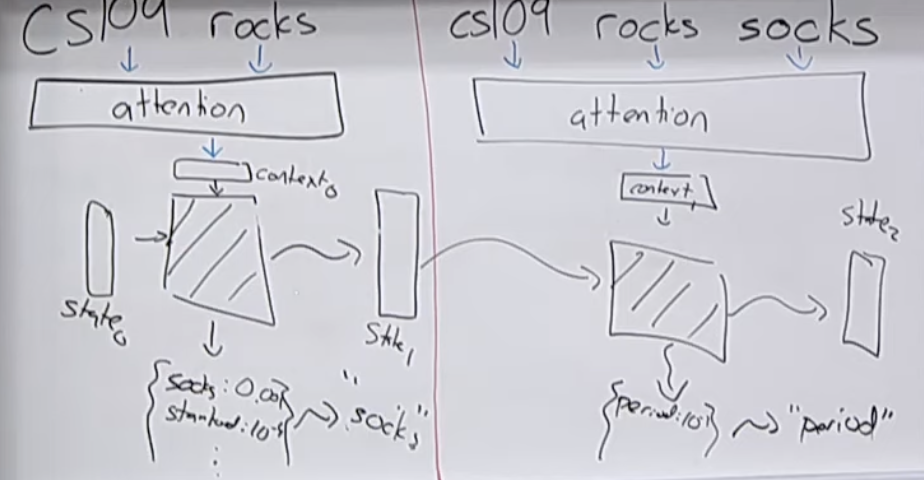

And a bit about language models (at the time of recording ChatGPT had just come out). And he introduced a basic version of RNN/LSTM.

Lecture 28Besides an overview of what was covered throughout the previous 27 lectures, he talked about potential usage of AI in education and how it can help provide feedback to students, and in medicine.

Secondly, about the Scottish dataset project

Today I cut videos 'Scottish phrases 5-8' (4 videos). And now the total time of the audio clips we have in the dataset is 10 minutes :party: We are improving slowly.

Thirdly, started MLOps zoomcamp by DataTalks club

Last night was the opening day for the MLOps camp that I signed up for. It is one (if not the) most popular open courses for MLOps, so I was excited to start it. Well today I covered module 1 and its homework. Below are notes from the intro lecture + module 1 + the homework.

Intro lecture

The course will cover the following topics

Module 1I think the coolest thing was learning about the different MLOps maturity levels (introduced by Microsoft)

Level 0: no MLOps

- no automation

- all code in jupyter

- good for experiments

Level 1: DevOps, No MLOps

- there are experienced devs helping the data scientists

- some automation

- releases are automeated

- unit & integration tests

- CI/CD

- ops metrics

- no experiment tracking

- no reproducability

- data scientists separated from engineers

Level 2: Automated training

- training pipeline

- experiment tracking

- model registry

- low friction deployment

- data scientists work with engineers

- good level if we have 2-3 ML cases

Level 3: Automated deployment

- easy to deploy models

- pipeline is: data prep, model traning, model deployment

- A/B tests between models

- some model monitoring

Level 4: Full MLOps automation

- automatic training

- automatic retraining

- automatic deployment

- A/B tests

- approaching a zero-downtime system

Not all orgs need to be on level 4. Level 3 is still fine because we can have a human making that final decision whether a model goes live. So we need to judge what level is best for a particular project.

The homework

I uploaded all my work to my github.

The homework followed the module 1 materia on creating a linear regression model that predicts the taxi travel time from point A to point B. It involves some data preprocessing, model creation and exporting the model with pickle.

Q1: loading the data and how many columns there are

Q2: What's the standard deviation of the trips duration in January?

Q3: What fraction of the records left after you dropped the outliers?

Q4: What's the dimensionality of the matrix (number of columns) after using DictVectorizer?Q5: What's the RMSE on train?We can interpret this as being 7.6 minutes wrong on average in our ride duration predictions.

Q6: What's the RMSE on validation?

After loading the val dataset (data from February 2023, train is January 2023), I got the result

Overall, pretty satisfied, and excited for the future modules and homeworks.

Finally, the comparison between a data scientist and an AI engineer

This IBM video was in my recommended on youtube so I clicked on it to see. And the below pic is the final explanation.

Some abbreviations: FM: foundation model, FE: feature eng, CV: cross-val, HPT: hyperparam tuning, PEFT: param efficiant finetuning. Of course the below is not static, DS might work on prescriptive cases, and AI eng can work with structured data.

That is all for today!

See you tomorrow :)