[Day 131] Meeting for the Scottish dataset project + CS109: Deep learning

Hello :)

Today is Day 131!

A quick summary of today:

- Met (over Zoom) the enthusiast who wants to join the Scottish AI dataset project

- Covered lecture 25: deep learning from Stanford's CS109

- Day 3 of MLx Fundamentals

Tonight is the final day (Day 4) of MLx Fundamentals, so hopefully I will cover the last 2 classes in tomorrow's or the next day's post.

Firstly, about the meeting

For now I will call them M, but next time I will ask if I can just use their first name here. Anyway ~ we connected on LinkedIn and decided to meet over Zoom this morning. The meeting lasted about 50 minutes.

Topics we covered:

- general intro of each other

- a bit of background

- M told me a bit more about the Scottish accent: there are 3 main splits (Highlands, West coast and East coast), and the accent from where they are from (the West coast), known as Glaswegian, is the least represented

- We talked about clips: what audio we can use and how long the clips should be

- After the meeting, I read some more research on the topic, and a couple of papers use audio corpora of 2 to 100 hours. So I guess we do not need *that* big of a dataset to get some kind of model going. There are still many questions we need to answer, but for now we decided that the first step is just to create a 'raw' dataset with audio and transcriptions.

- We decided to meet again next Saturday, and I am excited

- During the week we will transcribe M's videos and also start with some podcasts as per M's suggestion
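To picture what that first 'raw' dataset step could look like, here is a minimal sketch of one possible layout: one record per clip with the audio path, its transcription and its duration, stored as JSON Lines, plus a helper to check the total hours against the 2 to 100 hour range from those papers. The field names, file paths, the `region` label and the JSON Lines format are all my own hypothetical choices, not something M and I agreed on.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class Clip:
    audio_path: str    # hypothetical path to the audio file
    transcript: str    # human transcription of the clip
    duration_s: float  # clip length in seconds
    region: str        # hypothetical accent label, e.g. "West coast"

def to_jsonl(clips: list[Clip]) -> str:
    """Serialise clips as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(asdict(c)) for c in clips)

def total_hours(clips: list[Clip]) -> float:
    """Total audio in hours, to compare against the 2-100 hour range."""
    return sum(c.duration_s for c in clips) / 3600.0

# Hypothetical placeholder records (no real data)
clips = [
    Clip("clips/m_video_001.wav", "placeholder transcript", 12.5, "West coast"),
    Clip("clips/podcast_001.wav", "placeholder transcript", 30.0, "East coast"),
]
print(to_jsonl(clips))
print(f"{total_hours(clips):.4f} hours so far")
```

A plain text format like this keeps the raw dataset easy to grow clip by clip before we decide on any model or training setup.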

Secondly, about DL, as explained by Professor Chris Piech

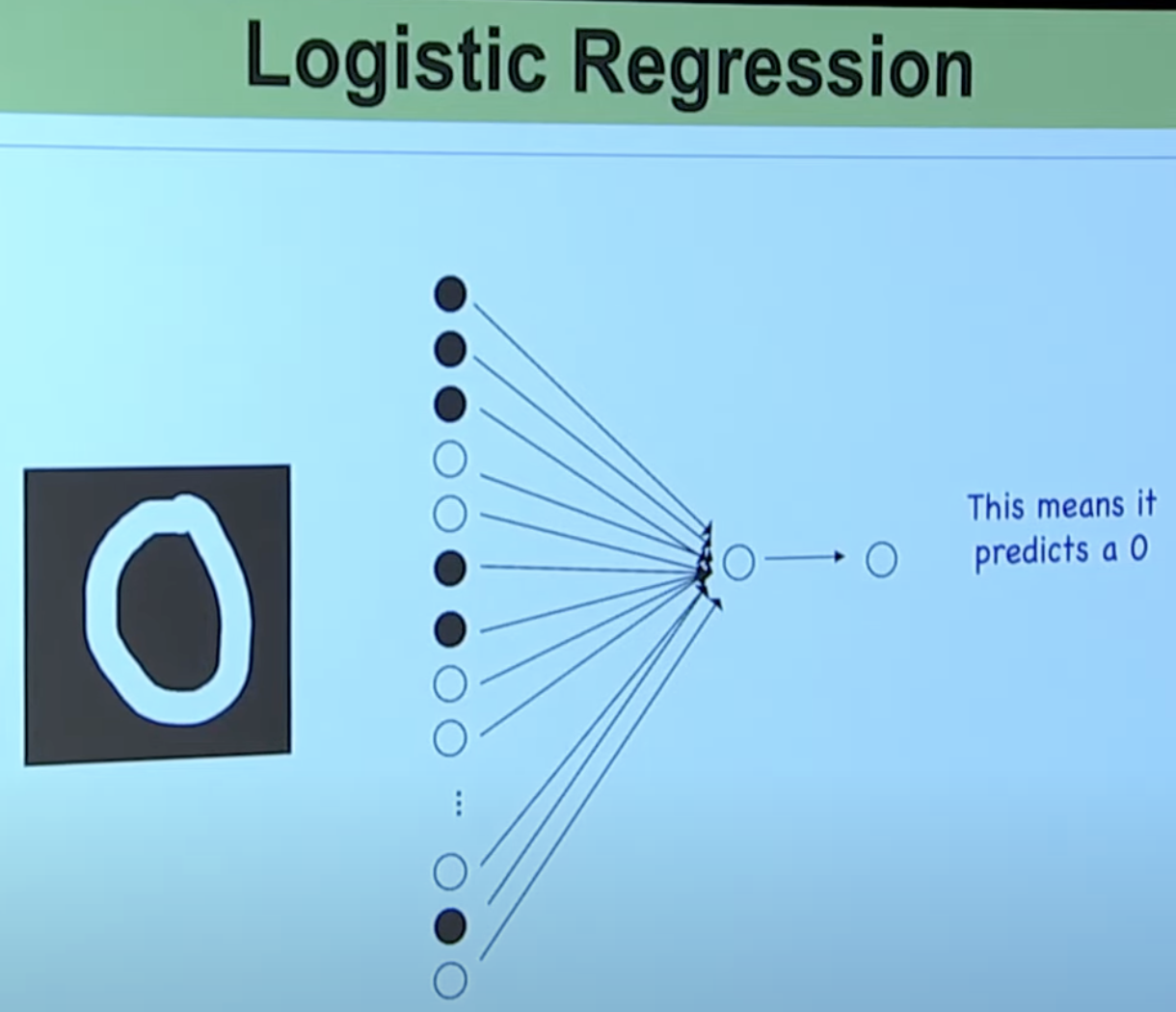

Now, we can think of each neuron as a single logistic regression. Giving an image of a number to a single logistic regression overwhelms it, and it does not work: an image is *a lot* of pixels (inputs), and the interaction between the inputs and the output is much more complicated. What if we stacked these logistic regressions onto each other?

Every single circle in the hidden layer is a logistic regression connected to the inputs. Each circle takes the inputs, weights them in its own way with its own parameters, sums them, squashes the sum, and turns on (close to 1) or off (close to 0). Once we have this hidden layer, we add one more logistic regression that takes the hidden layer as its input and predicts the output.
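Here is a minimal sketch of that "stacked logistic regressions" idea in plain Python: each hidden unit weights, sums and squashes the inputs, and one more logistic regression sits on top of the hidden layer. The 4 "pixels", the 3 hidden units and all parameter values are made up for illustration; a real digit image would have far more inputs.

```python
import math

def sigmoid(z: float) -> float:
    """The 'squash' step of a logistic regression."""
    return 1.0 / (1.0 + math.exp(-z))

def logistic_unit(inputs: list[float], weights: list[float], bias: float) -> float:
    """One circle: weight the inputs, sum them, squash the sum."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def forward(x, hidden_params, output_params):
    """Hidden layer of logistic units, then one logistic regression on top."""
    hidden = [logistic_unit(x, w, b) for w, b in hidden_params]
    w_out, b_out = output_params
    return logistic_unit(hidden, w_out, b_out)

# Hypothetical toy example: 4 "pixels", 3 hidden units, made-up parameters
x = [0.0, 1.0, 1.0, 0.0]
hidden_params = [
    ([0.5, -0.2, 0.1, 0.0], 0.0),
    ([-0.3, 0.8, 0.8, -0.3], -0.5),
    ([0.2, 0.2, -0.4, 0.1], 0.1),
]
output_params = ([1.0, 2.0, -1.0], -0.5)

p_one = forward(x, hidden_params, output_params)  # P(y = 1 | x), e.g. "this digit is a 1"
print(f"P(y=1 | x) = {p_one:.3f}")
```

The output is a probability between 0 and 1, which is exactly what the final logistic regression in the picture predicts.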

We want to choose thetas that maximise the likelihood of our dataset

In the pic, we are predicting handwritten 0s and 1s only.
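Written out for a binary label (the 0-vs-1 digits), and assuming $n$ independent training examples where the network outputs $\hat{y}^{(i)} = P(Y = 1 \mid x^{(i)})$, the objective is the standard Bernoulli likelihood. This is my own write-up; taking the log does not change the argmax because log is monotonic, and it turns the product into a sum:

$$
\theta_{\text{MLE}}
= \arg\max_{\theta} \prod_{i=1}^{n} \left(\hat{y}^{(i)}\right)^{y^{(i)}} \left(1 - \hat{y}^{(i)}\right)^{1 - y^{(i)}}
= \arg\max_{\theta} \sum_{i=1}^{n} \left[ y^{(i)} \log \hat{y}^{(i)} + \left(1 - y^{(i)}\right) \log\left(1 - \hat{y}^{(i)}\right) \right]
$$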

How do we learn again? A DL model gets its intelligence from its thetas. How do we find good thetas? By looking for the ones that maximise the likelihood of our training data. How do we do that? We use optimization techniques like gradient descent (strictly, gradient ascent on the log-likelihood, which is the same as gradient descent on its negative). To do gradient descent, we need the partial derivatives of the log-likelihood with respect to each theta.

It’s all chain rule!
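To make "it's all chain rule" concrete, here is a minimal sketch in plain Python (no DL library), assuming the setup from the picture: one hidden layer of logistic units, one logistic output, and the log-likelihood of a single example. All data and parameter values are made up. Since we want to *maximise* the likelihood, the update is gradient ascent.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, W, b, v, c):
    """Return hidden activations h and y_hat for a 1-hidden-layer network."""
    h = [sigmoid(sum(w_jk * x_k for w_jk, x_k in zip(W_j, x)) + b_j)
         for W_j, b_j in zip(W, b)]
    y_hat = sigmoid(sum(v_j * h_j for v_j, h_j in zip(v, h)) + c)
    return h, y_hat

def log_likelihood(x, y, W, b, v, c):
    _, y_hat = forward(x, W, b, v, c)
    return y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat)

def gradients(x, y, W, b, v, c):
    """Partial derivatives of the log-likelihood, computed with the chain rule."""
    h, y_hat = forward(x, W, b, v, c)
    d_out = y - y_hat                # dLL/dz_out for a sigmoid output + log-likelihood
    dv = [d_out * h_j for h_j in h]  # dLL/dv_j = dLL/dz_out * h_j
    dc = d_out                       # dLL/dc = dLL/dz_out * 1
    # Chain back through each hidden unit: dLL/dz_j = dLL/dz_out * v_j * h_j * (1 - h_j)
    d_hidden = [d_out * v_j * h_j * (1 - h_j) for v_j, h_j in zip(v, h)]
    dW = [[d_j * x_k for x_k in x] for d_j in d_hidden]  # dLL/dW_jk = dLL/dz_j * x_k
    db = d_hidden                                        # dLL/db_j = dLL/dz_j
    return dW, db, dv, dc

# Hypothetical toy example and starting parameters
x, y = [1.0, 0.0, 1.0], 1
W = [[0.1, -0.2, 0.3], [0.0, 0.4, -0.1]]
b = [0.0, 0.1]
v = [0.5, -0.3]
c = 0.0
lr = 0.5

before = log_likelihood(x, y, W, b, v, c)
dW, db, dv, dc = gradients(x, y, W, b, v, c)
# Gradient ascent: step in the direction that increases the likelihood
W = [[w + lr * g for w, g in zip(W_j, dW_j)] for W_j, dW_j in zip(W, dW)]
b = [b_j + lr * g for b_j, g in zip(b, db)]
v = [v_j + lr * g for v_j, g in zip(v, dv)]
c = c + lr * dc
after = log_likelihood(x, y, W, b, v, c)
print(f"log-likelihood: {before:.4f} -> {after:.4f}")
```

A good habit is to check hand-derived gradients like these against finite differences, (LL(θ + ε) − LL(θ − ε)) / 2ε, before trusting them.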

Finally, a general overview of the material covered on Day 3 of MLx Fundamentals.

Yuki M. Asano from the University of Amsterdam provided a general intro to deep learning.

Covered topics were: the history of DL, activation functions (ReLU, ELU, GELU, Softmax), backprop (Andrej Karpathy's video was mentioned ^^), optimization, CNNs, and self-supervised learning. Definitely a lot of material for a 2-hour slot, but it was a good overall intro lecture.

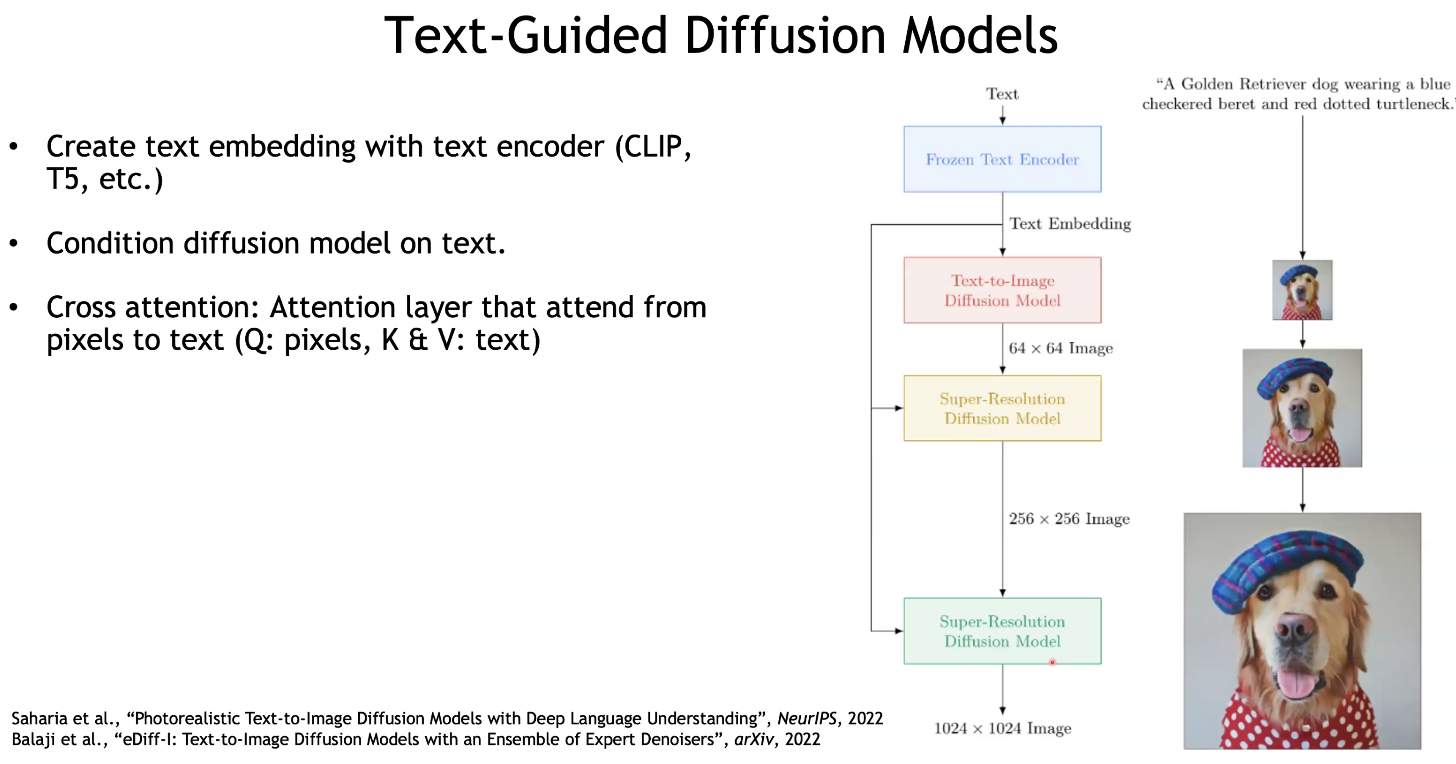

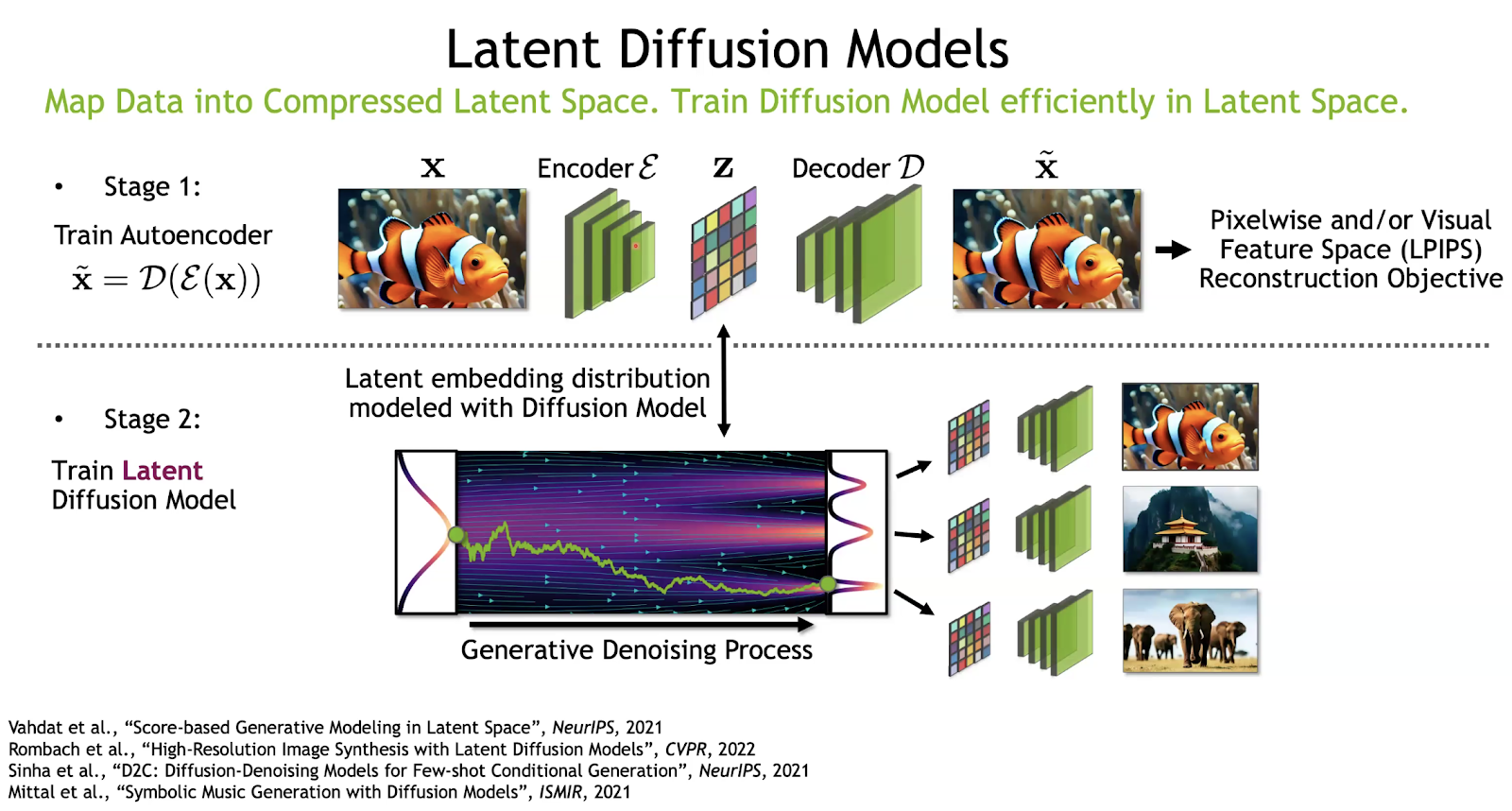

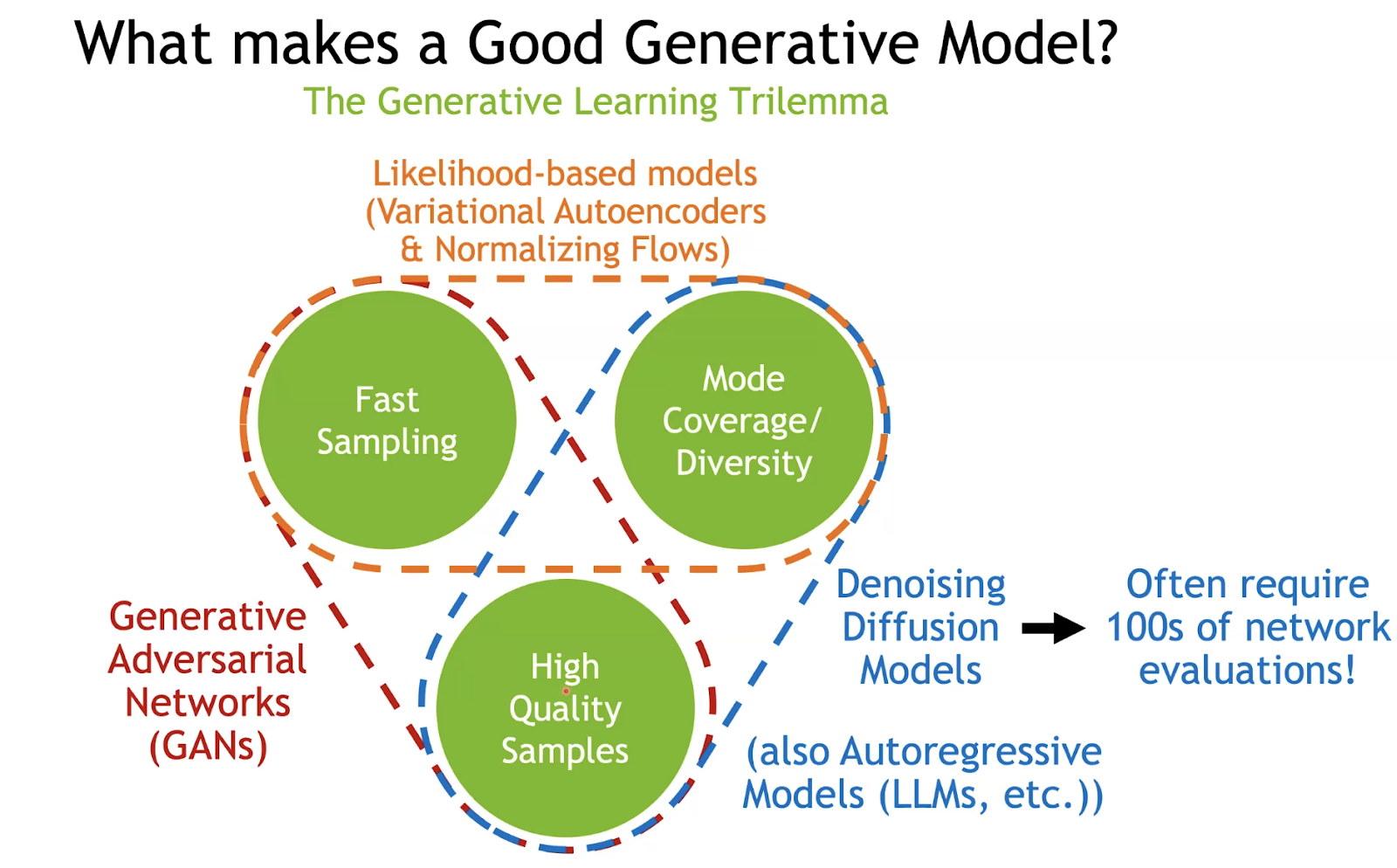
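For my own reference, here is a minimal sketch of the activation functions from Yuki M. Asano's lecture, using their standard textbook definitions: ELU with the common default alpha = 1.0, and the exact GELU form x·Φ(x) (many libraries also offer a tanh approximation). The sample inputs are arbitrary.

```python
import math

def relu(x: float) -> float:
    # Zero for negative inputs, identity for positive ones
    return max(0.0, x)

def elu(x: float, alpha: float = 1.0) -> float:
    # Smooth negative side that saturates at -alpha
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def softmax(logits: list[float]) -> list[float]:
    # Subtract the max for numerical stability; the result is unchanged
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.3f}  elu={elu(x):.3f}  gelu={gelu(x):.3f}")
print(softmax([2.0, 1.0, 0.1]))
```

ReLU, ELU and GELU act on single numbers inside the network, while softmax acts on a whole vector of scores and turns it into probabilities that sum to 1, which is why it usually sits at the output layer.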

Karsten Kreis from NVIDIA talked about generative models for vision applications

If we add a discriminator (as in GANs) to the above, the quality improves significantly

Example

He also briefly introduced GANs, VAEs, autoregressive models, energy-based models, and normalizing flows.
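As a reminder of what "adding a discriminator" means, this is the original GAN objective: a minimax game where the discriminator $D$ tries to tell real data $x$ from generated samples $G(z)$, and the generator $G$ tries to fool it. This is the standard textbook form, not necessarily the exact variant shown in the lecture:

$$
\min_{G} \max_{D} \;
\mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
+ \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]
$$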

Finally, he provided a quick intro to video diffusion models (like Sora).

That is all for today!

See you tomorrow :)