[Day 120] Starting Stanford's CS246: Mining Massive Datasets + MIT's Intro DL

Hello :)

Today is Day 120!

A quick summary of today:
- started MIT's Intro to Deep Learning 2024 course

Last night I saw a notification that MIT is going to livestream the first lecture of their 2024 Intro to Deep Learning course on YouTube, and this morning I decided to check what it's about. This is the official website, and it will provide lectures + homeworks. The lectures run from 29th April to 24th June, and the schedule is:

As a 'basics lover', I am excited to go over old and hopefully some new material, and to see MIT's take on teaching intro to DL.

The summary of the 1st lecture is below:

It started with where DL fits within AI and ML.

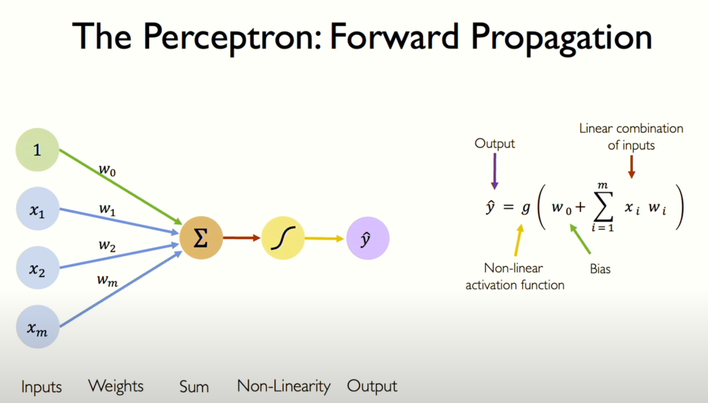

- Gave a general and very well put (as expected from MIT) explanation of a neuron/perceptron
- Provided an example of a simple neural network
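A minimal sketch of a single perceptron's forward pass, assuming a sigmoid activation g: the output is g(w0 + sum_i x_i * w_i), i.e. a weighted sum of the inputs plus a bias, passed through a non-linearity. The function names, weights and inputs below are hypothetical values chosen for illustration, not code from the lecture.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic activation g(z) = 1 / (1 + e^(-z)), squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def perceptron(x: list[float], w: list[float], w0: float) -> float:
    """Single neuron: bias + dot product of inputs and weights, then the non-linearity."""
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    z = w0 + sum(xi * wi for xi, wi in zip(x, w))  # linear combination
    return sigmoid(z)                              # non-linear activation

# Hypothetical example: two inputs, hand-picked weights.
# z = 1 + (-1 * 3) + (2 * -2) = -6, so the output is sigmoid(-6), close to 0.
print(perceptron([-1.0, 2.0], [3.0, -2.0], w0=1.0))
```

A neural network is then just many of these units stacked in layers, with the outputs of one layer used as the inputs of the next.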

Also, how does the network know how wrong it is? Thanks to the loss function, which measures how far its predictions are from the true values.
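To make the loss concrete, here is a small sketch of two common choices: binary cross-entropy for 0/1 classification and mean squared error for regression. These are my picks for illustration; the function names and toy numbers are hypothetical, and the lecture's notation may differ.

```python
import math

def binary_cross_entropy(y_true: list[int], y_pred: list[float], eps: float = 1e-12) -> float:
    """Average negative log-likelihood for 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def mean_squared_error(y_true: list[float], y_pred: list[float]) -> float:
    """Average squared difference between targets and predictions."""
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)

# Confident, mostly correct predictions give a small loss.
print(binary_cross_entropy([1, 0], [0.9, 0.2]))
print(mean_squared_error([3.0, 5.0], [2.5, 5.5]))  # 0.25
```

The worse the predictions, the larger these numbers get, which is exactly the signal training tries to push down.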

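However, using the whole dataset to compute every update of the weights W (Gradient Descent) might not be the best idea, especially with the amount of data available these days. That is where Stochastic Gradient Descent comes in: it estimates the gradient from a single example or a small mini-batch, so each update is much cheaper.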
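A minimal sketch of mini-batch SGD, assuming a toy 1-D linear model y_hat = w*x + b trained with mean squared error. Each step uses the gradient of a small random batch instead of the full dataset. The data, learning rate, batch size and epoch count are hypothetical choices for illustration.

```python
import random

def grad_mse(w: float, b: float, batch: list[tuple[float, float]]) -> tuple[float, float]:
    """Gradient of the MSE of y_hat = w*x + b, averaged over one batch."""
    n = len(batch)
    dw = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    db = sum(2 * (w * x + b - y) for x, y in batch) / n
    return dw, db

def sgd(data, lr: float = 0.01, batch_size: int = 4, epochs: int = 200, seed: int = 0):
    """Mini-batch SGD: shuffle every epoch, then update the weights once per batch."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for i in range(0, len(data), batch_size):
            dw, db = grad_mse(w, b, data[i:i + batch_size])
            w -= lr * dw  # step against the (noisy) gradient estimate
            b -= lr * db
    return w, b

# Hypothetical noise-free data generated from y = 2x + 1
data = [(x, 2 * x + 1) for x in [i / 10 for i in range(-20, 21)]]
w, b = sgd(data)
print(round(w, 3), round(b, 3))
```

Setting `batch_size=len(data)` turns this back into plain (full-batch) gradient descent, which makes the trade-off easy to compare.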

In the end, we were introduced to two of the most common regularization techniques: dropout and early stopping.
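A small sketch of both ideas, with hypothetical helper names. For dropout it assumes the common "inverted dropout" variant: during training each activation is zeroed with probability p and the survivors are scaled by 1/(1-p), so nothing changes at inference time. For early stopping it assumes a "patience" rule: stop once the validation loss has not improved for `patience` epochs and keep the best epoch's weights.

```python
import random

def dropout(activations: list[float], p: float, rng: random.Random, training: bool = True) -> list[float]:
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # no dropout at inference time
    keep = 1.0 - p
    return [a / keep if rng.random() >= p else 0.0 for a in activations]

def early_stopping_epoch(val_losses: list[float], patience: int) -> int:
    """Return the epoch to keep: stop once validation loss hasn't improved for `patience` epochs."""
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break  # validation loss stopped improving: likely starting to overfit
    return best_epoch

print(dropout([1.0, 1.0, 1.0, 1.0], p=0.5, rng=random.Random(0)))
# Hypothetical validation curve: best at epoch 2, then 3 epochs without improvement.
print(early_stopping_epoch([0.9, 0.7, 0.6, 0.62, 0.65, 0.7, 0.5], patience=3))  # 2
```

Note that in the example the later 0.5 is never reached: patience is a trade-off between stopping too early and wasting compute on an overfitting model.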

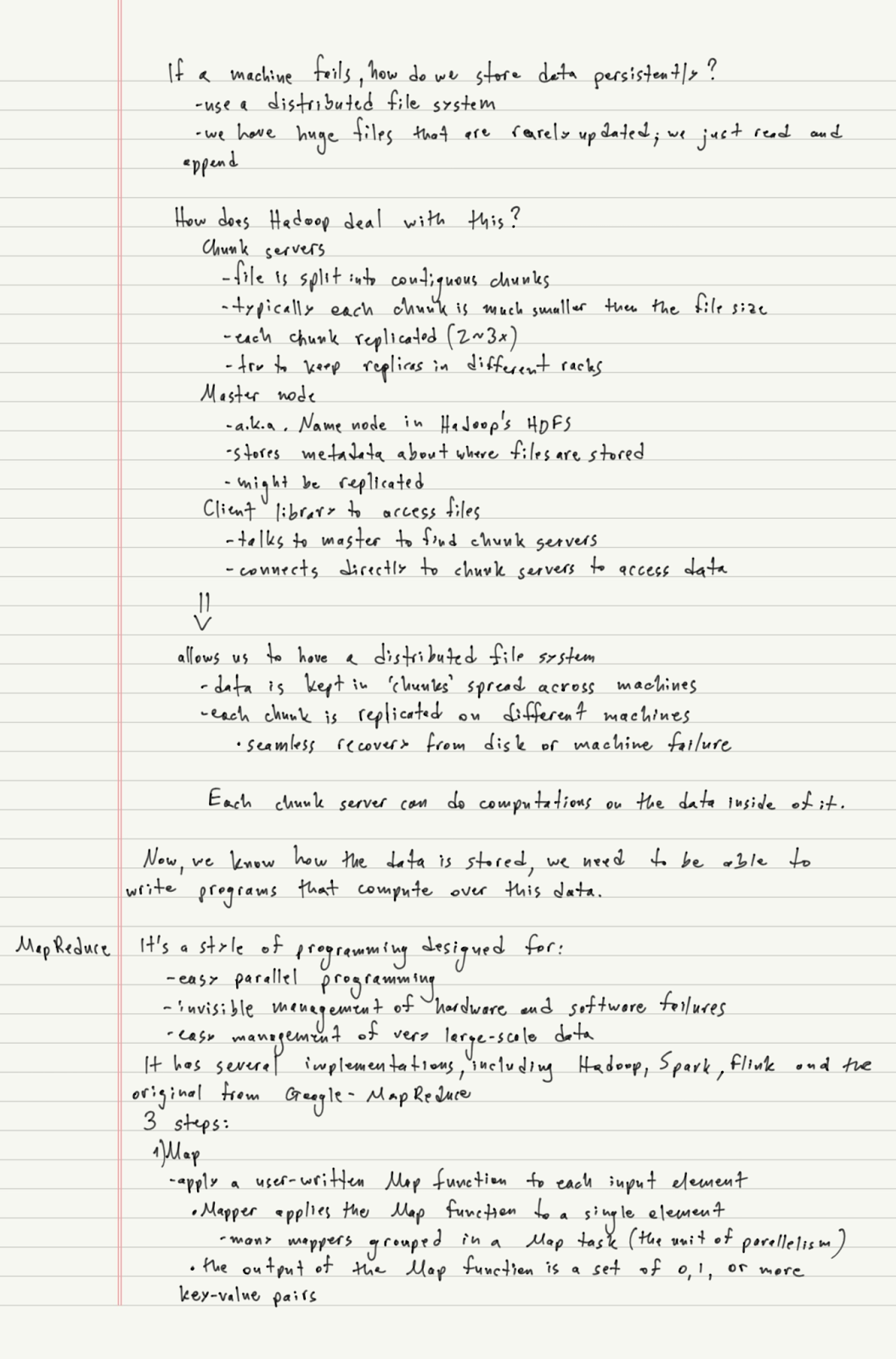

As for CS246: Mining Massive Datasets:

It had been on my list for a while. The reason I did not start it earlier was that I was not sure whether there were lecture videos for it (even without them I would have done it eventually, but video lectures add a little more than just reading slides/book chapters). Today I saw that there are lecture videos from winter 2019. Yes, that was about 5 years ago, but I compared the slides from the most recent offering (winter 2024) with the winter 2019 slides, and the differences are small and mostly towards the end of the course. For those parts I can watch the 2019 lecture and then read the newer slides by myself.

Today I covered lecture 1

Covered topics:

MapReduce, Spark, Spark's RDD, cost measures
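To recall how MapReduce splits work, here is a single-machine sketch of the classic word-count example: Map emits (word, 1) pairs, the framework groups pairs by key (the shuffle), and Reduce sums each group. This is only a local simulation with hypothetical function names, not Hadoop or Spark code. It also counts the intermediate pairs produced by Map, a rough proxy for how much data would be shuffled between Map and Reduce tasks, which is the kind of quantity the lecture's cost measures are concerned with.

```python
from collections import defaultdict
from itertools import chain

def map_fn(document: str):
    """Map: emit (word, 1) for every word in one input chunk."""
    for word in document.lower().split():
        yield word, 1

def shuffle(pairs):
    """Group by key; in a real MapReduce system the framework does this step."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_fn(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine all values that share the same key."""
    return key, sum(values)

def mapreduce_word_count(documents: list[str]) -> tuple[dict[str, int], int]:
    mapped = list(chain.from_iterable(map_fn(doc) for doc in documents))
    grouped = shuffle(mapped)
    counts = dict(reduce_fn(k, vs) for k, vs in grouped.items())
    return counts, len(mapped)  # word counts + number of intermediate pairs

docs = ["the cat sat", "the dog sat", "The end"]
counts, n_pairs = mapreduce_word_count(docs)
print(counts)   # {'the': 3, 'cat': 1, 'sat': 2, 'dog': 1, 'end': 1}
print(n_pairs)  # 8
```

In Spark the same pipeline is usually expressed on an RDD with `flatMap` (split lines into words), `map` (to `(word, 1)` pairs) and `reduceByKey` (sum per word), with the intermediate data kept in memory where possible instead of being written to disk between steps.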

I also registered for a new event hosted by Global AI for good in collaboration with Oxford University: MLx Generative AI (Theory, Agents, Products), on 22-24th August 2024. ^^

Topics to be covered:

- Advanced theoretical topics in representation learning (e.g., vision, language, multi-modal, …)

- Agentic AI (e.g., agentic reasoning and design patterns)

- Human+AI alignment

- Building Gen. AI products — from model.fit() to market.fit()

- Using SOTA foundation models (e.g., fine-tuning, RAG, RLHF, prompt engineering, …)

- Application of large frontier models in applied domains (e.g., medicine, finance, education, …)

That is all for today!

See you tomorrow :)