[Day 116] Reading some research + transferring posts to the new blog

Hello :)

Today is Day 116!

A quick summary of today:- read 4 research papers about graphs and LLMs

- started transferring blog posts to the new blog

Do Transformers Really Perform Bad for Graph Representation? (link)

Transformers dominate the field, but so far they have not been able to break into graph representation learning. The paper presents the ‘Graphormer’ which introduces several novel ideas with its architecture.

To better encode graph structural information, below are the 3 main ideas1. Centrality encoding

- In the original transformer, the attention distribution is calculated based on semantic correlation between nodes, which ignores node importance (like a highly followed celebrity node is very important and can easily affect a social network). The centrality encoding assigns each node 2 real-valued embedding vectors according to indegree and outdegree, and this way the model can capture both semantic correlation and node importance in its attention mechanism.

2. Spatial encoding

- Transformers are infamously powerful when it comes to sequential data, but in a graph - nodes are not arranged in a sequence. To give the model some info about the graph’s structure, the paper proposes spatial encoding. By providing spatial info about each node pair, the model can better learn the graph’s structure.

3. Edge encoding in the attention

- Along with nodes, edges can play a key part in understanding a graph’s udnerlying structure. They propose to add to the attention mechanism a term which incorporates edge features, enhancing the model's ability to capture the relationships between nodes more effectively. This method involves considering the edges connecting node pairs when estimating correlations, as opposed to solely relying on node features.

Implementation details

1. Graphormer layer - building upon the classic transformer encoder, they apply layer norm before the multi-head self-attention, and the FF blocks.

2. Special node - they introduce this special(VNode) which is connected to each individual node. Its representation is updated as normal, and in the last layer, the representation of the entire graph, would be the node feature of itself (VNode). By incorporating this special node into the Graphormer, the model gains access to global information about the graph, which can improve its performance in tasks such as graph classification, node classification, and graph generation.Results after experiments

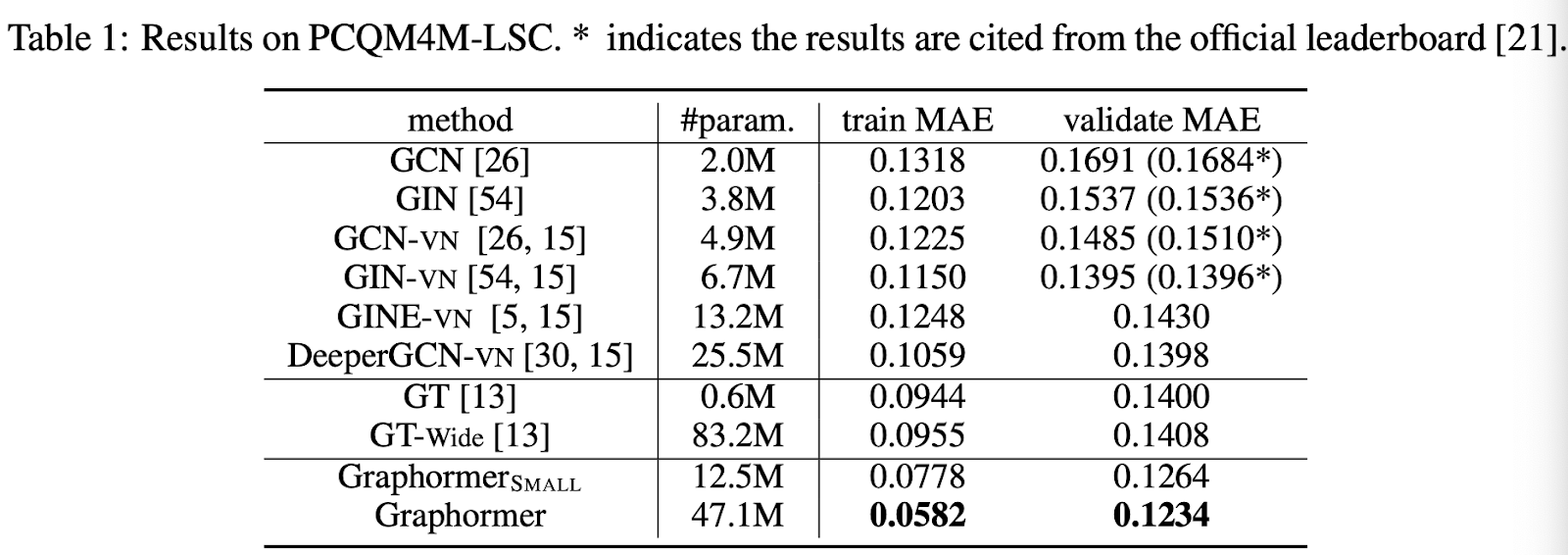

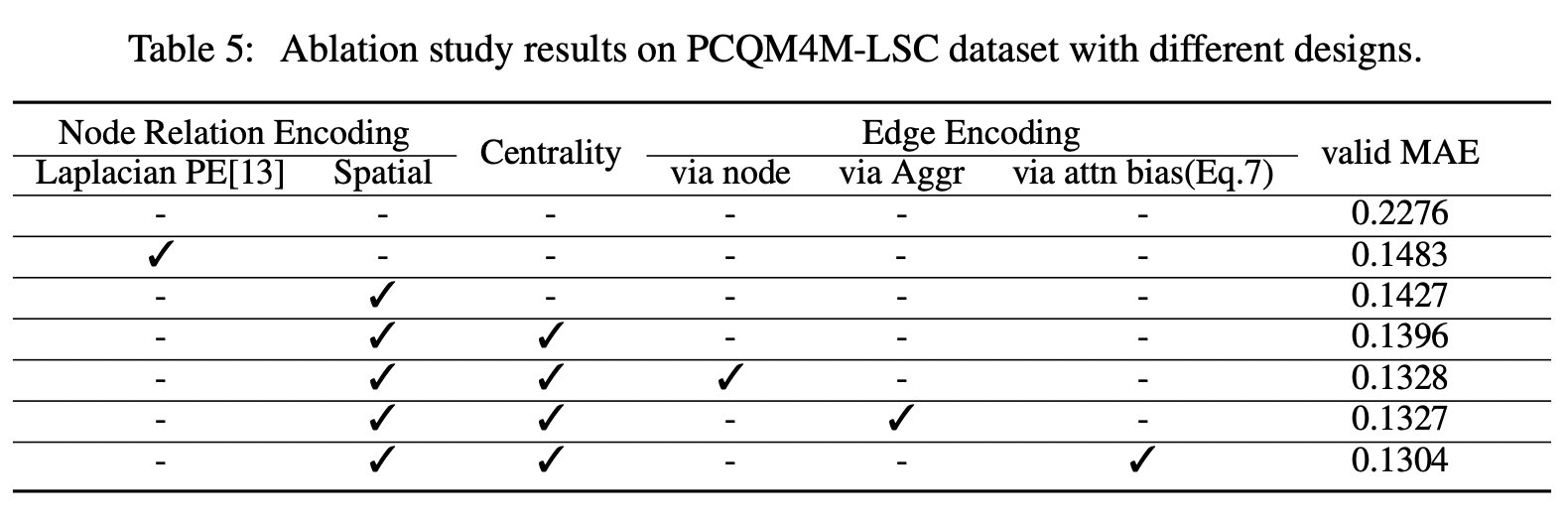

Results on Graphormer’s graph representation skillsAblation study to see effects of different parts of the Graphormer

Position-aware GNNs (link)

I covered this topic on Day 111 so there was not much new things.

Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention (link)

Infini-attention is introduced in this paper - a novel attention mechanism designed to address the limitations of standard Transformers when handling extremely long input sequences.

Traditional Transformer models struggle with long sequences due to the quadratic complexity of the attention mechanism in terms of memory and computation and this makes them inefficient for tasks requiring long-range context understanding. Infini-attention introduces some novel ideas that help battle this limitation:

1. Compressive memory - it stores and retrieves information efficiently, unlike the standard attention mechanism which discards previous states. This allows the model to maintain a history of context without exorbitant memory costs.

2. Mixed kind-of attention - combines local attention for fine-grained context within a segment and long-term linear attention using the compressive memory for global context across segments.

That is all for today!

See you tomorrow :)