[Day 115] Exploring HuggingFace's capabilities and submitting 3rd homework from the ML with Graphs course

Hello :)

Today is Day 115!

A quick summary of today:

- submitted 3rd homework of XCS224W: ML with Graphs

- explored different huggingface capabilities with DeepLearning.AI

As for the homework, we are not allowed to share anything from it. But I can happily share that I got full marks ^^

As for the huggingface tutorial, it showcased the different types of models that are available. Below is a summary.

Building a chat pipeline

Text translation

Text summarization

Zero-shot audio classifier
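A detail that matters for audio models is the sampling rate: if a recording has more samples per second than the model was trained on, the model reads the same samples as a longer clip. Here is a minimal sketch of that arithmetic. The 192 kHz and 16 kHz figures are assumptions picked for illustration (they are not from my notes), and `perceived_duration` is a hypothetical helper, not a library function.

```python
def perceived_duration(seconds: float, source_rate_hz: int, model_rate_hz: int) -> float:
    """Length of the clip as the model interprets it.

    The recording contains seconds * source_rate_hz samples. A model that
    assumes model_rate_hz samples per second reads those same samples as
    n_samples / model_rate_hz seconds of audio.
    """
    n_samples = seconds * source_rate_hz
    return n_samples / model_rate_hz


# Assumed rates for illustration only: a 192 kHz recording, a 16 kHz model.
stretched = perceived_duration(1.0, 192_000, 16_000)
print(f"1 second of audio looks like {stretched} seconds to the model")
```

Resampling the audio to the rate the model expects before running inference avoids this mismatch.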

Apparently, the model sees the audio differently - 1 second of high resolution audio appears to the model as if it is 12 seconds of audio.

Text to speech

Object detection

(code before the pic: od_pipe = pipeline("object-detection", "./models/facebook/detr-resnet-50"))
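To make the pipeline call above a bit more concrete, here is a rough sketch of how its output could be turned into a short text summary. It assumes the usual object-detection pipeline output, a list of dicts with `score`, `label` and `box` keys. `load_detector` and `summarize_detections` are hypothetical helper names, and the sample detections are made up for illustration.

```python
from collections import Counter


def load_detector(model_path: str = "./models/facebook/detr-resnet-50"):
    """Build the object-detection pipeline (transformers is imported lazily)."""
    from transformers import pipeline

    return pipeline("object-detection", model_path)


def summarize_detections(detections, min_score: float = 0.9) -> str:
    """Count confident detections per label, e.g. '2 x dog, 1 x person'."""
    counts = Counter(d["label"] for d in detections if d["score"] >= min_score)
    return ", ".join(f"{n} x {label}" for label, n in sorted(counts.items()))


# Made-up detections in the format the pipeline returns (not real model output).
sample = [
    {"score": 0.99, "label": "dog", "box": {"xmin": 10, "ymin": 20, "xmax": 110, "ymax": 220}},
    {"score": 0.95, "label": "dog", "box": {"xmin": 120, "ymin": 25, "xmax": 230, "ymax": 210}},
    {"score": 0.97, "label": "person", "box": {"xmin": 240, "ymin": 5, "xmax": 400, "ymax": 300}},
    {"score": 0.40, "label": "cat", "box": {"xmin": 0, "ymin": 0, "xmax": 30, "ymax": 30}},
]
print(summarize_detections(sample))
```

With a real image, the detections would come from something like `load_detector()(image)`, where `image` is a PIL image.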

We can use gradio as a sample interface

I passed a picture of mine to check haha

Image captioning

Example image

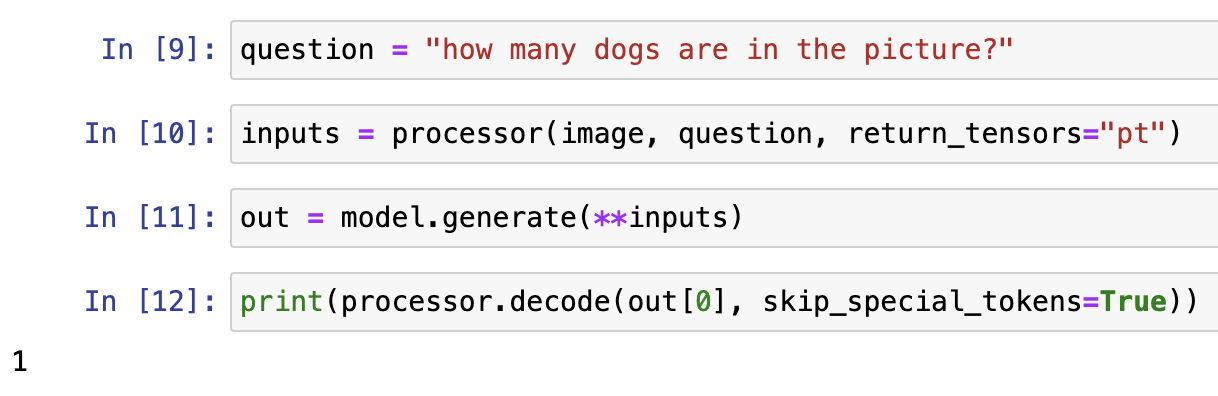

Using the dog and woman pic again for multimodal QA

Zero-shot image classification

Giving the labels "a photo of a cat" and "a photo of a dog", we can get the model's probability for each label.
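A small sketch of where those per-label probabilities can come from, assuming the model produces one image-text similarity score per label and normalizes them with a softmax (as CLIP-style models do). The scores below are hypothetical numbers, not output from the tutorial's model.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["a photo of a cat", "a photo of a dog"]
logits = [18.0, 24.0]  # hypothetical similarity scores, one per label

probs = dict(zip(labels, softmax(logits)))
for label, p in probs.items():
    print(f"{label}: {p:.4f}")
```

Because of the softmax, the probabilities only compare the labels we provide against each other; adding or removing a label changes all of them.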

Deploying a model

To deploy a model on Hugging Face, we need to:

1. Create space

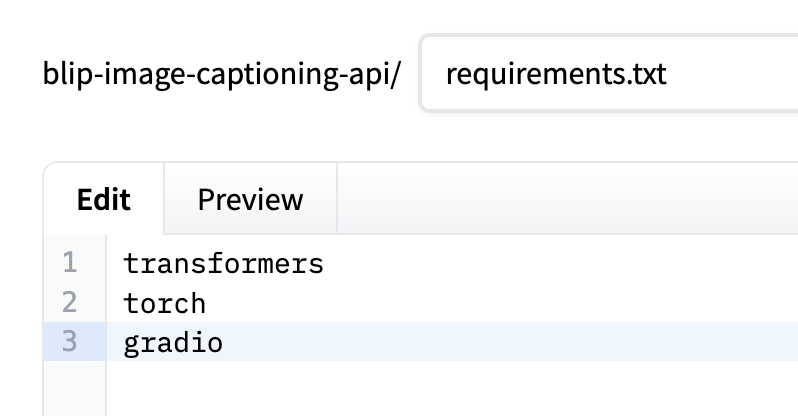

2. Create requirements.txt and app.py files

A few minutes after I created the two files, the model was deployed and ready to be used.
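For reference, here is a rough sketch of what the `app.py` file could look like. It is not the exact file from the tutorial. It assumes the deployed model is an image captioner served through a gradio interface, the model id `Salesforce/blip-image-captioning-base` is my assumption, and `first_caption` and `build_demo` are hypothetical helper names. The matching `requirements.txt` would list the packages the app imports (here `transformers`, `torch` and `gradio`).

```python
def first_caption(pipeline_output) -> str:
    """Pull the caption text out of image-to-text pipeline output.

    The pipeline returns a list like [{"generated_text": "..."}].
    """
    return pipeline_output[0]["generated_text"]


def build_demo(model_id: str = "Salesforce/blip-image-captioning-base"):
    """Create the gradio interface (heavy imports happen only when called)."""
    import gradio as gr
    from transformers import pipeline

    # Assumed model id; swap in whichever captioning model you deploy.
    captioner = pipeline("image-to-text", model=model_id)

    def caption(image):
        return first_caption(captioner(image))

    return gr.Interface(fn=caption, inputs=gr.Image(type="pil"), outputs="text")
```

In the Space itself, `app.py` would end with `build_demo().launch()` so the interface starts when the Space boots.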

In huggingface, the interface shows

I tested it with 2 images

Output: 'a stone path'

Output: ‘a group of dogs sitting in a row’

The link is here, but I believe it has a limited runtime.

It was a useful tutorial showcasing the code needed to run various open source models and perform various tasks.

That is all for today!

See you tomorrow :)