[Day 110] Learning about Graph Transformers

Hello :)

Today is Day 110!

A quick summary of today: I watched a hackathon held by the best CS high school in Bulgaria, TUES

Technical School Electronic Systems (TUES) unfortunately does not have an English website. This is a link to their YouTube channel, where student video projects are uploaded.

Every year, they hold a hackathon for ~3 days, and I watched a recording of this year's, held last month. Understandably, many projects involve AI, and one of them, from a team of school graduates, is below (my best friend is on that team. I spotted him by chance, I did not know he was participating :D)

Essentially, it is a knowledge graph built from laws, bills and policies. A user can browse through nodes (different laws), find connections and references to other nodes, and also ask questions in a panel on the right. When an answer is given, the user is also pointed to the nodes relevant to the GPT response.

There were many projects in the finals (it was a ~10hr stream), and I think the theme was projects related to the law (like my friend's) or to helping the environment, because there was one that detects whether a body of water is clean or contaminated with petrol or other chemicals.

As for Graph Transformers

I checked my XCS224W: ML with Graphs course and the topic of Graph Transformers was not included, so I went to check the most recent official CS224W: ML with Graphs course. There were slides on it, so I decided to go over them and learn about GTs.

Covered topics:

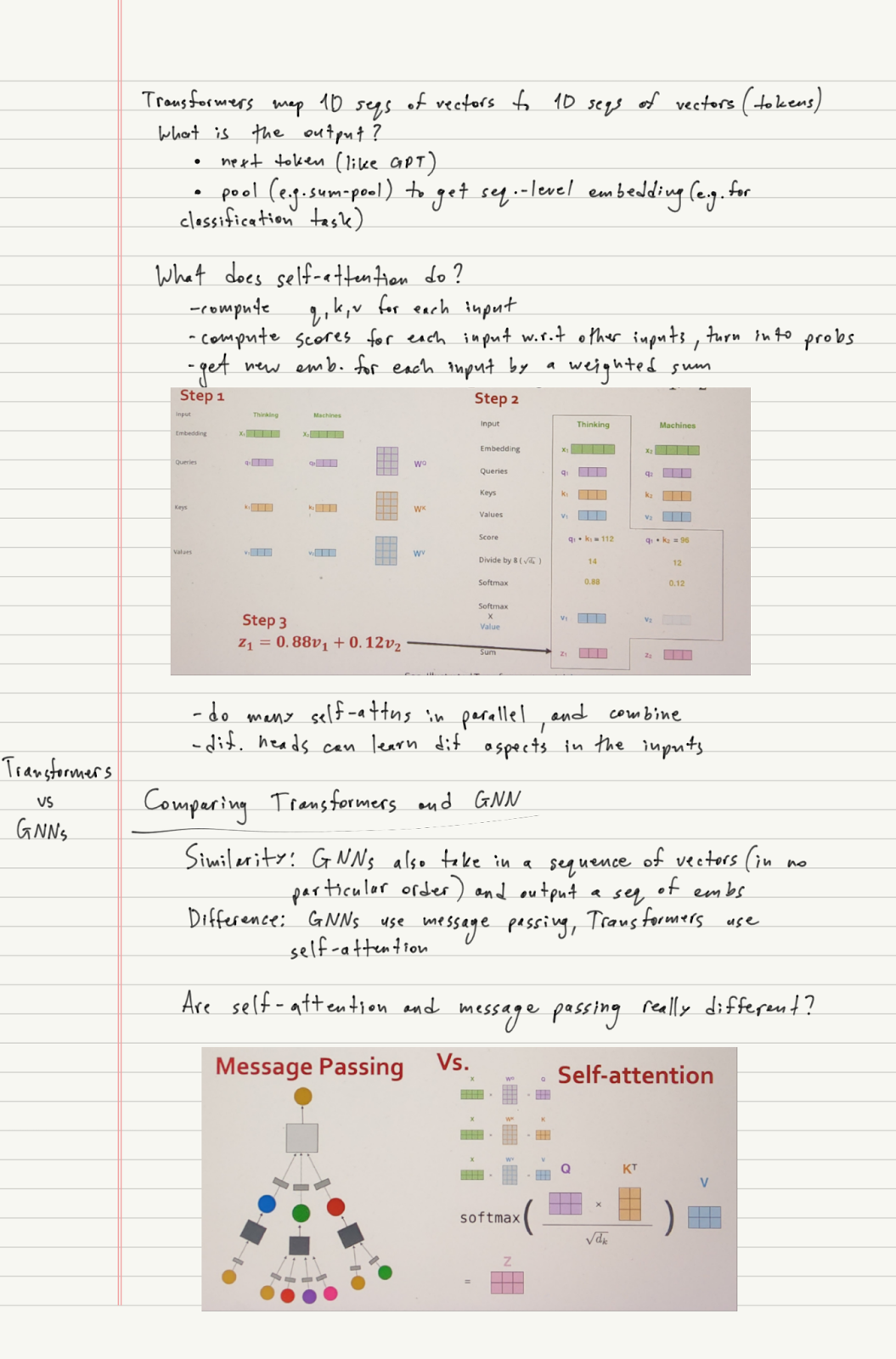

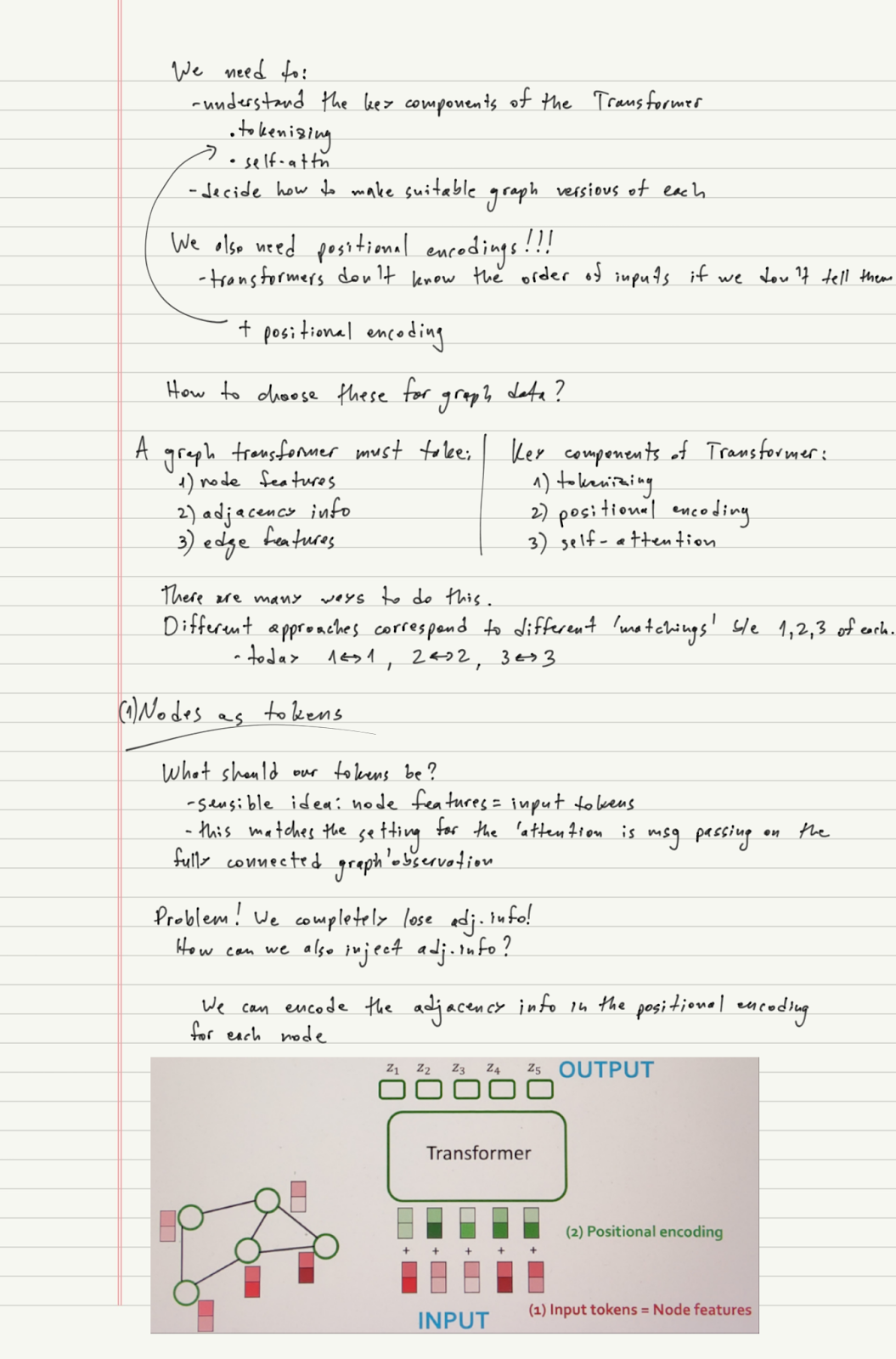

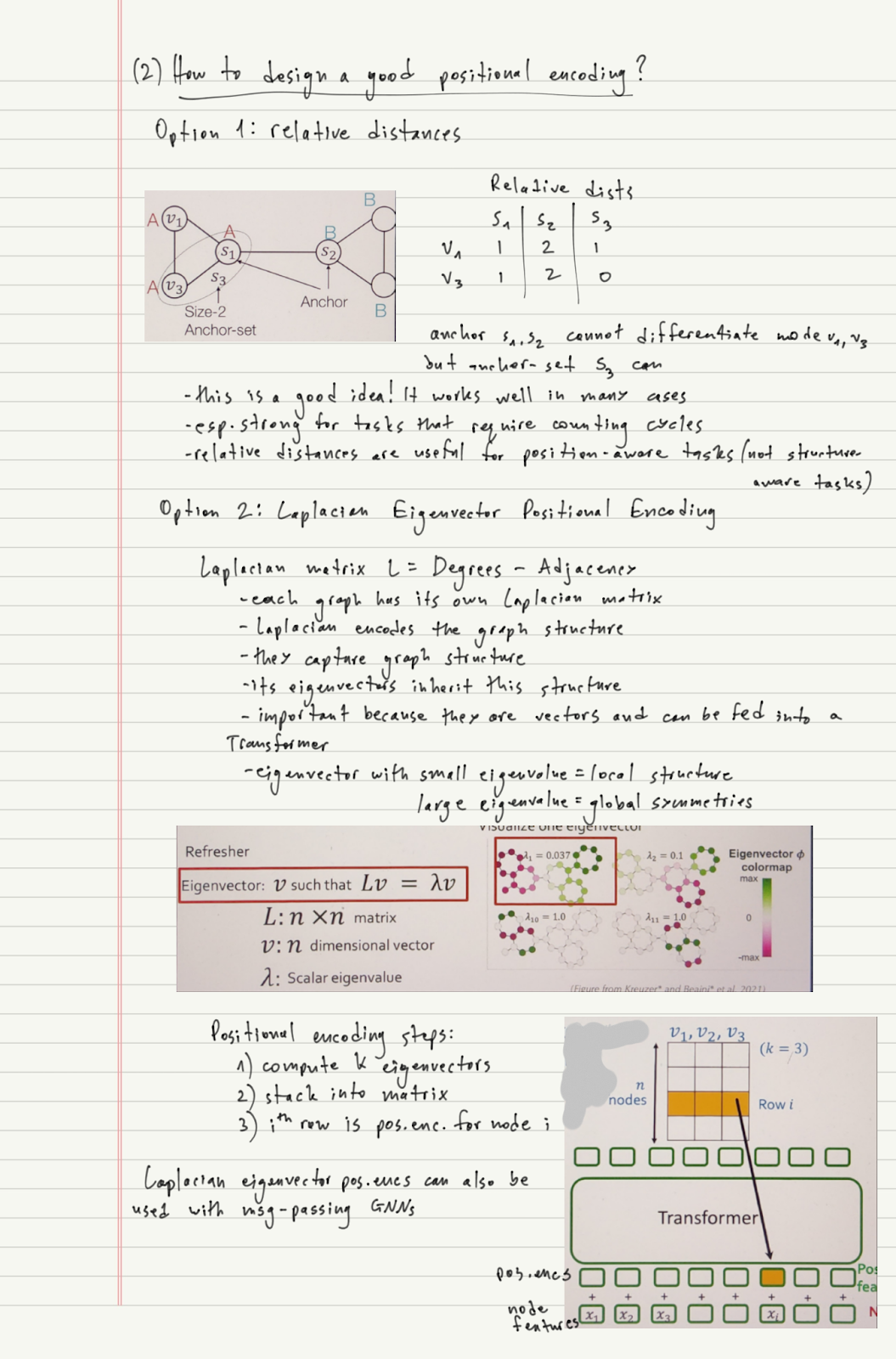

Transformers vs GNNs, self-attention vs message passing, designing graph transformers (nodes as tokens, positional encoding, edge features in self-attention), Laplacian positional encodings, eigenvector sign ambiguity, SignNet
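To make the Laplacian positional encoding and sign ambiguity topics a bit more concrete, here is a minimal NumPy sketch. It is not taken from the slides: the helper names `laplacian_pe`, `phi` and `sign_invariant` are made up for illustration, it assumes a small connected, undirected graph, and it uses the unnormalized Laplacian L = D - A (a normalized Laplacian is also a common choice). The last part follows the SignNet idea of combining phi(v) and phi(-v) so the result does not depend on the sign of each eigenvector; in SignNet phi is a learned network, here it is just a fixed toy function.

```python
import numpy as np


def laplacian_pe(adj: np.ndarray, k: int):
    """Return the k smallest non-trivial eigenpairs of L = D - A.

    Assumes `adj` is the symmetric adjacency matrix of a connected graph,
    so exactly one eigenvalue is zero (the constant eigenvector).
    """
    lap = np.diag(adj.sum(axis=1)) - adj
    # eigh is meant for symmetric matrices; eigenvalues come back ascending.
    eigvals, eigvecs = np.linalg.eigh(lap)
    # Drop the first (constant) eigenvector, keep the next k as node features.
    return eigvals[1:k + 1], eigvecs[:, 1:k + 1]


# Toy stand-in for the learned network phi (weights are arbitrary constants).
W = np.array([0.5, -1.0, 2.0])
B = np.array([0.1, 0.2, -0.3])


def phi(pe: np.ndarray) -> np.ndarray:
    """Map each entry of each eigenvector to a 3-dim feature: (n, k) -> (n, k, 3)."""
    return np.tanh(pe[..., None] * W + B)


def sign_invariant(pe: np.ndarray) -> np.ndarray:
    """SignNet-style combination: phi(v) + phi(-v) is unchanged if v -> -v."""
    return phi(pe) + phi(-pe)


# Path graph 0 - 1 - 2 - 3 (hypothetical example graph).
adj = np.array(
    [
        [0, 1, 0, 0],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [0, 0, 1, 0],
    ],
    dtype=float,
)

vals, pe = laplacian_pe(adj, k=2)  # pe[i] is the positional encoding of node i

# Sign ambiguity: flipping an eigenvector gives an equally valid eigenvector.
flips = np.array([-1.0, 1.0])  # flip the sign of the first eigenvector only
pe_flipped = pe * flips

print("eigenvalues:", vals)
print("raw features change under a flip:", not np.allclose(phi(pe), phi(pe_flipped)))
print("sign-invariant features agree:", np.allclose(sign_invariant(pe), sign_invariant(pe_flipped)))
```

Each row of `pe` would be added to (or concatenated with) the node features before they go into the Transformer as tokens. Because the eigensolver can return either `v` or `-v`, feeding `pe` in directly means the same graph can produce different inputs, which is the problem the sign-invariant combination is meant to address.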

That is all for today!

See you tomorrow :)