[Day 101] Doing the Google Cloud Digital Leader Learning Path

Hello :)

Today is Day 101!

A quick summary of today:

After yesterday's failed attempt to deploy an LLM to production, I wanted to learn a bit more about the cloud platforms involved. The top 3 are GCP, Azure and AWS. In my previous job at Lloyds Banking Group I heard of many people using GCP (and some using Azure), and I also know that AWS is very popular. I decided to check out what kind of learning paths GCP and AWS have, and I found GCP's Digital Leader Learning Path and AWS's Cloud Practitioner (Foundational). Both lead to a certification.

GCP's Digital Leader Learning Path covers the following topics:

- Digital Transformation with Google Cloud

- Exploring Data Transformation with Google Cloud

- Innovating with Google Cloud Artificial Intelligence

- Modernize Infrastructure and Applications with Google Cloud

- Trust and Security with Google Cloud

- Scaling with Google Cloud Operations

Below are my notes.

The cloud

The cloud refers to a network of data centers that store and process information accessible via the internet. It replaces the traditional setup of managing IT infrastructure by combining software, servers, networks, and security into one accessible entity. Cloud technology supports digital transformation by offering scalable, on-demand computing resources through various implementations, including on-premises, private cloud, public cloud, hybrid cloud, and multicloud. On-premises infrastructure involves hosting hardware and software on-site, while private cloud dedicates infrastructure to a single organization. Public cloud services are managed by third-party providers and shared among multiple organizations. Hybrid cloud combines different environments, like public and private clouds, while multicloud involves using multiple public cloud providers simultaneously. Most organizations today mix these approaches, combining public and private clouds, often from more than one provider, for flexibility and efficiency.

Its benefits

- scalability

- flexibility

- agility

- strategic value

- security

- data management

- technology infrastructure

- hybrid workplace

- security

- sustainability

- Private Cloud: Involves virtualized servers in an organization's data centers or those of a private cloud provider, offering benefits like self-service, scalability, and elasticity. It's preferred when significant infrastructure investments have been made or for regulatory compliance

- Hybrid Cloud: Combines private and public cloud environments, allowing applications to run across different environments. It's a common setup today, enabling organizations to leverage both on-premises servers and public cloud services

- Multicloud: Involves using multiple public cloud providers, leveraging the strengths of each. Organizations choose multicloud to access the latest technologies, modernize at their own pace, improve ROI, enhance flexibility, and ensure regulatory compliance

- On-premises: It's like owning a car. You're responsible for its usage and maintenance, and upgrading means buying a new car, which can be time-consuming and costly

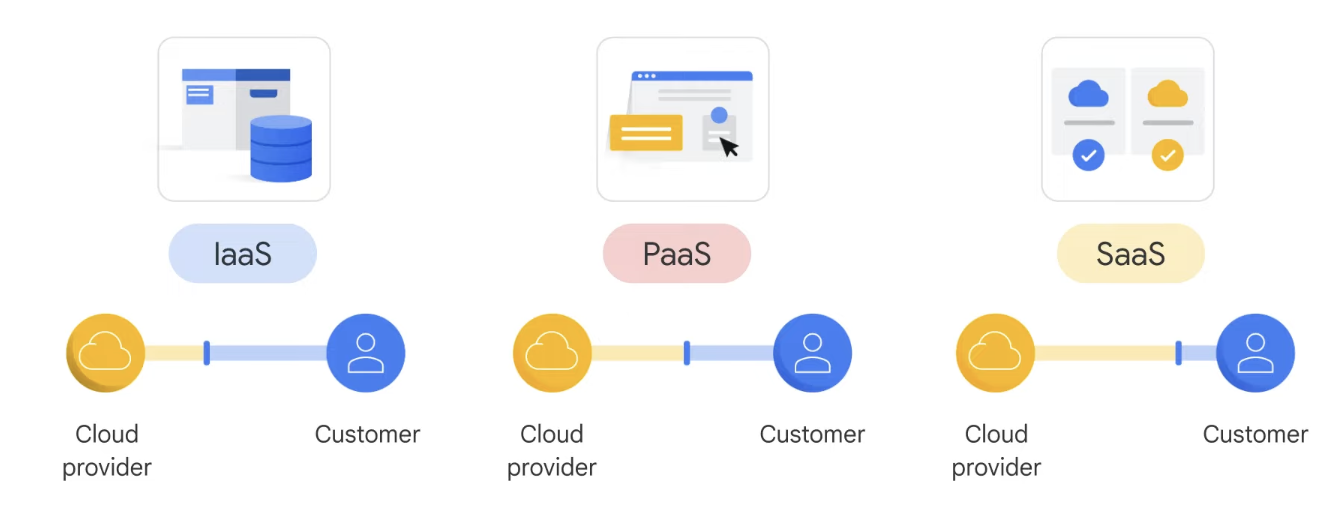

- IaaS (Infrastructure as a Service): It's like leasing a car. You choose a car and drive it wherever you want, but the car isn't yours. Upgrading is easier as you can simply lease a new car

- PaaS (Platform as a Service): It's like taking a taxi. You provide specific directions, like the code, but the driver (platform provider) does the actual driving (manages the underlying infrastructure)

- SaaS (Software as a Service): It's like going by bus. You still get access to transport (software), but it's less customizable. Buses have designated routes, and you share the space with other passengers (users)

- data genesis

- data collection

- data processing

- data storage

- data analysis

- data activation

ML options on GCP

Other full AI solutions

- Contact Center AI

- Document AI

- Discovery AI for retail

- Cloud Talent Solution

Cloud migration terms

- Workload: A specific application, service, or capability that can be run in the cloud or on premises. Examples include containers, databases, and virtual machines

- Retire: Removing a workload from a platform because it is no longer needed, not cost-effective, not secure, or not compatible with a specific platform

- Retain: Intentionally keeping a workload, often on premises or in a hybrid cloud environment, under the business's management without full cloud provider control

- Rehost: Migrating a workload to the cloud without altering its code or architecture, also known as 'lift and shift'

- Replatform: Migrating a workload to the cloud while making some changes to its code or architecture, often referred to as 'move and improve'

- Refactor: Changing the code of a workload, possibly to utilize cloud-based microservices or serverless architecture, aiming for efficiency, scalability, or security improvements

- Reimagine: Rethinking how an organization uses technology, particularly cloud computing, to achieve its business goals, potentially involving reconsideration of cloud strategy and adoption of technologies like AI and ML for efficiency, cost reduction, and agility improvements

- Total Cost of Ownership (TCO) Reduction: Cloud computing saves money by eliminating the need for purchasing and maintaining physical infrastructure. Pay-as-you-go models and long-term commitment discounts further reduce costs

- Scalability: Cloud offers the ability to easily scale resources up or down to meet changing demand without significant upfront investments

- Reliability: Cloud providers offer high reliability and uptime through redundant data centers and automated problem detection and resolution

- Security: Cloud providers ensure data security through features like encryption, identity and access management, network security, and real-time threat detection and response

- Flexibility: Organizations can choose and adapt cloud services according to their needs, such as increasing storage space or adding new services

- Abstraction: Cloud providers handle infrastructure management, allowing customers to focus on their applications without worrying about underlying infrastructure details. This abstraction also enables access to a wide range of services and technologies
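
To make the TCO and scalability points above a bit more concrete, here is a minimal sketch comparing capacity that is provisioned for peak demand all day with capacity that follows demand hour by hour. Every number and name in it (the hourly price, the demand profile, `fixed_cost`, `pay_as_you_go_cost`) is a made-up assumption for illustration, not real Google Cloud pricing. It also assumes the same unit price in both cases, which is a simplification.

```python
# Hypothetical figures for illustration only; not real cloud pricing.
PRICE_PER_SERVER_HOUR = 0.10  # assumed cost of one server for one hour

# Assumed demand over one day: servers needed in each of 24 hours.
hourly_demand = [2] * 8 + [10] * 4 + [4] * 12


def fixed_cost(demand, price):
    """Cost when capacity is sized for peak demand for the whole period."""
    peak = max(demand)
    return peak * len(demand) * price


def pay_as_you_go_cost(demand, price):
    """Cost when capacity is scaled up and down to match demand each hour."""
    return sum(demand) * price


fixed = fixed_cost(hourly_demand, PRICE_PER_SERVER_HOUR)
elastic = pay_as_you_go_cost(hourly_demand, PRICE_PER_SERVER_HOUR)

print(f"Provisioned for peak: {fixed:.2f}")
print(f"Pay-as-you-go:        {elastic:.2f}")
```

With this assumed demand profile, paying only for what is used costs less than half of sizing everything for the busiest four hours. The gap shrinks as demand gets flatter.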

Google Compute Engine is an Infrastructure as a Service (IaaS) offering from Google Cloud Platform (GCP). It allows users to create and manage virtual machines (VMs) on Google's infrastructure. Compute Engine provides scalable, high-performance virtual machines that can run various workloads, from simple web applications to complex data processing tasks

Essential Cloud Security Terms and Concepts

- Privileged Access Security Model: Grants specific users broader access to resources, but misuse can pose risks. Requires careful management and monitoring

- Least Privilege Security Principle: Advocates granting users only the access needed for their job responsibilities, reducing the risk of unauthorized access to sensitive data

- Zero-Trust Architecture: Assumes no user or device can be trusted by default. Requires authentication and authorization before accessing resources, ensuring robust security

- Security by Default: Integrates security measures into systems and applications from the initial stages of development, establishing a strong security foundation in Cloud environments

- Security Posture: Evaluates an organization's overall security status in its Cloud environment by assessing security controls, policies, and practices

- Cyber Resilience: The ability to withstand and recover quickly from cyber attacks by identifying, assessing, and mitigating risks, responding to incidents effectively, and recovering from disruptions quickly

- Data Sovereignty: Refers to the legal concept that data is subject to the laws and regulations of the country where it resides. For example, GDPR in the EU sets data protection rules for the personal data of individuals in the EU

- Data Residency: Refers to the physical location where data is stored or processed. Some countries require data to be stored within their borders to ensure compliance with local laws
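
The least-privilege and zero-trust ideas above can be sketched as a default-deny permission check. This is only a toy illustration: the role names, the actions, and the `is_allowed` helper are hypothetical and are not Google Cloud IAM roles or APIs.

```python
# Hypothetical roles and permissions, for illustration only.
# Each role gets only the actions it needs (least privilege).
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "delete"},
}


def is_allowed(role, action):
    """Default-deny check: unknown roles and unlisted actions are refused."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("viewer", "read"))    # True
print(is_allowed("viewer", "delete"))  # False: not granted to this role
print(is_allowed("guest", "read"))     # False: unknown role, nothing is trusted by default
```

The key design choice is that access is refused unless it has been explicitly granted, rather than granted unless it has been explicitly refused.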

Operational excellence and reliability at scale

Service level indicators, objectives and agreements
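
As a rough illustration of how these three fit together: an SLI is a measurement of how the service is behaving, an SLO is the target set for that measurement, and an SLA is the commitment made to customers about it. Below is a minimal sketch with made-up request counts and a made-up 99.9% target, not figures from any real service.

```python
# Hypothetical numbers for illustration only.
total_requests = 1_000_000
successful_requests = 999_500
slo_target = 0.999  # assumed objective: 99.9% of requests succeed

# SLI: the measured fraction of successful requests.
sli = successful_requests / total_requests

# Error budget: how many failures the SLO tolerates in this window.
allowed_failures = round(total_requests * (1 - slo_target))
actual_failures = total_requests - successful_requests

print(f"SLI: {sli:.4%}")
print(f"SLO met: {sli >= slo_target}")
print(f"Error budget used: {actual_failures} of {allowed_failures} failures")
```

An SLA would usually be set a little looser than the internal SLO, so the team notices a problem before the customer-facing commitment is broken.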

That is all for today!

See you tomorrow :)