[Day 100] Embeddings in practice + reading a couple of research papers + trying to deploy an LLM in production

Hello :)

Today is Day 100!

A quick summary of today:

- finished Vicki Boykis' 'What are embeddings?'

- read 2 papers:

  - Graph Convolutional Neural Networks for Web-Scale Recommender Systems (Ying et al., 2018)

  - TwHIN: Embedding the Twitter Heterogeneous Information Network for Personalized Recommendation (El-Kishky et al., 2022)

- tried to deploy a PDF chat app using Streamlit (spoiler: failed)

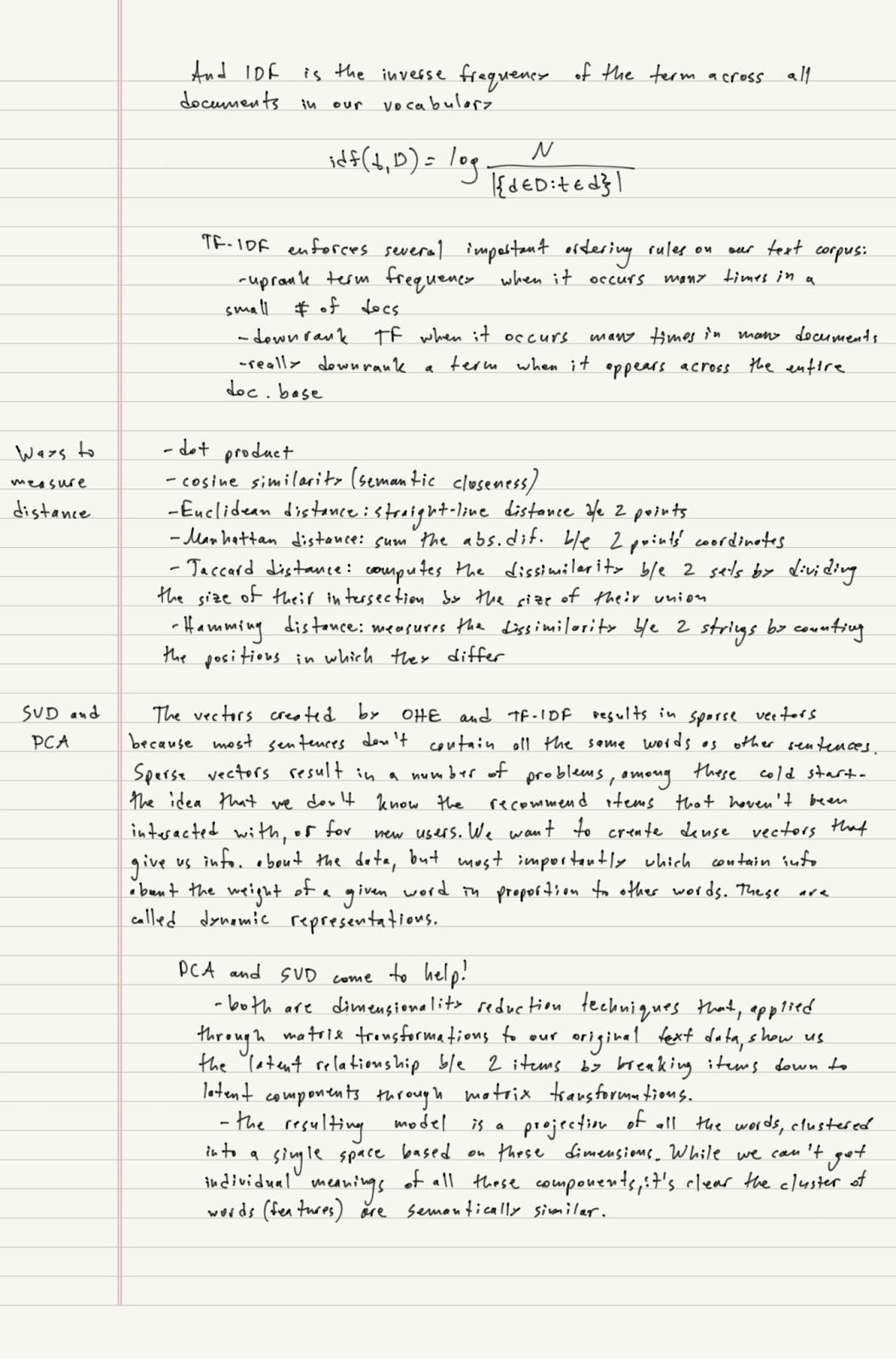

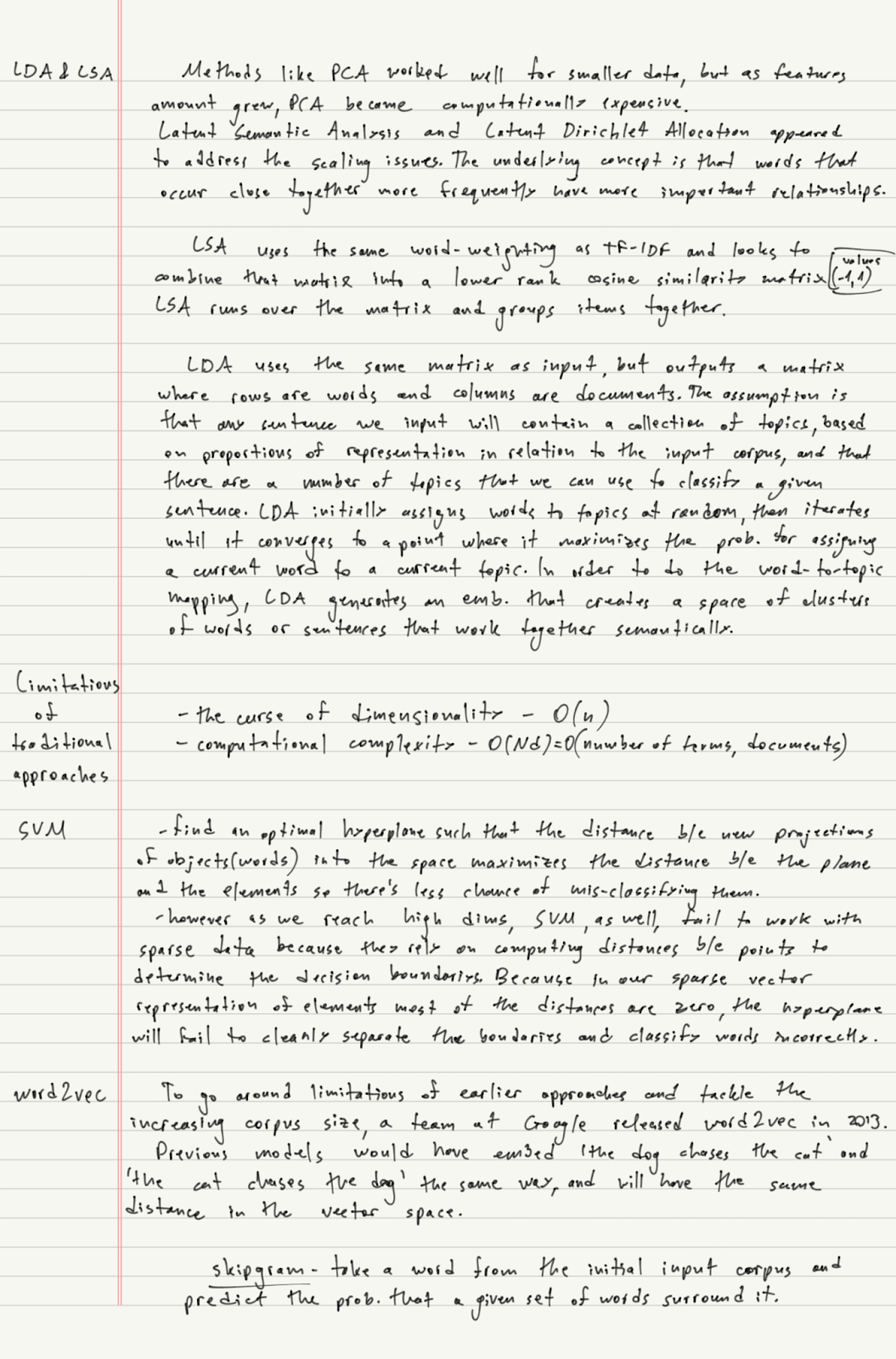

My full notes from Vicki Boykis' 'What are embeddings?' book

I came across the research papers below in Vicki Boykis' book and read them because they reference GNNs.

Graph Convolutional Neural Networks for Web-Scale Recommender Systems (Ying et al., 2018)

The paper describes PinSage, a large-scale deep recommendation engine developed and deployed at Pinterest. It tackles the challenge of scaling deep neural networks for graph-structured data to web-scale recommendation tasks with huge numbers of users and items (pins, i.e. images, in Pinterest's case). PinSage uses an efficient Graph Convolutional Network (GCN) algorithm that combines random walks and graph convolutions to generate node (item) embeddings that incorporate both graph structure and node feature information.
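The idea of using random walks to decide which neighbors a convolution should look at can be sketched as follows. This is a minimal, stdlib-only illustration on a made-up toy pin/board graph, not PinSage's implementation: the helper name `importance_neighbors`, the walk parameters, and the choice to not count returns to the start node are all assumptions. Short random walks are run from a node, visits are counted, and the top-T most visited nodes (with normalized visit counts as importance weights) become that node's neighborhood.

```python
import random
from collections import Counter

def importance_neighbors(graph, start, num_walks=200, walk_length=3, top_t=3, seed=0):
    """Hypothetical helper: pick a node's neighborhood via random walks.

    Returns up to top_t (node, weight) pairs, where weight is the node's
    visit count normalized over the selected nodes (weights sum to 1).
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    visits = Counter()
    for _ in range(num_walks):
        node = start
        for _ in range(walk_length):
            neighbors = graph.get(node, [])
            if not neighbors:
                break  # dead end: stop this walk early
            node = rng.choice(neighbors)
            if node != start:  # assumption: don't count returns to the start node
                visits[node] += 1
    top = visits.most_common(top_t)  # most visited first
    total = sum(count for _, count in top)
    return [(node, count / total) for node, count in top]

# Toy bipartite graph: pins connect to the boards they were saved to.
graph = {
    "pin_a": ["board_1", "board_2"],
    "pin_b": ["board_1"],
    "pin_c": ["board_2", "board_3"],
    "pin_d": ["board_3"],
    "board_1": ["pin_a", "pin_b"],
    "board_2": ["pin_a", "pin_c"],
    "board_3": ["pin_c", "pin_d"],
}

for node, weight in importance_neighbors(graph, "pin_a"):
    print(f"{node}: {weight:.2f}")
```

Because top_t is fixed, every node gets a neighborhood of bounded size no matter how many edges it has, which is one reason this style of neighborhood sampling scales to huge graphs.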

Some of the key points from the paper:

- scalability: PinSage is trained on 7.5 billion examples on a graph with 3 billion nodes (representing pins and boards) and 18 billion edges, making it one of the largest applications of deep graph embeddings

- efficiency: PinSage leverages on-the-fly convolutions, producer-consumer minibatch construction, and efficient MapReduce inference to improve scalability

- innovations: the model introduces new techniques such as constructing convolutions via random walks, importance pooling (compared against mean and max pooling), and curriculum training, which improve the quality of the learned representations and lead to large performance gains in downstream recommender system tasks

- deployment and evaluation: PinSage is deployed for recommendation tasks at Pinterest and shows state-of-the-art performance compared to other scalable deep content-based recommendation algorithms in offline metrics, controlled user studies, and A/B tests
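Importance pooling can be sketched as one simplified convolution step: transform each neighbor's embedding, take a weighted mean using the random-walk importance weights, concatenate the result with the node's own embedding, transform again, and L2-normalize. This loosely follows the paper's convolve step, but the function names, the toy 2-d vectors and weight matrices, and the dropped bias terms are all assumptions; in a real model Q and W are learned during training.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def relu(vector):
    return [max(0.0, x) for x in vector]

def importance_pool(vectors, weights):
    """Weighted mean of vectors; uniform weights give plain mean pooling."""
    total = sum(weights)
    dim = len(vectors[0])
    return [sum(w * v[i] for v, w in zip(vectors, weights)) / total for i in range(dim)]

def convolve(self_vec, neighbor_vecs, weights, Q, W):
    """One simplified graph convolution step (bias terms omitted)."""
    transformed = [relu(matvec(Q, v)) for v in neighbor_vecs]  # per-neighbor transform
    pooled = importance_pool(transformed, weights)             # aggregate neighborhood
    h = relu(matvec(W, self_vec + pooled))                     # concat self + neighborhood
    norm = math.sqrt(sum(x * x for x in h))
    return [x / norm for x in h] if norm > 0 else h            # L2-normalize

# Toy example: two neighbors, the first visited 3x as often as the second.
Q = [[1.0, 0.0], [0.0, 1.0]]                      # identity, for readability
W = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]  # adds self and pooled vectors
z = convolve([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [0.75, 0.25], Q, W)
print(z)
```

With plain mean pooling the pooled neighborhood here would be [0.5, 0.5]; importance pooling instead pulls it toward the neighbor the random walks visited more often, giving [0.75, 0.25].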

TwHIN: Embedding the Twitter Heterogeneous Information Network for Personalized Recommendation (El-Kishky et al., 2022)

The paper describes TwHIN, which moves beyond traditional tweet and user-follow recommendation systems by learning embeddings over Twitter's heterogeneous information network to capture the complex relationships between users, tweets, and other entities. The core of this approach is knowledge-graph embeddings, a technique that maps the entities and relationships in TwHIN into a low-dimensional space. This lets Twitter capture the structural nuances of the network and learn embeddings that maximize the likelihood of observed interactions while minimizing the likelihood of unobserved ones. And while traditional systems focus on recommending accounts to follow, TwHIN extends to broader personalization tasks such as recommending ads, filtering offensive content, and improving search ranking.
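The idea of scoring observed interactions high and unobserved ones low can be sketched with a translation-style knowledge-graph score and negative sampling. This is a minimal sketch under assumptions: the score (source + relation) · target and the logistic loss are illustrative choices in the spirit of TransE-style embeddings, and the paper's exact scoring function, loss, and negative-sampling scheme may differ. All names and vectors are hypothetical.

```python
import math

def score(source, relation, target):
    """Translation-style score: (source + relation) . target."""
    return sum((s + r) * t for s, r, t in zip(source, relation, target))

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def edge_loss(source, relation, target, negative_targets):
    """Logistic loss for one observed edge plus sampled negative edges.

    softplus(-s) == -log(sigmoid(s)): small when an observed edge scores high.
    softplus(s)  == -log(sigmoid(-s)): small when an unobserved edge scores low.
    """
    loss = softplus(-score(source, relation, target))
    for negative in negative_targets:
        loss += softplus(score(source, relation, negative))
    return loss

# Hypothetical 2-d embeddings for one (user, "favorites", tweet) edge.
user = [0.5, 0.1]
favorites = [0.2, 0.0]
liked_tweet = [1.0, 0.0]     # observed interaction
random_tweet = [-1.0, 0.3]   # sampled negative (unobserved) interaction

print(score(user, favorites, liked_tweet))   # observed edge score
print(edge_loss(user, favorites, liked_tweet, [random_tweet]))
```

Training would adjust all the embeddings (including one vector per relation type) to lower this loss across many edges, which is how a single embedding table can serve several relation types in a heterogeneous graph.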

Next, trying to deploy a Streamlit PDF chat app

Building the app was not hard, and I even uploaded it to GitHub, but I was developing it locally and wondering whether I could run a Hugging Face LLM in production. I ran into problems even during development: for some reason, my laptop was no longer able to handle downloading and running gemma-2b-it. For the PDF, I used the 'What are embeddings?' PDF (~60 pages), embedded it, and stored the embeddings in MongoDB, but sadly I could not use them. I 'fought' this problem for a bit, and while eating I watched some videos on how to deploy a Hugging Face model to production. I found a lovely video about deploying on Azure/AWS/Google Cloud, but sadly all of them require a credit card haha. So, at least for now, I will put this on hold; in the future, I will set up an account and use the free resources to deploy a simple model for production inference.
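A back-of-the-envelope calculation helps explain why a laptop can struggle with a model like gemma-2b-it. This sketch assumes roughly 2.5 billion parameters (an approximation) and counts only the memory needed to hold the weights; activations, the KV cache, the Python process itself, and the embedding model for the PDF chunks all come on top of that.

```python
def weights_memory_gib(num_params, bytes_per_param):
    """Memory needed just to hold the model weights, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30

NUM_PARAMS = 2.5e9  # assumption: approximate parameter count for gemma-2b-it

for dtype, nbytes in [("float32", 4), ("bfloat16", 2), ("int8", 1), ("4-bit", 0.5)]:
    print(f"{dtype:>8}: ~{weights_memory_gib(NUM_PARAMS, nbytes):.1f} GiB")
```

Under these assumptions the weights alone take about 9.3 GiB in float32 and about 4.7 GiB in bfloat16, so the precision the model is loaded in (or quantizing it) matters a lot on a machine with 8 or 16 GB of RAM.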

That is all for today!

See you tomorrow :)