Hello :)

Today is Day 78!

A quick summary of today:- Out of the 4 sessions I joined from NVIDIA GTC, the AI careers in Europe one was the most interesting

- my application and thoughts about Stanford's AI professional certificate program

The 4 sessions that I had marked to join today were

1. Retrieval Augmented Generation: Overview of Design Systems, Data, and Customization

2. Navigating AI Careers in Europe

3. Customizing Foundation Large Language Models in Diverse Languages With NVIDIA NeMo

4. LLM Inference Sizing: Benchmarking End-to-End Inference Systems

But besides the 2nd one about AI careers in Europe, the other did not manage to catch my interest. They were talking about research on RAGs, how many GPUs are needed to do LLM inference, based on NeMo which I had not used up until now. Nevertheless, the 2nd talk was very good because I could ask questions to an HR rep.

This was the panel in the talk

They mentioned about difficulties such as the constant evolution in tech and the need to keep up, the increasing difficulty to find jobs for CS grads, and they put emphasis on the need to 'get out there' and be part of the community. Also because this was about Europe, they talked about some of the difficulties such as different languages (English might not be always the first language of a company), and the need to relocate.

Francesca arisani, from EiT RawMaterials informed about a tech talent website, funded by the European Union to help upskill future talent(

website). I checked it but at the moment there are not many courses in my interest area (AI), but I saved the website, and it is a place to keep an eye.

The biggest selling point for me was the chat - a Q&A with, I believe the panelists (or HR reps), and I tried to take advantage of it. Below are some of the questions and answers I asked/got (+ other people's).

Mine:

Q: is putting a link to my kaggle profile on my cv a good idea ? or just including it in my github's/personal website's readme is better?

A: I definitely recommend highlighting Kaggle on your resume. I am not sure what the "best" way to do that is. Most important, is that it is clear on your resume what skills you have and what projects you have worked on. People may not always click through links so make sure there is at least a summary of important projects in the resume itself.

Q: i am self-stidying into Al and write a daily blog. if not just a link on my cv, how can i talk about it on my CV? under skills write a few sentences?

A: The link is great on the CV, but many may not click through. The most important thing to highlight on the CV are your skills and projects. Make sure you highlight the various projects you have worked one. Links to details are great, but a summary must be easily apparent in the CV itself. When I review CVs, I will scan through looking for things that jump out at me. I might click through later to get more info but that is after I have already seen something that catches my eye.

Q: is a 2 page CV the norm for AI?

A: I think a 2 page CV is sufficient. Any more and you risk drowning the recruiter in information. Be sure to boil down the important skills and experiences. I really hate long CVs!

Other people:

Q: Someone who wants to work in AI, what advice for them? What should they learn? Jensen Huang didn't recommend people to learn coding, so what skills should one learn for a job in the future with AI?

A: Learning the fundamentals of a technology always pays off. You might not need to code, but knowing the idea underneath different ML or AI approaches will render very useful for landing a job on AI.

Q: what are the different platforms where we can showcase our projects apart from github?

A: Build a webapp. Participate in Kaggle competitions. Build demos and post them on YouTube. There are lots of ways to present the projects you have been working on.

Q: How do interview coding questions for Al-positions differ from coding questions for general software engineering positions?

A: In my experience most coding challenges are pretty general. Usually the Al part of the interview is more about questions, white papers, etc. I have seen some take home coding challenges (rather than direct online ones) where you might be asked to train a deep learning model or something like that.

About Stanford's AI professional certificate program

As seen from the pic, I actually made the application 8 days ago, I was not in a rush, and was just waiting for an email to say accepted/rejected. And today I checked the spam folder on the email I used, and the email was there... from the 13th haha.

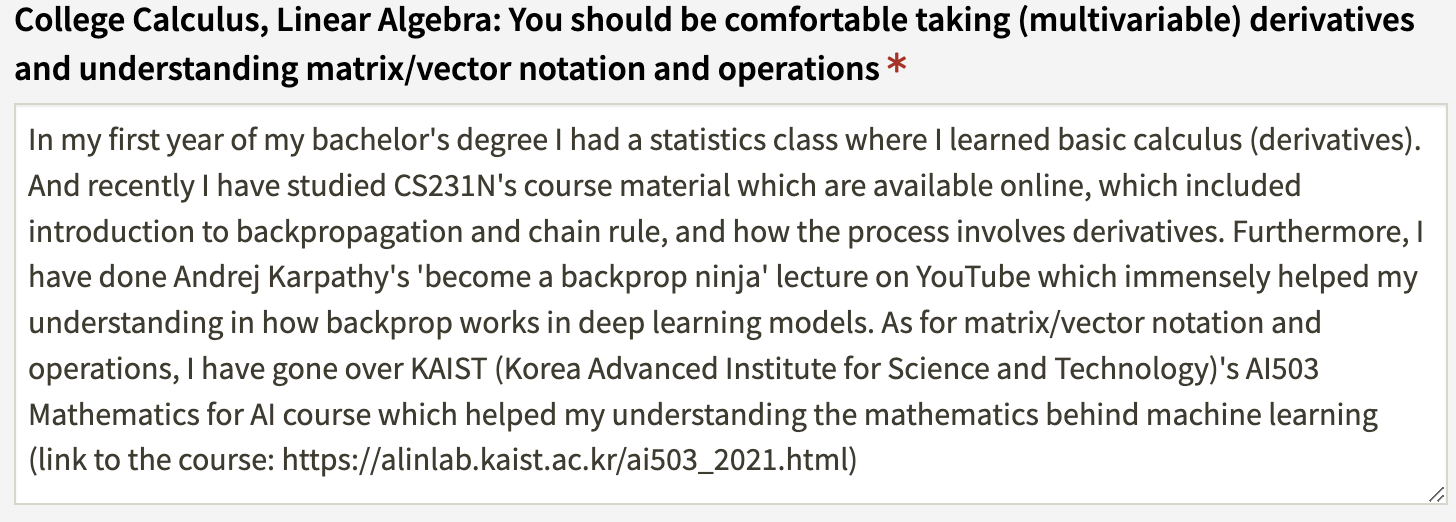

In the applications there is a rerequisites, and given that all my AI knowledge is in this blog, I had to convey it in a way that would make the trust me that I am not a beginner, and I am qualified for some 'hardcore' AI courses haha.

The applications are available to anyone, so hopefully I can share my answers ~

Firstly it was my python knowledge, which was easy since I had tangible experience during my time at Lloyds Banking Group.

Then came calculus and linear algebra. The former, frankly, I thought myself out of boredom in my 1st year of uni haha. But the latter I actually learned by myself in these last 78 days, when I covered AI503 Math for AI by KAIST.

Next, probability theory - again, maonly learned during these last 78 days and I said it as it is.

And finally - my motivations for taking the program:

Now that I am accepted, I have this internal doubt whether to start taking a course from the program.

Below are the upcoming courses, for reference.

The reason for my doubt is, it costs $1750 for one. So far (78 days) no money was spent on any online resource, and I made it this far. And to be honest, some of the courses in this program - XCS224N, XCS229, XCS221 - I have covered those, because their normal Stanford version has the content for free. But the reason I *want* to take at least one, is the structured way of learning, and the most important reason - *up-to-date content*, not just from anyone, but from Stanford - where I know the quality is top notch!!! Currently I am considering taking the ML with Graphs, Deep multi-task and meta learning, and afterwards the next time NLP with DL is ran - because I want some time to have passed between the 2023 fall (free) version (which I took throughout days 57~65) and when I take it, so that the latest about LLMs is out. But that is after a few months. I think at first doing the ML with Graphs and Meta learning courses would be sufficient.

Lastly, the PERL paper - Parameter Efficient Reinforcement Learning from Human Feedback

(I see papers all the time on X, from @_akhaliq but some really spark my interest - like this one)

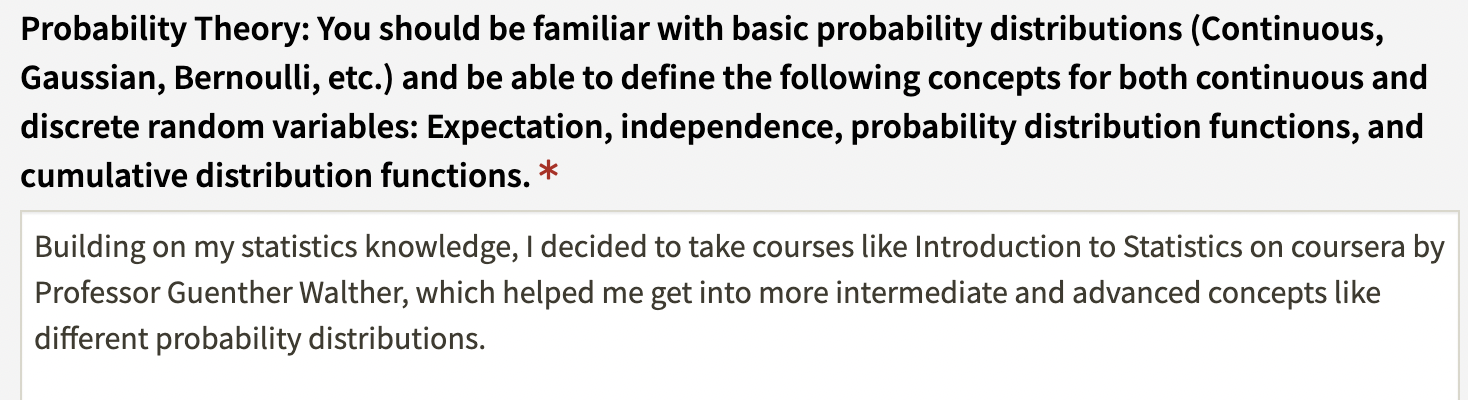

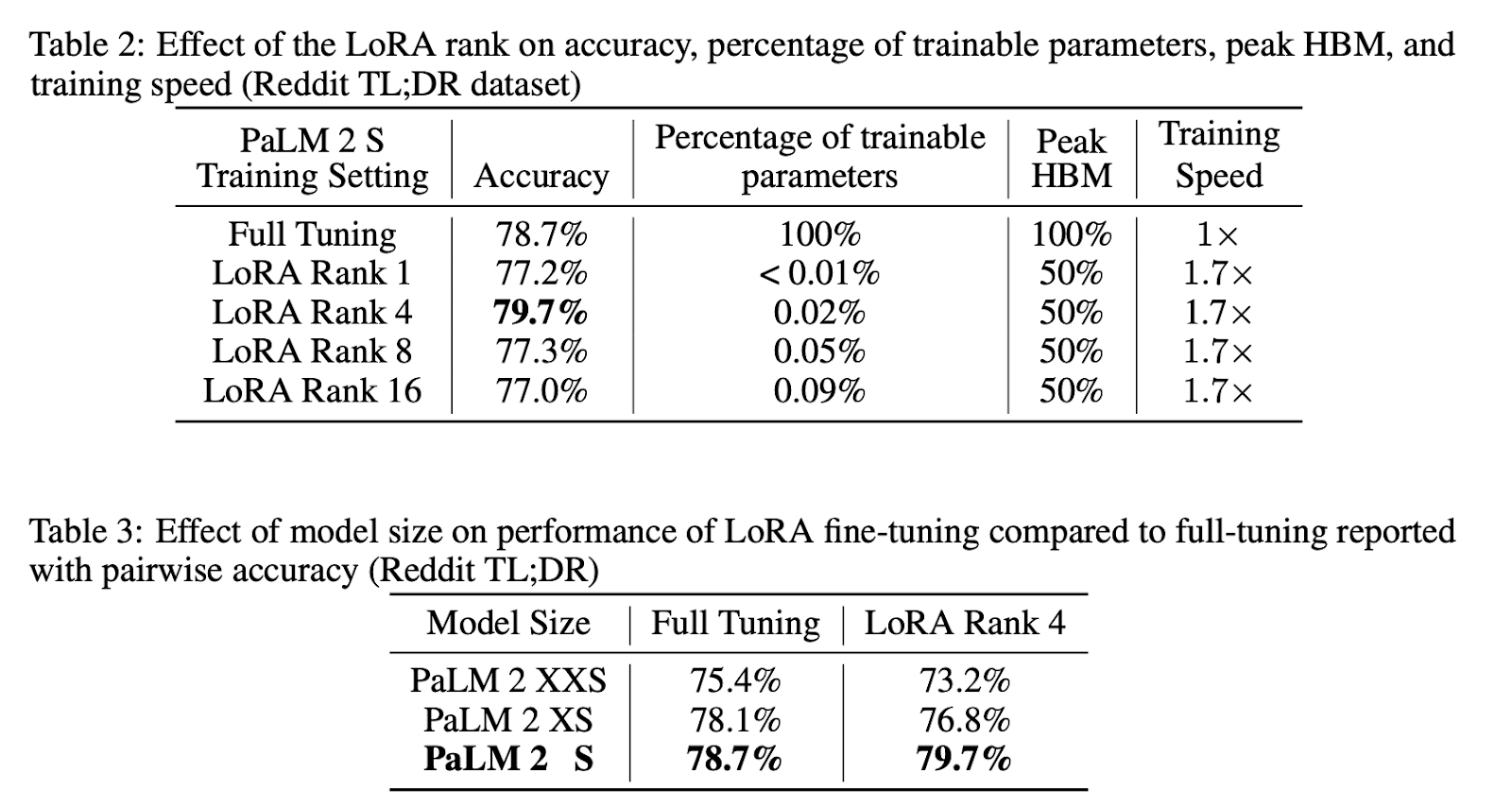

The paper addresses that fact that RLHF is an important part of LLMs' training process, so that we can align the outputs of the model with some predetermined behaviour. However, it is very complex and computationally expensive because we need to compute multiple instances of the LLM like for a reward model and an anchor model. In addition in order to create a good reward model, good training data is needed, which is difficult to collect. In this paper, they find a way to significantly reduce model params, and training time.

So their idea is using LoRA adapters attached to each attention projection matrix - train those, while keeping the model frozen, and at test time add those to the projection matrices.

They tested this on a bunch of datasets. One was on a reddit TL:DR dataset, containing post summaries. Based on that dataset, the results are as follows

They show that using their parameter efficient RLHF method allows for a significant reduction in model params, and also improves speed.

In the paper they show detailed information about each dataset they used, above is just 1 of the cases.

That is all for today!

See you tomorrow :)