[Day 60] Stanford CS224N (NLP with DL): Language modelling, RNNs and LSTMs

Hello :)

Today is Day 60!

A quick summary of today:
- Attempted Assignment 3: Neural Transition-Based Dependency Parsing

So far, including today, the lectures have been just phenomenal. Building on my previous NLP knowledge, they dive deeper into how modern language models came to be, and I feel I am establishing a solid foundation.

My notes from the lectures are below:

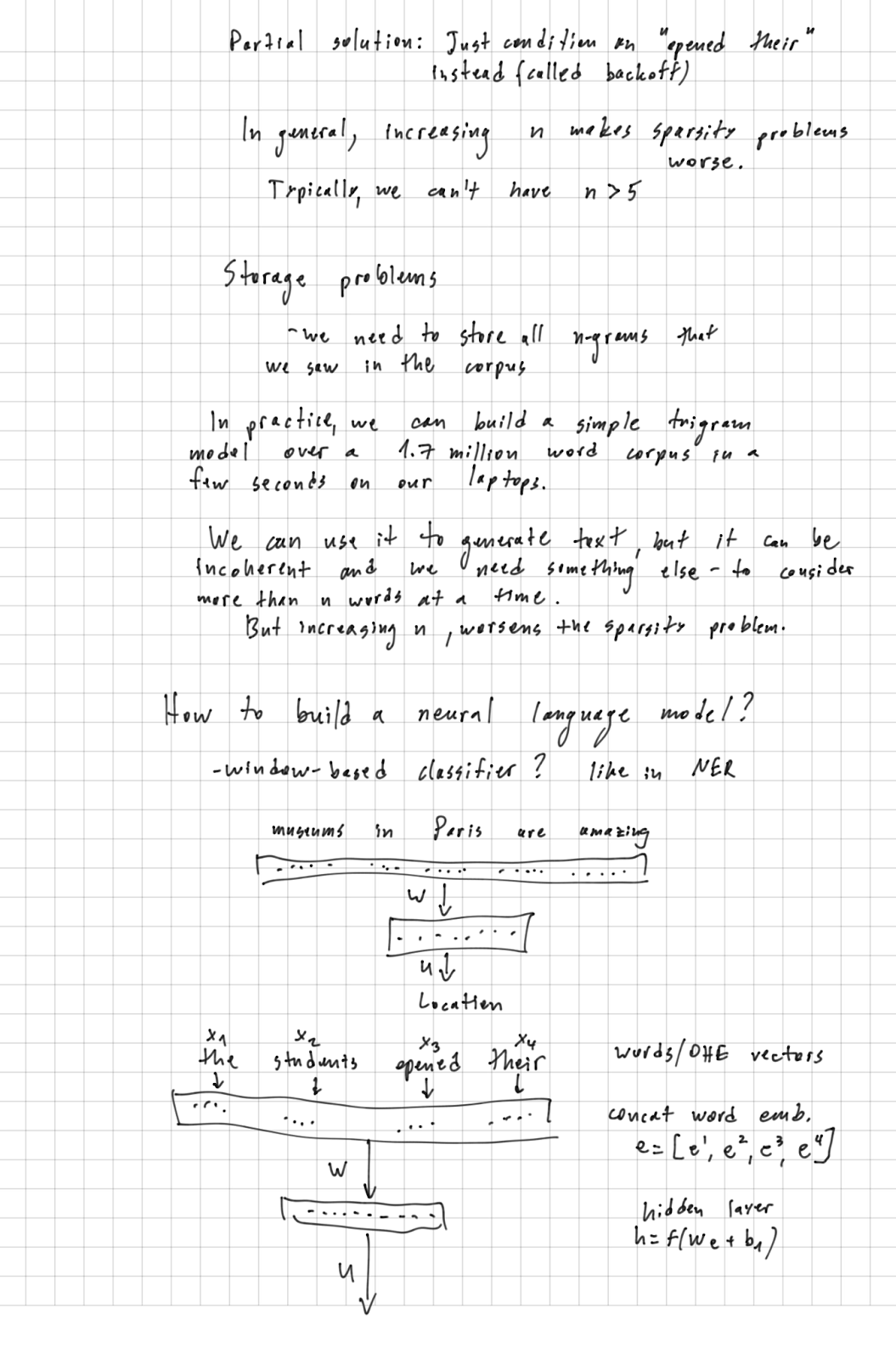

Lecture 5: Language models and RNNs

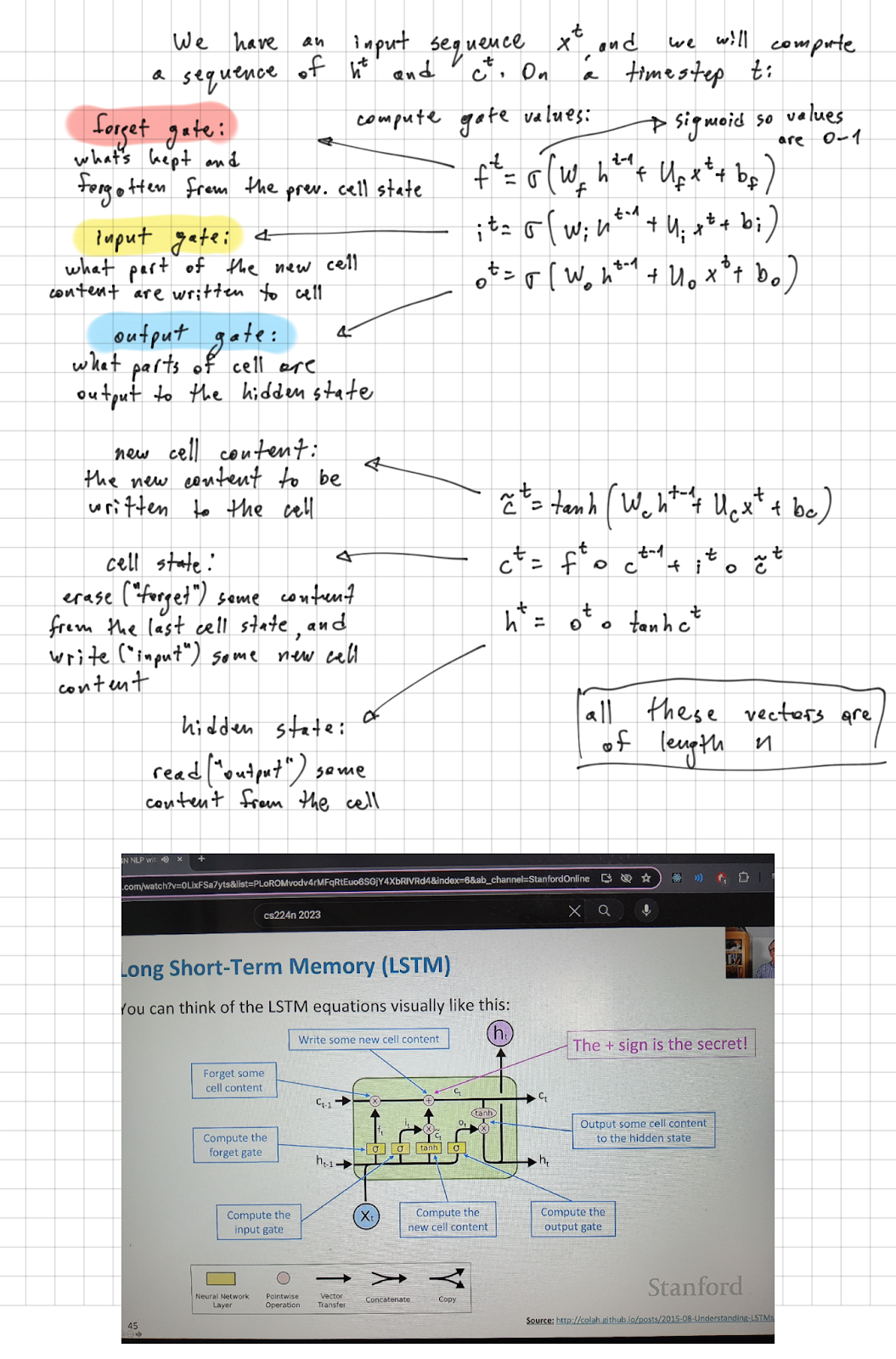

Lecture 6: Simple and LSTM RNNs

Tomorrow's turn is Lecture 7: Machine Translation, Attention, Subword Models, and possibly Lecture 8: Transformers, depending on Lecture 7's length and readings.

That is all for today!

See you tomorrow :)