[Day 54] I became a backprop ninja! (woohoo)

Hello :)

Today is Day 54!

A quick summary of today:
- Spent 8 hours going over Andrej Karpathy's backprop ninja tutorial

As I mentioned, I wanted to become (or at least attempt to become) a backprop ninja today. I had watched this particular tutorial twice before: the first time I had no idea what was going on, and the second time I could follow along. This third time, I wanted to participate and actually understand the why and the how of everything we have to do when we do manual backprop. My main goal for today was to become the doge on the left.

And so the line-by-line manual backprop began.

I was surprised :D that I could do most of these by myself, just by exploring the shapes of the tensors and working out the derivatives.
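As an example of letting the shapes guide the derivation, here is a sketch of the backward pass through a single linear layer, `logits = h @ W2 + b2`. The variable names are borrowed from Karpathy's makemore MLP, but the sizes and the upstream gradient `dlogits` are random placeholders assumed for illustration. Each gradient must have the same shape as the tensor it belongs to, and for a matrix multiplication there is only one way to combine `dlogits` with the other operand that produces that shape. The manual results are then checked against PyTorch's autograd:

```python
import torch

torch.manual_seed(42)
# Hypothetical sizes: batch of n examples, n_hidden hidden units, vocab_size outputs
n, n_hidden, vocab_size = 32, 64, 27

h = torch.randn(n, n_hidden, requires_grad=True)
W2 = torch.randn(n_hidden, vocab_size, requires_grad=True)
b2 = torch.randn(vocab_size, requires_grad=True)

logits = h @ W2 + b2                 # (n, vocab_size)

# Placeholder upstream gradient dL/dlogits, as if it came from the loss
dlogits = torch.randn(n, vocab_size)
logits.backward(dlogits)             # autograd fills h.grad, W2.grad, b2.grad as a reference

with torch.no_grad():
    # Manual backprop: the only arrangements whose shapes line up
    dh = dlogits @ W2.T              # (n, vocab) @ (vocab, n_hidden) -> (n, n_hidden)
    dW2 = h.T @ dlogits              # (n_hidden, n) @ (n, vocab) -> (n_hidden, vocab)
    db2 = dlogits.sum(0)             # b2 was broadcast over the batch, so sum over dim 0

for name, manual, ref in [("h", dh, h.grad), ("W2", dW2, W2.grad), ("b2", db2, b2.grad)]:
    print(f"{name:3s} matches autograd: {torch.allclose(manual, ref, atol=1e-5)}")
```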

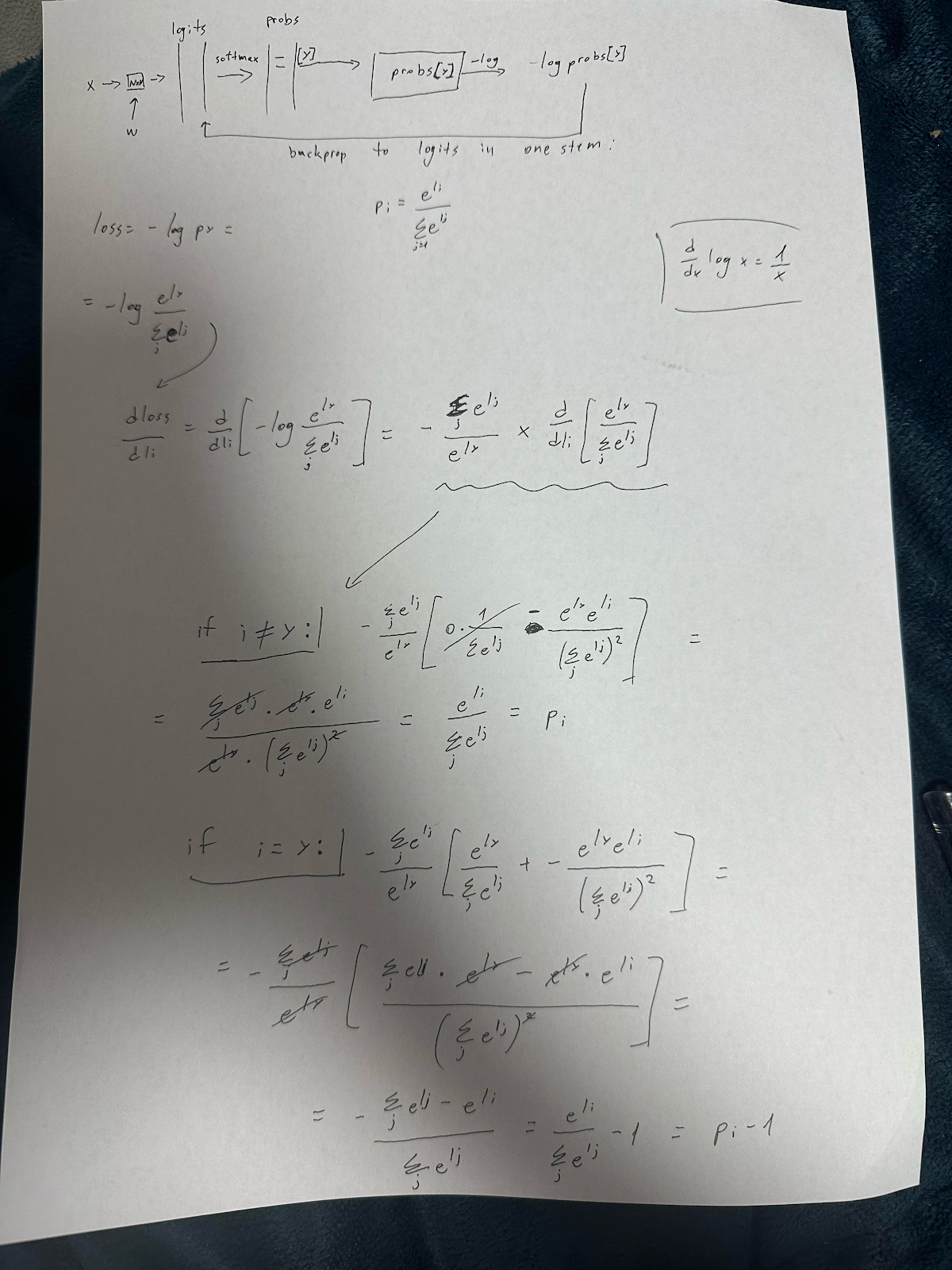

After the manual line-by-line pass, it was time for some shortcuts and simplifications.

In the forward pass, obtaining the loss first took us 8 lines of code, but we can get the same result just by taking the logits and the true Ys and passing them to PyTorch's `cross_entropy` loss function.

In code, those 8 lines collapse into a single function call.
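To make the shortcut concrete, here is a sketch that runs the explicit 8-line computation, modeled on the version in Karpathy's notebook, and compares it with `torch.nn.functional.cross_entropy`. It assumes random stand-in logits and labels; the names `logits`, `Yb`, and `n` follow the tutorial's conventions, and the sizes 32 and 27 are illustrative only:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(42)
n, vocab_size = 32, 27                        # hypothetical batch size and vocabulary size
logits = torch.randn(n, vocab_size)           # stand-in for the network's output
Yb = torch.randint(0, vocab_size, (n,))       # stand-in for the true labels

# The long way: 8 explicit lines, each of which needs its own manual backward step
logit_maxes = logits.max(1, keepdim=True).values
norm_logits = logits - logit_maxes            # subtract the row max for numerical stability
counts = norm_logits.exp()
counts_sum = counts.sum(1, keepdim=True)
counts_sum_inv = counts_sum**-1
probs = counts * counts_sum_inv               # softmax probabilities, one row per example
logprobs = probs.log()
loss_manual = -logprobs[torch.arange(n), Yb].mean()  # mean negative log-likelihood of the true labels

# The short way: one call performs the same computation
loss_fast = F.cross_entropy(logits, Yb)

print(loss_manual.item(), loss_fast.item())
```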

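Likewise, instead of the long backward pass through all of those lines, the gradient with respect to the logits can be computed directly in just a few lines.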
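The backward pass shrinks because, for the mean cross-entropy over a batch of `n` examples, the gradient with respect to the logits works out to `(softmax(logits) - one_hot(Yb)) / n`. The following minimal sketch, again using random stand-in data and the tutorial-style names as assumptions, computes this directly and checks it against PyTorch's autograd:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(42)
n, vocab_size = 32, 27                        # hypothetical batch size and vocabulary size
logits = torch.randn(n, vocab_size, requires_grad=True)
Yb = torch.randint(0, vocab_size, (n,))

# Reference gradient from autograd
loss = F.cross_entropy(logits, Yb)
loss.backward()

with torch.no_grad():
    dlogits = F.softmax(logits, 1)            # start from the predicted probabilities
    dlogits[torch.arange(n), Yb] -= 1         # subtract 1 at each example's true class (the one-hot)
    dlogits /= n                              # the loss is a mean over the batch

print(torch.allclose(dlogits, logits.grad, atol=1e-6))
```

Intuitively, each row of the gradient pushes the probability of the correct class up and every other class down, in proportion to how much probability the network currently assigns to it.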

For the full version, again, I invite you to look at my Google Drive link, as well as Andrej Karpathy's full video.

At the end of the day, yes, I did spend my whole day on this, but I think it was definitely worth it. Andrej's help was needed in some spots, when I just could not figure out what to multiply by what (especially in some of the more complicated backward-pass lines), but I do believe I now have a strong understanding of the backpropagation process.

At the end of his video Andrej says: "If you understood a good chunk of it and if you have a sense of that then you can count yourself as one of these buffed doges on the left." That said...

Mission accomplished!

I will still review this, and I will probably keep thinking about the code and going over it in my head to deepen my understanding, but what I got today is invaluable. Thank you, Andrej Karpathy! :)

That is all for today!

See you tomorrow :)