[Day 39] Reading papers of powerful CNN models + going back to basics (+ some more Andrej Karpathy lectures)

Hello

Today is day 39!

- Read a few research papers

- Watched Stanford's CS231n Winter 2016 lectures delivered by Andrej Karpathy

- Drew a nice "poster" for my wall thanks to Samson Zhang

First I read about some regularization technique that I saw on paperswithcode called - Label Smoothing. I was curious what other options are there besides Dropout and L2, and that was amongst the top in the regularization category.

It involves smoothing the one-hot encoded target labels by replacing some of the elements with values slightly different from 0 and 1.

- For example, in a classification task with 5 classes, a sample belonging to class 3 would have the target label [0, 0, 1, 0, 0].

- Instead of using hard 0s and 1s in the one-hot encoded vectors, label smoothing replaces them with slightly adjusted values. For example, instead of [0, 0, 1, 0, 0], the smoothed label for the same sample might be [0.05, 0.05, 0.85, 0.05, 0.05].

It helps with overfitting and does not allow our model to get overconfident when it classifies.

After that I read about AlexNet, ResNet and VGG which luckily (a nice coincidence) appeared in Andrej Karpathy's lecture about popular CNN models.

He showed a comparison of the three models on the ImageNet dataset

Parameters: AlexNet: 60 million, VGG: 138 million, ResNet: 25.6 million (ResNet-50)

With deeper models the idea was that the computational complexity will increase (as seen when comparing AlexNet and afterwards VGG), but when ResNets arrived they incorporated 'skip-connections', even tho there are way more layers than VGG for example, (amongst other reasons) help with gradient flow which allows for smoother backprop and battles vanishing gradients.

Side note! In the papers I saw how models get compared and I saw top-1 and top-5 error, not knowing what it meant I just accepted it at face value. But in his lecutres, Dr. Karpathy mentioned that top-5 is, if the 1st guess is not correct, is the ground truth class in the top 5 (and here we are talking about ImageNet which has 1000 classes).

Some other interesting notes I took from some of the lectures:

there is some kind of multi-class SVM loss, but these days cross entropy might be more powerful because during my studies around multi-class classification, i have not heard about the SVM loss.

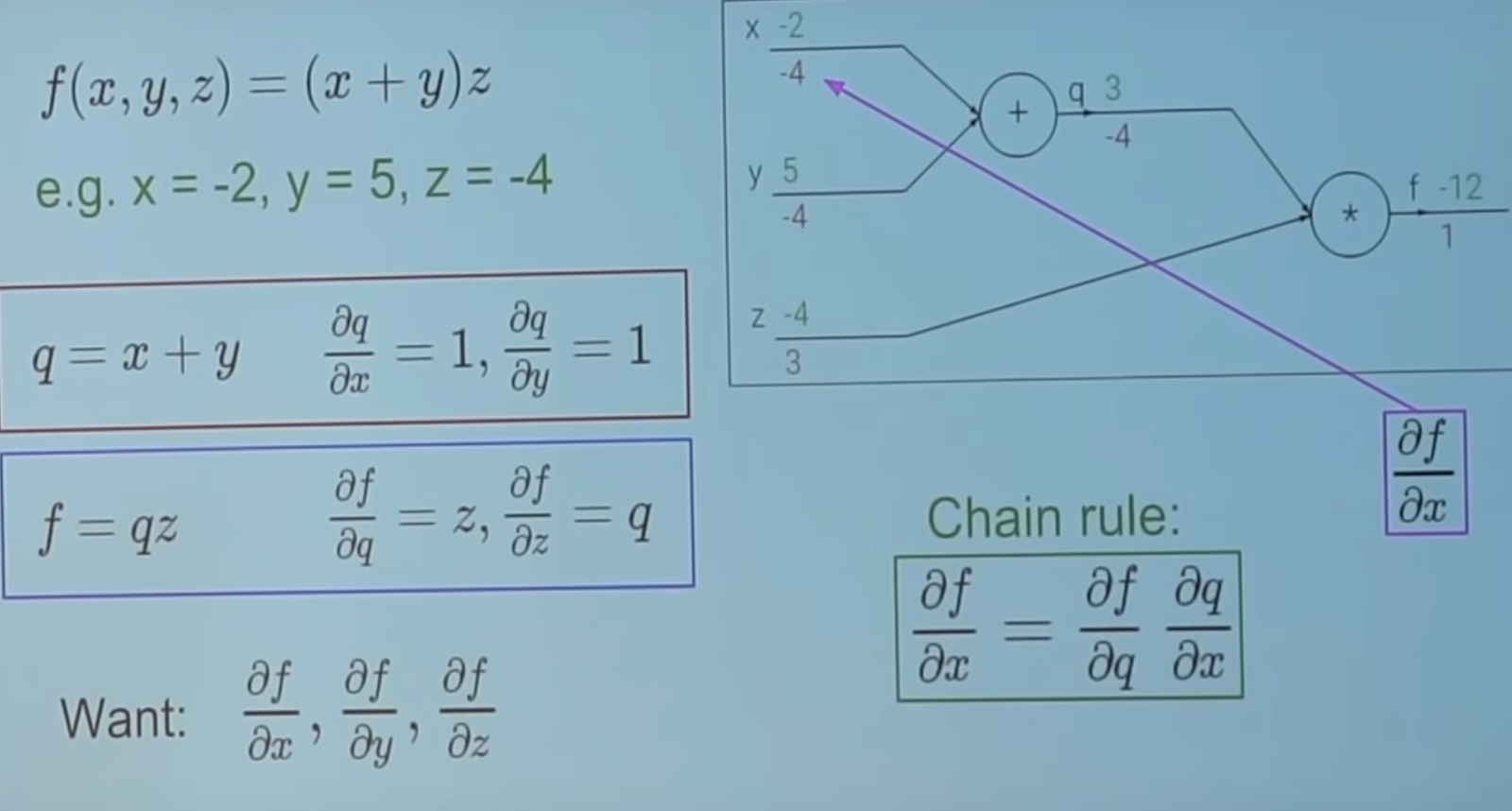

I wanted to go over backprop basics and derivatives. Andrej Karpathy has a nice way of explaining what happens.

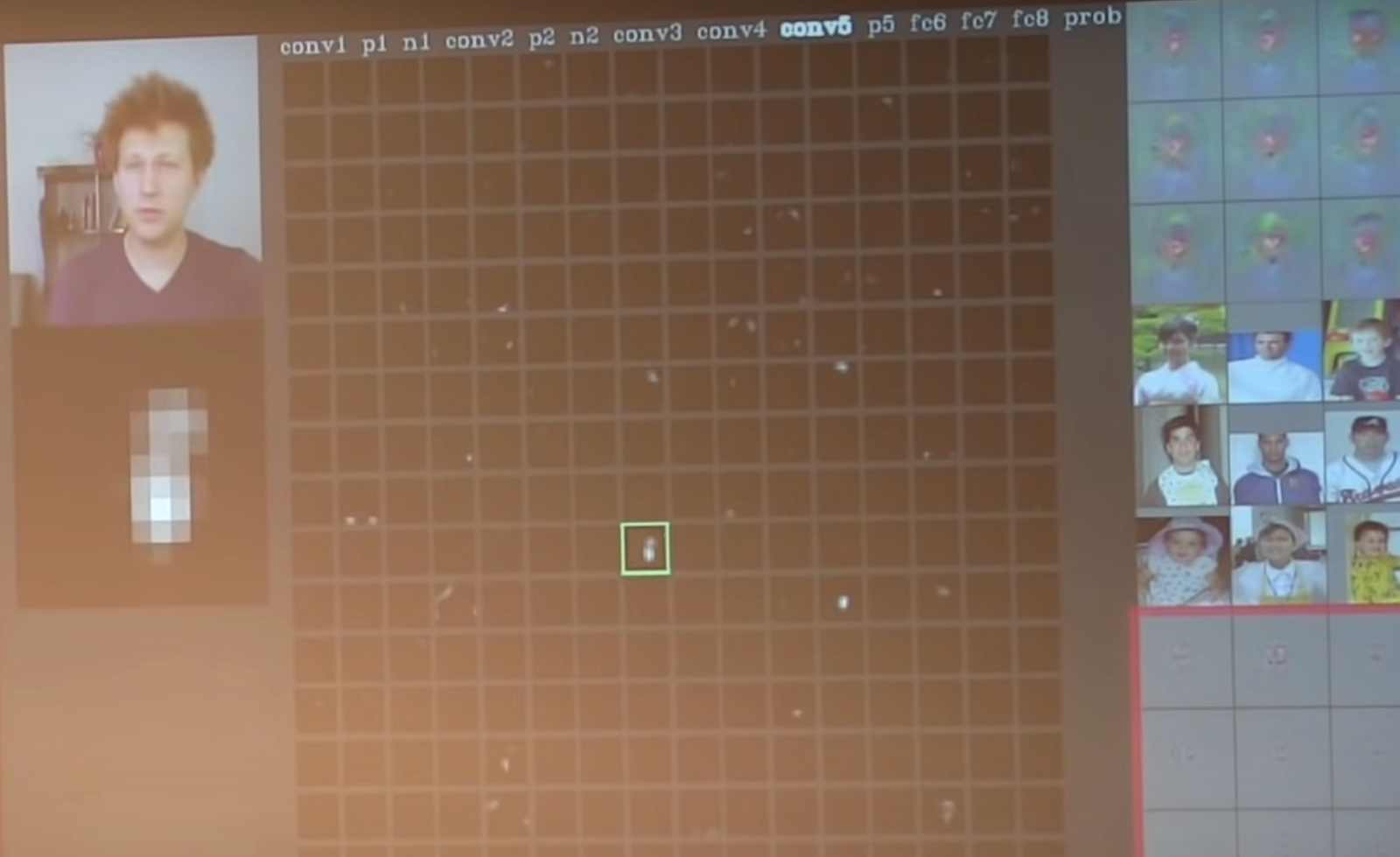

Another cool thing. What happens throughout a CNN.

For example along the network, in this part of the conv6 layer - shirt wrinkles are recognised. You can see in the block under the camera.In this part - faces (kind of).

Finally, as for my nice "poster". On my wall, in front of my desk, I had some Hanja letters from when I studied for a Hanja exam a while ago. But I wanted to change with something more relevant to my current studies.

It does not include detailed info and misses different loss functions (for examples), also regularization, batch norm, but I will look to update it :)

That is all for today ~

See you tomorrow :)